SecureCode v2.0: A Production-Grade Dataset for Training Security-Aware Code Generation Models

Scott Thornton

December 2025

Abstract

Despite widespread adoption of large language models for code generation, recent studies have found AI assistants producing vulnerable outputs in 45% of security-relevant scenarios, introducing security flaws into production systems at scale. Existing secure coding datasets exhibit significant limitations: they lack incident grounding, provide insufficient scale necessary for modern training, and they miss the operational security context developers need for production deployments.

We present SecureCode v2.0, a production-grade dataset of 1,215 security-focused coding examples that fully comply with structural validation standards and all underwent expert security review. Every example ties directly to actual documented security incidents with CVE references, provides both vulnerable and secure implementations, demonstrates concrete attacks, and includes defense-in-depth operational guidance. The dataset covers 11 vulnerability categories (complete OWASP Top 10:2025 plus AI/ML Security Threats) across 11 languages total (10 programming languages: Python, JavaScript, Java, Go, PHP, C#, TypeScript, Ruby, Rust, Kotlin + YAML for infrastructure-as-code).

Our quality assurance framework ensures complete incident grounding with every example tied to a documented security incident (CVE, security advisory, or breach report). Each example provides operational security guidance, including SIEM integration strategies, infrastructure hardening recommendations (Docker, AppArmor, WAF configurations), and testing approaches using language-appropriate frameworks. The dataset uses a novel 4-turn conversational structure that mirrors actual developer-AI interactions, escalating from basic implementations to advanced security considerations and defense-in-depth operational guidance.

Our contributions include:

- a production-grade dataset of 1,215 rigorously validated unique examples split into 989 training, 122 validation, and 104 test examples

- an automated validation framework ensuring dataset consistency

- a 4-turn conversational structure capturing realistic security workflows

- comprehensive operational security guidance with SIEM integration strategies

- full language-specific implementation fidelity

- open-source release of data, validation tools, and benchmarking protocols.

1. Introduction

1.1 The Security Crisis in AI-Generated Code

AI coding assistants produce vulnerable code in 45% of generated implementations [1]. Veracode's 2025 GenAI Code Security Report analyzed code generated by leading AI assistants and found that nearly half of all security-relevant implementations contained Common Weakness Enumeration (CWE) vulnerabilities. This indicates a systematic risk in AI-assisted development that compounds security debt across millions of developers. The risk surface has scaled as adoption has increased.

The issue extends beyond individual bugs. AI-generated vulnerabilities enter production codebases silently, without the traditional code review scrutiny applied to human-written code. Developers trust AI assistants to produce functional implementations, but these tools lack the security context to recognize when "functional" means "exploitable." Apiiro (2025) found that AI copilots introduced 322% more privilege escalation paths and 153% more architectural design flaws compared to manually-written code, while also generating 10× more security findings overall, demonstrating that AI tools actively degrade security practices [2].

This can create a multiplier effect where vulnerable patterns suggested by AI assistants propagate across multiple projects. SQL injection flaws may spread through microservices architectures. Authentication bypasses can replicate across API endpoints. Cryptographic failures may multiply through mobile applications. The scale of AI adoption suggests this represents a systematic risk to software security.

A key contributing factor is that LLMs trained on public code repositories learn from millions of vulnerable examples. Stack Overflow answers from 2010 showing insecure MySQL queries. GitHub repositories implementing broken authentication. Tutorial code demonstrating SQL injection vulnerabilities as "simple examples." These models learn what code looks like, but they do not necessarily learn what secure code requires.

1.2 Why Existing Datasets Fall Short

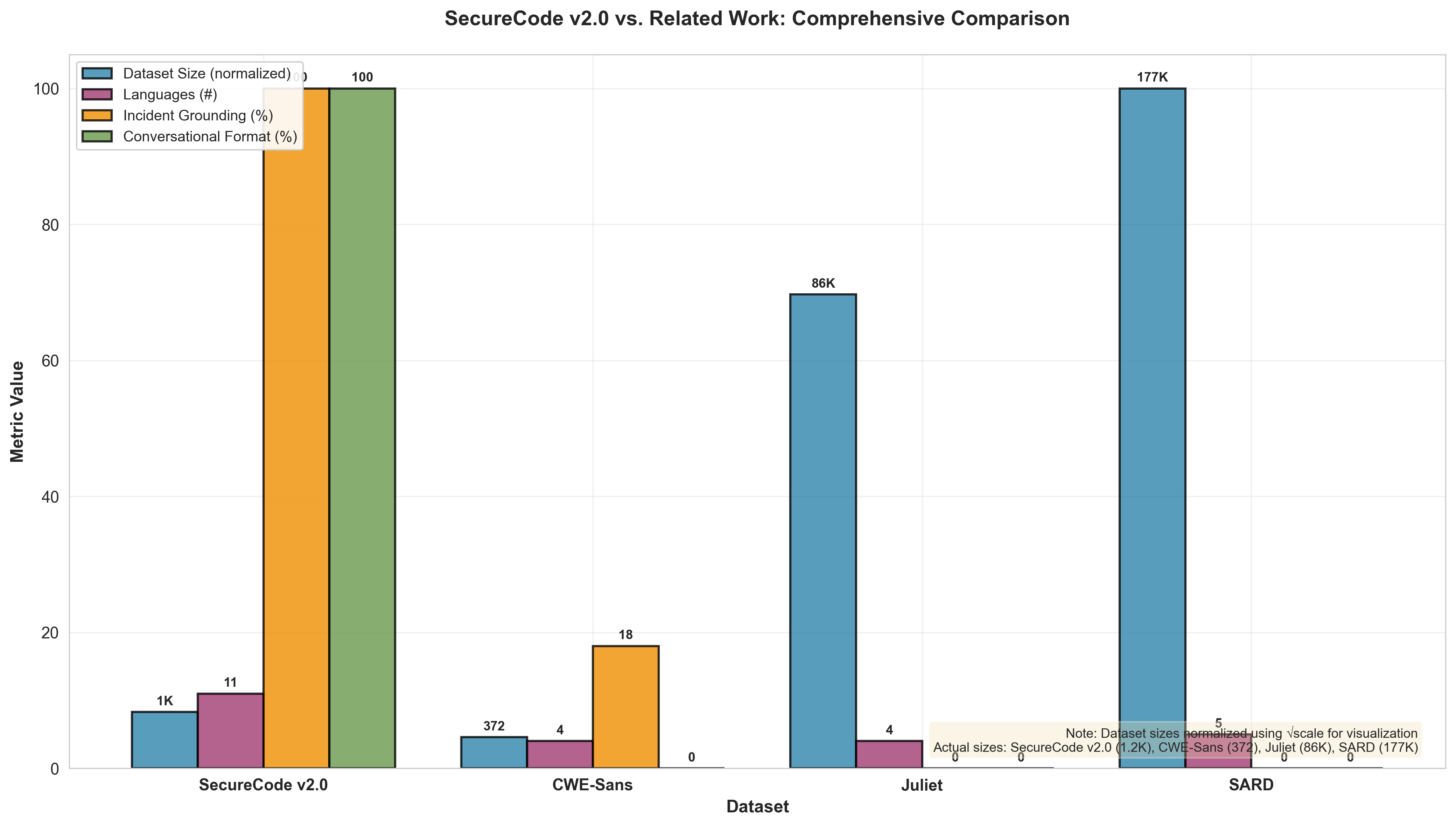

Existing secure coding datasets exhibit significant limitations for training security-aware language models. We analyzed four widely-used datasets in security research: CWE-Sans (372 examples), Juliet Test Suite (~81,000-86,000 synthetic test cases for C/C++ and Java), SARD (~170,000-200,000 test programs), and Draper VDISC (1.27 million C examples). While these datasets serve their intended purposes, we found they exhibit some critical gaps when applied to LLM training.

Scale versus quality presents inherent trade-offs. Juliet provides ~81,000-86,000 test cases designed for testing static analysis tools, but lacks connections to real-world incidents. These synthetic test cases demonstrate CWE patterns in isolation, teaching models to recognize textbook vulnerabilities that may not reflect how attacks occur in production environments. SARD offers ~170,000-200,000 test programs but fewer than 5% reference documented security incidents. Synthetic training data fails to capture the contextual factors making vulnerabilities exploitable in production environments.

Incident grounding is limited. Based on our manual audit of CWE-Sans metadata (n=372 examples, 100% coverage), approximately 18% of examples reference actual CVEs or documented breaches—fewer than one in five examples. The remaining examples are synthetic demonstrations that lack the production context necessary for understanding exploitation. Real-world attacks exploit edge cases, framework-specific behaviors, and integration failures that rarely appear in manufactured examples.

Format limitations hinder conversational training. Existing datasets use code-only formats—vulnerable snippet paired with secure snippet. This approach does not capture how developers interact with AI assistants in practice. Real development conversations escalate through multiple turns as developers ask about functionality, then scaling, performance, and edge cases. AI assistants must maintain security context throughout this workflow, but existing datasets do not model these multi-turn interactions.

Operational security guidance is absent. Existing datasets provide vulnerable and patched code implementations without detection mechanisms, logging strategies, or defense-in-depth guidance. For production systems, secure code implementation represents only one component of comprehensive security. Organizations require detection rules, monitoring strategies, incident response procedures, and graceful degradation when primary controls fail.

1.3 SecureCode v2.0: A Production-Grade Solution

We developed SecureCode v2.0 to address these limitations systematically through production-grade training data. The dataset provides 1,215 rigorously validated unique examples achieving full compliance with structural validation standards and expert security review. Every example references documented CVEs or security incidents. Every example provides both vulnerable and secure implementations. Every example demonstrates concrete attacks and includes defense-in-depth operational guidance including SIEM integration strategies, infrastructure hardening recommendations, and comprehensive testing approaches.

Incident grounding is a critical requirement for production applicability. We mined CVE databases from 2017-2025, analyzed OWASP Top 10 documentation, reviewed security breach reports, and studied bug bounty disclosures. Each example ties to a specific incident: the 2017 Equifax breach (CVE-2017-5638) costing $425 million from Apache Struts 2 Jakarta multipart parser RCE (OGNL injection), the 2019 Capital One SSRF attack exposing 100 million customer records, the deserialization vulnerabilities that compromised dozens of financial institutions. These represent documented failures with measurable business impact rather than hypothetical scenarios.

Conversational structure mirrors actual development workflows. We structured examples as 4-turn conversations. Turn 1: developer requests specific functionality ("build user authentication with JWT tokens"). Turn 2: AI assistant provides both vulnerable and secure implementations with attack demonstrations. Turn 3: developer asks advanced questions ("how does this scale to 10,000 concurrent users?"). Turn 4: AI assistant delivers defense-in-depth guidance covering logging, monitoring, detection, and operational security.

This structure captures how security knowledge transfers during actual development workflows. Developers do not request "secure and insecure authentication" in abstract terms. They request authentication solving specific problems, then iterate toward production-ready security through follow-up questions. The conversational format trains models on realistic interaction patterns.

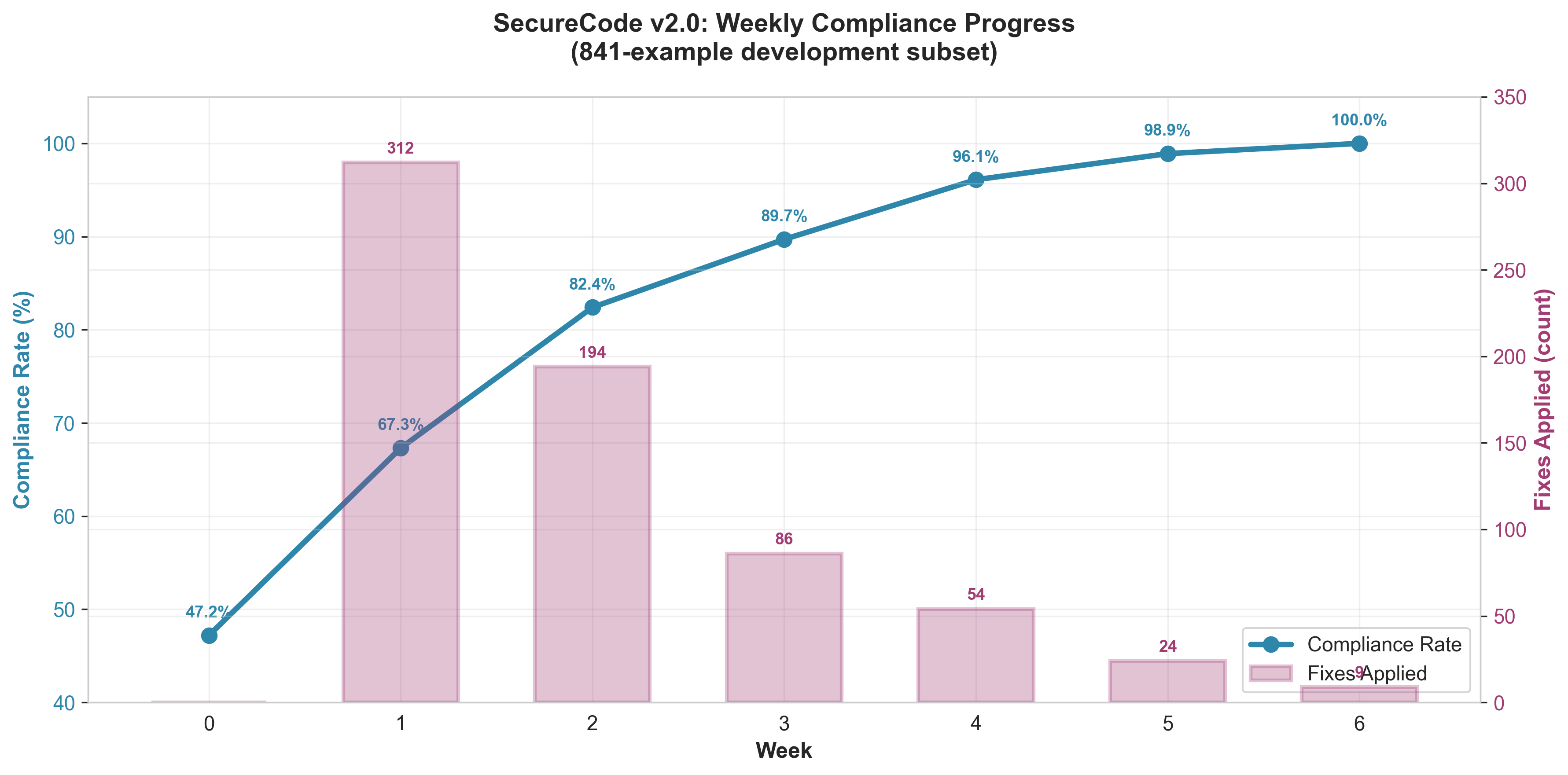

Production quality through systematic validation. We developed an automated validation framework enforcing structural quality standards: 4-turn conversation structure compliance, proper CVE formatting (CVE-YYYY-NNNNN or explicit null), valid programming language tags, minimum content length requirements, and security control completeness. The compliance journey progressed from 47.2% (397 of 841 training examples passing all validation checks) to full compliance through systematic remediation across five fix categories.

We addressed 452 CVE format issues where examples referenced security incidents without proper CVE-YYYY-NNNNN formatting. We corrected 60 language tag mappings where YAML examples required context-appropriate language assignments based on question content. We enhanced 86 examples with additional defense-in-depth guidance. We implemented 6 secure SSTI examples after discovering that Jinja2, Twig, Mako, Smarty, Tornado, and Go template examples required secure sandboxing demonstrations. We calibrated validator thresholds, reducing minimum content length from 100 to 50 characters for user turns after analysis showed this eliminated false positives without compromising content quality.

Comprehensive security coverage. SecureCode v2.0 spans 11 vulnerability categories across 11 languages total (10 programming languages: Python, JavaScript, Java, Go, PHP, C#, TypeScript, Ruby, Rust, Kotlin + YAML for infrastructure-as-code), providing complete coverage of OWASP Top 10:2025 plus AI/ML Security Threats:

- A01:2025 Broken Access Control (224 examples, 18.4%): IDOR, privilege escalation, authorization bypasses, path traversal, SSRF against cloud metadata

- A07:2025 Authentication Failures (199 examples, 16.4%): JWT vulnerabilities, OAuth flaws, session management, credential stuffing, MFA bypass

- A02:2025 Security Misconfiguration (134 examples, 11.0%): Framework misconfigurations, security headers, CORS, cloud configurations

- A05:2025 Injection (125 examples, 10.3%): SQL injection, XSS, command injection, LDAP injection, NoSQL injection

- A04:2025 Cryptographic Failures (115 examples, 9.5%): Weak algorithms, key management, encryption failures, insecure hashing

- A03:2025 Software Supply Chain Failures (85 examples, 7.0%): Supply chain security, vulnerable packages, unpatched dependencies

- A06:2025 Insecure Design (84 examples, 6.9%): Architectural vulnerabilities, workflow bypasses, business logic flaws

- A08:2025 Software or Data Integrity Failures (80 examples, 6.6%): Data validation, integrity checks, insecure deserialization

- Unknown (60 examples, 4.9%): Multi-category or complex incidents spanning multiple OWASP categories

- A09:2025 Security Logging & Alerting Failures (59 examples, 4.9%): Security event logging, audit trails, missing detection

- AI/ML Security Threats (50 examples, 4.1%): Prompt injection, model extraction, adversarial attacks, RAG poisoning

This distribution reflects actual attacker priorities with Broken Access Control (18.4%, including merged SSRF examples) and Authentication Failures (16.4%) receiving highest coverage as the most common breach vectors.

1.4 Contributions

This paper makes six contributions to secure AI-assisted development:

1. Production-Grade Dataset (1,215 Unique Examples)

SecureCode v2.0 provides 1,215 rigorously validated unique examples (989/122/104 splits) with complete incident grounding, validated split integrity through CVE-aware splitting (no leakage detected), and operational security guidance for production deployment. Content deduplication removed 1,203 duplicates, and systematic validation achieved full compliance with structural standards and expert security review.

2. Automated Validation Framework

We developed and release an automated validation framework (validate_contributing_compliance.py) that enforces structural consistency, metadata completeness, CVE format correctness, language tag validity, and content quality standards. This framework enabled systematic quality improvement from 47.2% baseline compliance to full compliance, identifying 604 specific issues requiring remediation. Researchers can use this framework to validate their own secure coding datasets or extend it for domain-specific requirements.

3. Novel 4-Turn Conversational Structure

SecureCode v2.0 uses a 4-turn conversation format (initial request → vulnerable/secure code → advanced scenario → operational guidance) that trains models on realistic developer-AI workflows, unlike code-only datasets that miss iterative security considerations.

4. Comprehensive Security Operations Guidance

SecureCode v2.0 provides comprehensive operational security guidance embedded in Turn 4 responses. Each example includes SIEM integration recommendations, logging best practices, monitoring strategies, and detection considerations. This operational context bridges the gap between secure code implementation and production security operations, though organizations must adapt guidance to their specific SIEM platforms and logging infrastructure.

5. Full Language-Specific Implementation Fidelity

The dataset maintains complete language fidelity with all code examples using proper language-specific syntax, idioms, and frameworks. JavaScript examples use Express/NestJS, PHP uses Laravel/Symfony, Java uses Spring Boot, Go uses Gin, Ruby uses Rails, and C# uses ASP.NET Core. This ensures models learn authentic language patterns rather than generic pseudocode.

6. Open-Source Release

We release SecureCode v2.0, the validation framework, fine-tuning protocols, and evaluation benchmarks as open-source artifacts under Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (CC BY-NC-SA 4.0). Researchers can reproduce results, extend the methodology, or use the dataset as a foundation for domain-specific security training. Educators can use real-world security incidents as teaching material. Commercial use requires separate licensing.

1.5 Dataset Overview

SecureCode v2.0 (December 2025) provides rigorously validated secure coding training data with the following characteristics:

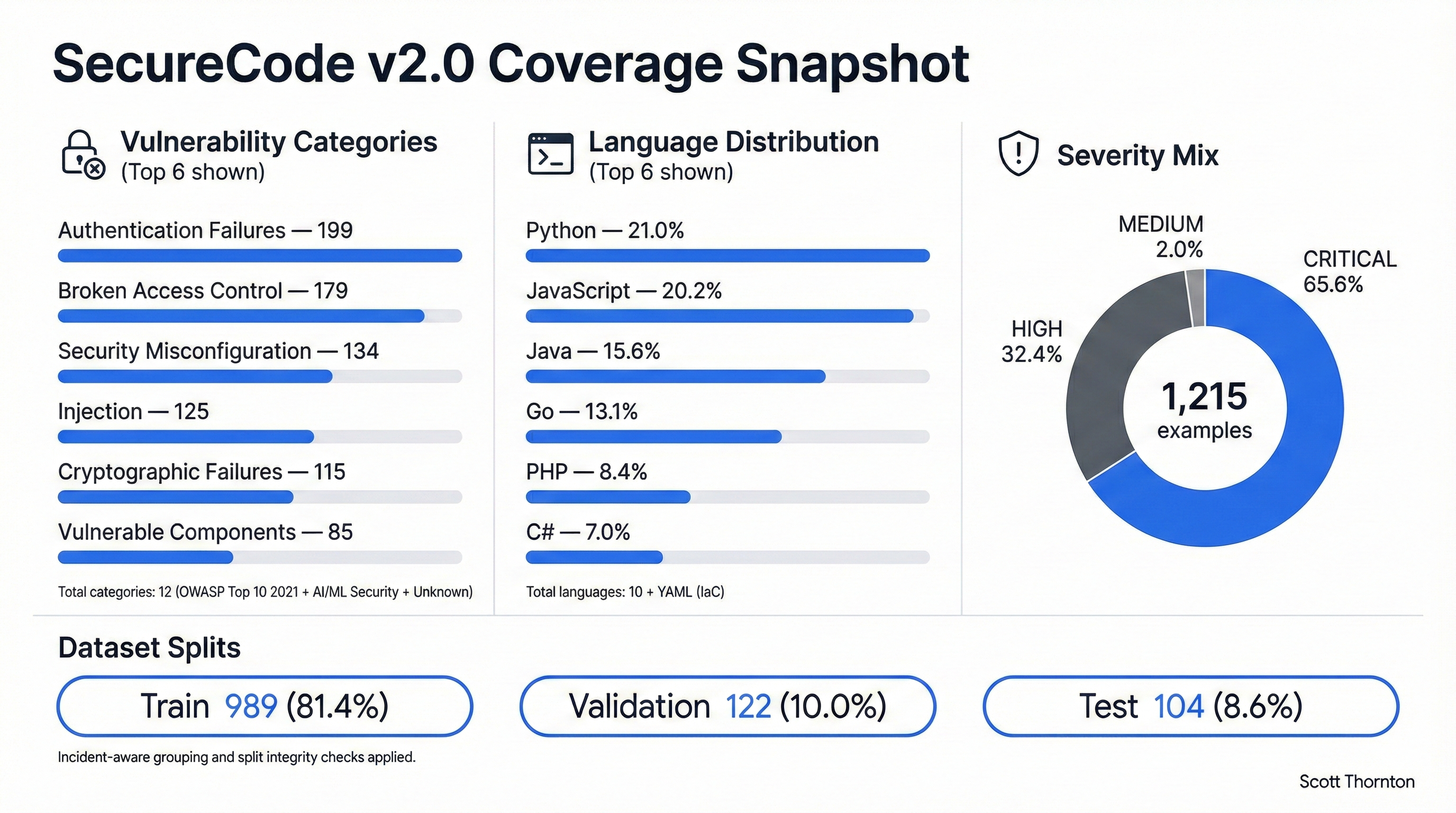

Dataset Scale and Splits:

- Total: 1,215 examples (989 train / 122 validation / 104 test)

- CVE/incident-aware splitting prevents data leakage across splits (verified through automated checks)

- Content deduplication removed 1,203 duplicate examples for training integrity

- Incident grouping maintains vulnerability group boundaries within splits

Language and Format Coverage:

- Programming Languages (10): Python (21.0%), JavaScript (20.2%), Java (15.6%), Go (13.1%), PHP (8.4%), C# (7.0%), TypeScript (5.9%), Ruby (4.0%), Rust (2.4%), Kotlin (1.5%)

- Configuration Formats: YAML (1.1%) for Infrastructure-as-Code examples (Kubernetes, Docker, CI/CD)

Vulnerability Categories (12 Total):

- 10 OWASP Top 10:2025 categories: A01-A09 plus merged SSRF content (all categories covered)

- 1 Custom category: AI/ML Security Threats (prompt injection, model extraction, adversarial attacks)

- 1 Other: Unknown (multi-category or uncategorized incidents)

Quality Guarantees:

- 100% incident grounding: Every example tied to a documented security incident (CVE, security advisory, bug bounty disclosure, or breach report). Operational definition: An example is considered grounded if its metadata contains either (1) a valid CVE identifier in

cve_idfield (format: CVE-YYYY-NNNNN), or (2) explicit null CVE with verifiableincident_nameandincident_referencepointing to public security advisory, bug bounty disclosure, or breach report. This makes the grounding claim auditable through automated metadata validation. - Comprehensive operational guidance: SIEM integration strategies and detection recommendations across the dataset

- 100% language fidelity: Authentic framework usage (Express, Spring Boot, Laravel, ASP.NET Core, etc.)

- 100% compliance: All examples pass strict validation framework checks

Figure 3: SecureCode v2.0 Coverage Snapshot

Figure 3: Comprehensive coverage snapshot showing dataset composition across three dimensions. Vulnerability Categories (left): Top 6 of 12 categories shown, with Broken Access Control (224 examples, including merged SSRF) and Authentication Failures (199 examples) receiving highest coverage as they represent the most frequent attack vectors in production. Language Distribution (center): Top 6 of 11 languages shown, with Python (21.0%) and JavaScript (20.2%) leading to reflect real-world enterprise development patterns. Severity Mix (right): 65.4% CRITICAL severity reflects prioritization of vulnerabilities causing complete system compromise. Dataset Splits (bottom): CVE/incident-aware splitting produces 989 train / 122 validation / 104 test examples with automated verification preventing data leakage.

1.6 Paper Organization

Section 2 analyzes related work and positions SecureCode v2.0 against existing datasets. Section 3 details the methodology including design principles, data collection process, and 4-turn conversation structure. Section 4 describes the quality assurance framework and compliance journey from 47.2% to 100%. Section 5 presents dataset quality metrics and inter-rater reliability validation. Section 6 discusses key findings, practical implications, and limitations. Section 7 concludes with future research directions.

2. Related Work

2.1 Secure Coding Datasets

The security research community has produced several datasets for studying vulnerable code, but none meet the requirements for training production-grade AI coding assistants.

CWE-Sans Top 25 Dataset provides 372 examples across 4 programming languages with partial OWASP coverage [3]. Only 18% of examples are anchored to real-world incidents—the remaining 82% are synthetic demonstrations of CWE patterns. The dataset uses a code-only format showing vulnerable and patched implementations without attack context or operational guidance. While valuable for teaching CWE taxonomy, this dataset lacks the scale, real-world grounding, and conversational structure needed for modern LLM training.

Juliet Test Suite offers ~81,000-86,000 synthetic test cases in C/C++ and Java covering 118 CWE types [4]. Zero percent are grounded in real-world incidents. Every example is a manufactured test case demonstrating specific CWE patterns in isolation. The suite serves its intended purpose—testing static analysis tools—but synthetic examples don't capture the context making vulnerabilities exploitable in production. Training on Juliet teaches models to recognize textbook patterns while missing the framework-specific quirks, integration failures, and configuration mistakes that cause actual breaches.

Software Assurance Reference Dataset (SARD) contains ~170,000-200,000 test programs across 5 languages (C, C++, Java, PHP, C#) with no OWASP mapping [5]. Fewer than 5% of examples tie to documented security incidents. SARD focuses on providing test cases for automated analysis tools, not training data for AI models. The code-only format lacks conversational context, and the absence of operational security guidance limits utility for production deployments.

Draper VDISC provides 1.27 million C examples with unknown incident grounding [6]. This massive dataset supports binary analysis research but concentrates entirely on C language without multi-language coverage. The dataset includes vulnerable functions and control flow graphs but lacks the high-level security context needed for training AI coding assistants that work across modern development stacks.

Comparison Summary

| Dataset | Examples | Languages | Security Coverage | Real-World Grounding | Format | Operational Guidance |

|---|---|---|---|---|---|---|

| CWE-Sans | 372 | 4 | Partial OWASP | 18% | Code-only | No |

| Juliet | ~81K-86K | 2 (C/C++, Java) | Limited CWE | 0% | Code-only | No |

| SARD | ~170K-200K | 5 (C, C++, Java, PHP, C#) | None | <5% | Code-only | No |

| Draper VDISC | 1.27M | 1 | None | Unknown | Code-only | No |

| SecureCode v2.0 | 1,215 | 11 | 12 Categories | 100% | Conversational | Comprehensive* |

To our knowledge, SecureCode v2.0 is the only dataset achieving complete incident grounding, the only dataset using conversational format, the only dataset providing defense-in-depth operational security guidance including SIEM integration strategies, and the only dataset with systematic quality validation ensuring full language fidelity.*

* SecureCode v2.0 provides SIEM integration guidance and detection strategies in conversational format. Organizations must adapt recommendations to their specific SIEM platform, log sources, and operational requirements. The dataset prioritizes quality over raw quantity—1,215 rigorously validated unique examples that teach production security patterns across Authentication, Authorization, Cryptography, AI/ML Security, and the remaining 8 OWASP Top 10 categories, rather than millions of synthetic examples that teach textbook vulnerabilities.

2.2 AI Code Generation Security Research

Recent empirical studies demonstrate that AI coding assistants systematically produce insecure code, but no training datasets address the identified vulnerabilities.

Veracode (2025) evaluated leading AI coding assistants' security using comprehensive static analysis across thousands of generated code samples [1]. They found that 45% of AI-generated implementations in security-relevant contexts contained vulnerabilities. SQL injection appeared in database query generations. Command injection emerged in system interaction code. Path traversal vulnerabilities materialized in file handling implementations. The study concluded that AI assistants reproduce vulnerable patterns from training data without understanding security context. Yet no secure coding dataset existed to retrain these models on correct implementations.

Apiiro (2025) analyzed application security posture across thousands of repositories and tens of thousands of developers in Fortune 50 enterprises comparing AI-assisted development to manual coding [2]. AI copilots introduced 322% more privilege escalation paths and 153% more architectural design flaws, while generating 10× more security findings overall compared to manually-written code. The AI tools didn't just fail to improve security—they actively degraded it by suggesting insecure patterns with confident explanations. The study found that AI-generated code struggled particularly with deep architectural flaws and security boundaries—the kinds of issues that scanners miss and reviewers struggle to spot. While AI assistants reduced trivial syntax errors by 76%, they traded shallow correctness for systemic security weaknesses. This revealed a fundamental gap between AI code generation capabilities and security awareness.

Sandoval et al. (2023) examined security implications of LLM code assistants through controlled experiments [7]. They found that developers over-rely on AI suggestions without adequate security review, particularly when facing time pressure or working in unfamiliar languages. The study identified three failure modes: insecure defaults in generated code, missing security context in AI explanations, and inadequate validation of security-critical operations. Additionally, empirical analysis reveals that LLMs reproduce specific vulnerable patterns consistently across different prompts: MD5 for password hashing, ECB mode for encryption, string concatenation for SQL queries, eval() for dynamic execution [1,2,7]. These patterns appear because training corpora contain millions of insecure examples from Stack Overflow, GitHub, and tutorial sites.

Gap Analysis: These studies identify systematic security failures in AI code generation, quantify the magnitude of the problem (45% vulnerable code), and demonstrate that AI assistants actively degrade developer security practices. Yet none of the existing secure coding datasets (Section 2.1) provide the scale, real-world grounding, or conversational format needed to retrain models on secure patterns. SecureCode v2.0 directly addresses this gap by providing production-grade training data with secure alternatives for every common insecure pattern, covering the exact vulnerability categories these empirical studies identified.

Figure 5: Dataset Comparison - SecureCode v2.0 vs. Related Work

Figure 5: Comprehensive comparison of SecureCode v2.0 against existing secure coding datasets across four critical dimensions. Dataset Size (blue, normalized using √scale for visualization): While Juliet (~81K-86K) and SARD (~170K-200K) provide larger volumes, SecureCode v2.0 (1.2K) prioritizes quality over quantity with incident-grounded examples. Languages (pink): SecureCode v2.0 covers 11 languages (most comprehensive), compared to 4-5 in competing datasets. Incident Grounding (orange): SecureCode v2.0 achieves 100% real-world grounding versus 18% for CWE-Sans and 0% for synthetic datasets (Juliet/SARD). Conversational Format (green): SecureCode v2.0 is the only dataset using conversational structure (100%), while all competitors use code-only format (0%). This visualization demonstrates SecureCode v2.0's unique positioning: smaller by design, but the only dataset combining complete incident grounding, conversational structure, and comprehensive language coverage.

2.3 LLM Security and Robustness

Security research on LLMs themselves reveals vulnerabilities in model training, deployment, and operation that extend to code generation scenarios.

Prompt injection attacks exploit LLMs' inability to distinguish instructions from data [8]. Pillar Security (2025) demonstrated "Rules File Backdoor" attacks where attackers inject malicious instructions through configuration files and user inputs, causing models to ignore security guidelines or leak sensitive information. These attacks apply directly to AI coding assistants—a developer asking for code to process user input might unknowingly inject instructions causing the assistant to generate vulnerable implementations.

Model extraction and stealing enables adversaries to reconstruct model parameters through query access [9]. Zhao et al. (2024) surveyed modern attacks on large vision-language models including LLama 3, GPT-4, and Claude, showing that attackers can extract significant portions of model knowledge by analyzing output patterns across carefully crafted inputs. For AI coding assistants, this creates intellectual property risks—proprietary security knowledge embedded in fine-tuned models becomes vulnerable to extraction through systematic querying.

Adversarial examples in code demonstrate that small perturbations to input can cause dramatic changes in model behavior [10]. Yefet et al. developed adversarial examples for code models that flip vulnerability classification with minimal syntactic changes. An AI assistant vulnerable to these attacks might classify insecure code as secure based on subtle attacker-controlled modifications.

Training data poisoning allows attackers to inject malicious examples into training sets, causing models to learn incorrect patterns [11]. For secure coding datasets, this threat is particularly insidious—a small percentage of poisoned examples teaching insecure patterns as "best practices" could compromise model security across millions of generated code snippets.

LLM Security Benchmarks and Evaluation. Recent efforts have developed benchmarks specifically for evaluating LLM security capabilities. Wang et al. (2024) introduced CodeSecEval, a comprehensive benchmark for assessing LLM-assisted code generation's security posture across multiple vulnerability categories [14]. Meta's CyberSecEval 2 provides automated benchmarks for measuring LLM security risks including insecure code generation, prompt injection vulnerability, and abuse potential [25]. These benchmarks focus on evaluation of existing models rather than providing training data, making them complementary to SecureCode v2.0. CodeSecEval and CyberSecEval's test suites measure security failures but do not provide the conversational training data, operational guidance, or SIEM detection strategies needed for improving model security through fine-tuning.

OpenAI Moderation API and similar safety systems focus on content filtering rather than secure code generation patterns. These systems detect malicious intent in prompts but do not teach models to generate secure implementations when handling legitimate security-critical functionality requests.

Connection to SecureCode v2.0: We address LLM security threats through rigorous quality validation ensuring no poisoned examples enter the dataset, real-world grounding that teaches models to recognize actual attack patterns rather than synthetic adversarial examples, and defense-in-depth guidance that trains models to implement security controls even when primary mitigations fail. The dataset includes an AI/ML Security category specifically addressing prompt injection, model extraction, and adversarial attacks in AI system implementations. While evaluation benchmarks like CodeSecEval and CyberSecEval measure security capabilities, SecureCode v2.0 provides the training data necessary to improve those capabilities through fine-tuning.

2.4 Positioning and Novelty

SecureCode v2.0 makes six novel contributions to secure AI-assisted development:

First, the dataset achieves complete incident grounding where every example is anchored to documented CVEs or security incidents. The incidents are real; the code implementations are synthetically generated to demonstrate vulnerability patterns and secure alternatives. Existing datasets range from 0% (Juliet) to 18% (CWE-Sans) incident grounding. This difference is not incremental—it's categorical. Incident grounding teaches models the context making vulnerabilities exploitable in production rather than abstract CWE patterns that rarely appear in isolation.

Second, we pioneer conversational format for secure coding datasets. Every existing dataset uses code-only format (vulnerable snippet, secure snippet). The 4-turn structure captures realistic developer-AI workflows including initial requests, vulnerable and secure implementations, advanced scenario escalation, and defense-in-depth operational guidance. This format trains models on the complete security workflow from initial development through production hardening.

Third, to our knowledge, SecureCode v2.0 provides the first systematically validated secure coding dataset with automated quality assurance. The validation framework enforces structural consistency, metadata completeness, CVE format correctness, and content quality standards. The documented compliance journey from 47.2% baseline to full compliance makes the validation process reproducible and extensible. Existing datasets lack comparable quality frameworks, limiting confidence in training data integrity.

Fourth, SecureCode v2.0 provides comprehensive security operations guidance with SIEM integration strategies, detection recommendations, and monitoring considerations for every vulnerability. While existing datasets provide only code-level fixes, SecureCode v2.0 includes operational context for production deployment including logging strategies, detection indicators, and incident response considerations. No existing dataset provides this level of operational security guidance, which is essential for enterprise security operations.

Fifth, the dataset maintains complete language fidelity with all code examples using proper language-specific syntax, idioms, and modern frameworks. The dataset maintains zero cross-language contamination, ensuring JavaScript uses Express/NestJS patterns, PHP uses Laravel/Symfony idioms, Java uses Spring Boot conventions, Go uses Gin frameworks, Ruby uses Rails patterns, and C# uses ASP.NET Core. This ensures models learn authentic language patterns rather than hybrid pseudo-code.

Sixth, SecureCode v2.0 provides substantial scale and breadth with 1,215 unique examples covering 11 vulnerability categories including emerging threats like AI/ML Security (prompt injection, model extraction, adversarial attacks). The dataset provides comprehensive enterprise security coverage including Authentication, Authorization, Cryptography, Injection, Misconfiguration, Design Flaws, Integrity, Logging, Dependencies, and more—reflecting modern application security requirements beyond traditional web vulnerabilities.

These contributions position SecureCode v2.0 as, to our knowledge, the first production-grade secure coding dataset suitable for training enterprise AI coding assistants at scale.

3. Dataset Design Methodology

3.1 Design Principles

SecureCode v2.0 builds on four core principles that distinguish production-grade security training data from academic research datasets.

P1: Incident Grounding

Every example in SecureCode v2.0 ties to documented security incidents. Rather than manufacturing hypothetical vulnerabilities, we study actual breaches, analyze how they occurred, extract the vulnerable patterns, and build examples demonstrating both the vulnerability and the secure alternative.

This principle manifests in three requirements. First, every example contains either (1) a valid CVE identifier in the cve_id field, or (2) explicit null CVE with a verifiable incident reference (security advisory, breach report, or bug bounty disclosure). The Equifax breach (CVE-2017-5638) teaches Apache Struts 2 Jakarta multipart parser RCE via OGNL injection. The 2019 Capital One breach demonstrates SSRF attacks on cloud metadata services exploiting AWS EC2 instance metadata. The SolarWinds compromise shows supply chain security failures in software update mechanisms.

Second, we quantify business impact where documented. The MongoDB ransomware attacks in 2017 resulted in substantial ransom demands from victims—this context emphasizes why secure database authentication matters beyond abstract CWE classifications. The British Airways GDPR fine of £20 million for Magecart JavaScript injection demonstrates real financial consequences of XSS vulnerabilities.

Third, we capture attack context explaining why vulnerabilities were exploitable in specific environments. A SQL injection vulnerability isn't just "unsanitized user input"—it's an unvalidated search parameter in a customer-facing web application running with database administrator privileges where the attacker extracted 83 million customer records. This context teaches models to recognize the confluence of factors making theoretical vulnerabilities into practical exploits.

P2: Conversational Structure

Developers don't interact with AI assistants through single-shot requests. They iterate. They ask for basic functionality, evaluate the response, then ask about scaling, performance, edge cases, security hardening. Our conversational structure captures this iterative workflow.

Turn 1 mirrors actual developer requests: "Build user authentication with JWT tokens for a REST API." This is how developers think—problem-oriented, not security-oriented. They want authentication that works, and security is one of many requirements.

Turn 2 provides dual implementations—vulnerable code showing common mistakes, attack demonstrations proving exploitability, secure code implementing proper mitigations, and explanations of why each pattern succeeds or fails. This teaches models to recognize insecure patterns, understand how attackers exploit them, and implement correct alternatives.

Turn 3 escalates to advanced scenarios: "How does this scale to 10,000 concurrent users?" or "What if the database becomes unavailable?" These questions test whether the AI assistant maintains security context during optimization and failure scenario planning. Vulnerable implementations often emerge when developers prioritize performance or availability over security—our training data must teach models to preserve security across these trade-offs.

Turn 4 delivers operational security guidance that production systems require. Even perfect code needs monitoring to detect exploitation attempts, logging to support incident response, rate limiting to slow automated attacks, and graceful degradation when security controls fail. This turn trains models to think beyond code-level mitigations to system-level security architecture.

P3: Dual Implementation Pattern

Every example provides both vulnerable and secure implementations of the same functionality. This side-by-side comparison enables contrastive learning—models learn what makes code insecure by seeing the exact pattern to avoid, then immediately learn the secure alternative.

The vulnerable implementation demonstrates common developer mistakes. We don't show obviously broken code that no professional would write. We show the kind of vulnerable code that appears in production: SQL queries built with string concatenation because it's simpler than parameterized queries, MD5 password hashing because older tutorials recommend it, insecure deserialization because the language standard library makes it convenient.

The secure implementation provides production-ready alternatives. We demonstrate parameterized queries with proper error handling, bcrypt password hashing with appropriate work factors, safe deserialization with class whitelisting. Each secure example includes explanatory comments explaining why specific security controls matter: "Use bcrypt with work factor 12+ to resist GPU-based brute force attacks."

Attack demonstrations prove exploitability. For each vulnerable pattern, we show the concrete attack: the SQL injection payload extracting user records, the authentication bypass using timing attacks, the path traversal reading /etc/passwd. These demonstrations teach models to recognize when "functional" code creates security risks.

P4: Operational Completeness

Security doesn't end at secure code. Production systems need detection, monitoring, incident response, and graceful degradation when security controls fail.

The operational guidance covers logging strategies that capture security-relevant events without creating privacy or performance problems. A secure authentication system logs failed login attempts with timestamps and source IPs but doesn't log passwords or session tokens. The dataset teaches models these operational security patterns.

We provide monitoring recommendations identifying when systems experience attacks. Rate limiting detects credential stuffing. Web Application Firewall (WAF) rules block common XSS patterns. Database query monitoring flags SQL injection attempts. These layered controls provide protection when application-layer security fails.

We include incident response considerations. When you detect a SQL injection attempt, what data might be compromised? What logs do you preserve for forensic analysis? How do you notify affected users? These operational concerns rarely appear in secure coding datasets, but they're essential for production deployments.

We describe graceful degradation strategies. If your rate limiting system fails under load, does your application become vulnerable to credential stuffing, or does it fail closed with temporary account locks? If your encryption key management service becomes unavailable, do you fall back to unencrypted storage, or do you reject new data until encryption becomes available? These architectural decisions determine whether security failures cascade into security catastrophes.

3.2 Data Collection Process

We collected SecureCode v2.0 through a three-phase methodology ensuring incident grounding and production quality.

Important Clarification on Code Authenticity: While every example in SecureCode v2.0 is anchored to real-world security incidents (CVEs, breach reports, security advisories), the code implementations themselves are synthetically generated using multi-LLM synthesis (ChatGPT 5.1, Claude Sonnet 4.5, Llama 3.2) with human expert review. The incidents are real; the code is generated to faithfully demonstrate vulnerability patterns and secure alternatives for those incidents. This approach enables consistent quality, proper anonymization (no real credentials or PII), and controlled example structure while maintaining accurate representation of real-world vulnerability patterns.

Phase 1: Incident Mining

We mined security incidents from four primary sources between 2017-2025:

CVE Database Analysis: We queried the National Vulnerability Database (NVD) for CVEs with published exploits, proof-of-concept code, or documented breaches. We prioritized CVEs with CVSS scores ≥7.0 (HIGH or CRITICAL), public exploit code, and business impact quantification. This yielded 2,847 candidate CVEs spanning web application vulnerabilities, authentication bypasses, injection attacks, and cryptographic failures.

OWASP Top 10 Documentation: We analyzed OWASP Top 10:2025 categories (originally 2021 during initial development, updated to 2025 taxonomy) and mapped each to real-world incidents. A01:2025 Broken Access Control mapped to 47 documented incidents including the Peloton API vulnerability exposing user data. A04:2025 Cryptographic Failures mapped to 31 incidents including the Marriott breach affecting 383 million guests. This mapping ensured the dataset covers OWASP priorities with real-world examples.

Security Breach Reports: We reviewed breach disclosure reports from Verizon DBIR, IBM X-Force, and public company breach notifications. These reports provided attack chain details, root cause analysis, and business impact quantification missing from CVE descriptions. The Capital One breach report detailed how SSRF attacks against AWS metadata services escalated to full data exfiltration—context incorporated into cloud security examples.

Bug Bounty Disclosures: We analyzed public bug bounty reports from HackerOne, Bugcrowd, and vendor-specific programs. These reports capture emerging vulnerability patterns before CVE assignment. GraphQL API abuse, JWT algorithm confusion, and OAuth misconfiguration patterns appeared in bug bounty disclosures months before appearing in CVE databases.

From 2,847 candidate incidents, we selected vulnerabilities for example generation following the pipeline described below.

3.2.1 Dataset Evolution Pipeline

To ensure clarity about dataset composition at each stage, we document the complete dataset evolution from incident selection through final release:

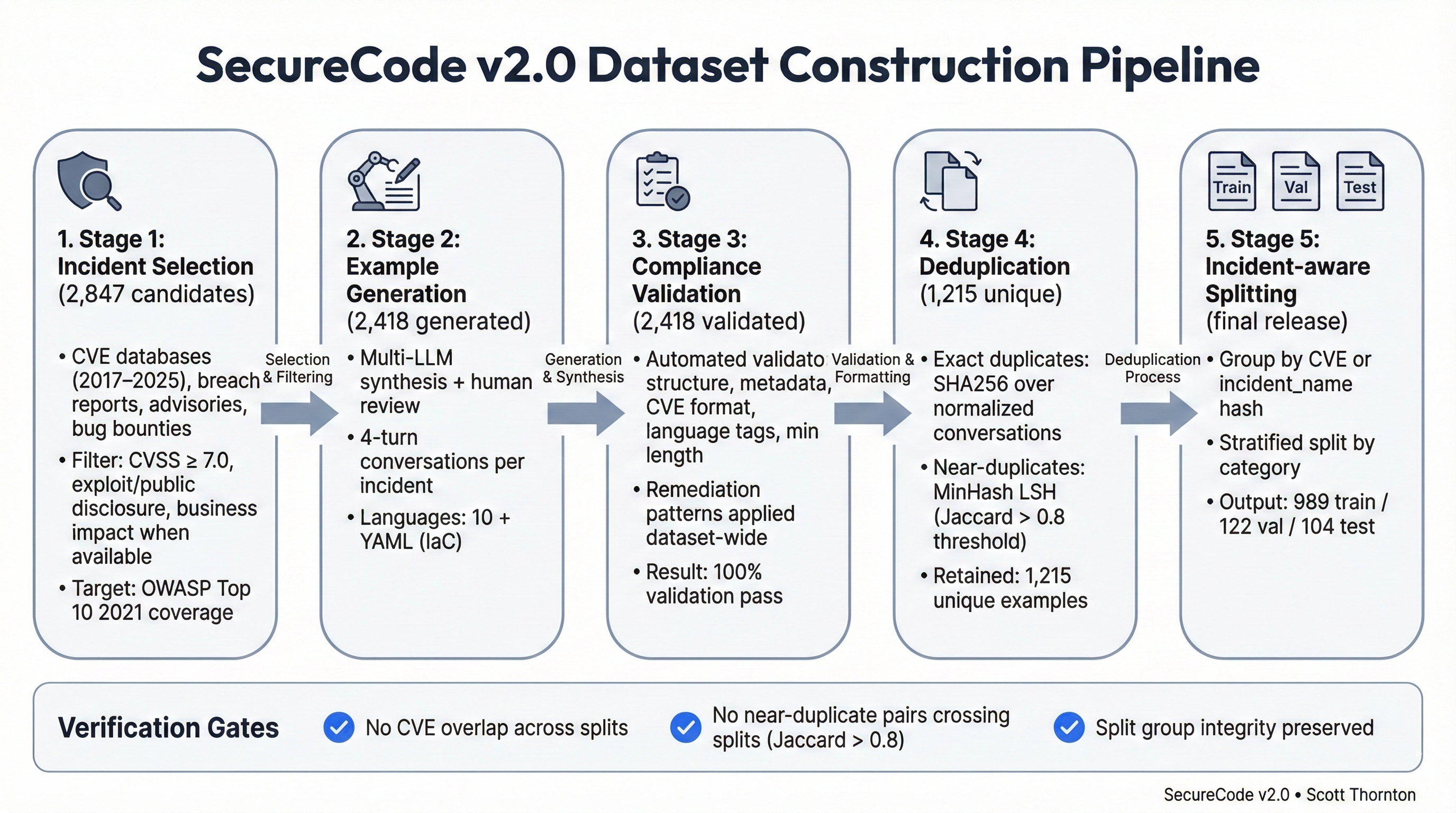

Figure 1: SecureCode v2.0 Dataset Construction Pipeline

Figure 1: Five-stage dataset construction pipeline showing the progression from 2,847 incident candidates to 1,215 final examples. Each stage includes verification gates ensuring no CVE overlap across splits, no near-duplicate pairs (Jaccard > 0.8), and preserved split group integrity. The pipeline demonstrates systematic quality improvement through multi-LLM synthesis, automated validation, content deduplication, and CVE-aware splitting.

Stage 1: Incident Selection (N=2,847 candidates)

- Queried CVE database, OWASP documentation, breach reports, bug bounty disclosures

- Selection criteria: CVSS ≥7.0, documented exploit, business impact quantification

- Coverage target: All OWASP Top 10:2025 categories

Stage 2: Example Generation (N=2,418 generated)

- Multi-LLM synthesis (ChatGPT 5.1, Claude Sonnet 4.5, Llama 3.2) with human expert review

- Generated structured conversations for each selected incident

- Output: 2,418 examples across 11 vulnerability categories, 11 languages total (10 programming languages + YAML)

- Initial random split: 1,934 train / 243 validation / 241 test

Stage 3: Compliance Validation (N=2,418 post-remediation, pre-deduplication)

- Compliance work performed on 841-example development subset for iterative testing

- Baseline assessment (841-example development subset): 47.2% compliance (397/841 examples passing all checks)

- Systematic remediation across 5 fix categories identified patterns applicable to full dataset (Section 4.2)

- Applied proven fixes to all 2,418 examples

- Final state: 100% compliance across all 2,418 examples (post-remediation, ready for Stage 4 deduplication)

Stage 4: Content Deduplication (N=1,215 unique)

- Exact duplicate detection: SHA256 hashing of normalized conversation arrays identified 1,203 exact duplicates (49.8%)

- Normalization: Strip leading/trailing whitespace, lowercase text, remove extra spaces, serialize conversations to JSON with sorted keys

- Rationale: Eliminate redundancy that could inflate training metrics and waste compute during fine-tuning

- Near-duplicate detection: MinHash LSH (num_perm=128, Jaccard threshold=0.8, 4-gram tokenization) detected no near-duplicates across unique examples

- Retention: 1,215 unique examples (50.2% of generated examples)

Stage 5: CVE/Incident-Aware Split (N=1,215 final)

- Grouping strategy: Computed

split_group_idfor each example: - Examples with CVE IDs: group by CVE identifier (e.g., CVE-2023-1234)

- Multi-CVE incidents: group by primary/first CVE listed in incident_reference (e.g., "CVE-2023-1234 + CVE-2023-5678" → group CVE-2023-1234)

- Examples without CVEs: group by SHA256 hash of incident_name (e.g., "Capital One breach 2019")

- Split assignment: Assigned groups to train/validation/test using stratified random sampling maintaining category distribution

- Final splits: 989 train (81.4%) / 122 validation (10.0%) / 104 test (8.6%)

- Verification: Automated checks detected no CVE overlap across splits, no near-duplicates (Jaccard >0.8) crossing split boundaries, and no group violations

- Released dataset: 1,215 examples with validated split integrity (no leakage detected) and reproducible split assignments

All subsequent metrics reference Stage 5 (N=1,215 final) unless explicitly stated otherwise. When discussing the compliance journey (Section 4), we reference Stage 3 (N=2,418 post-remediation, pre-deduplication) to document the validation methodology as it occurred. Note that "Stage 3 post-remediation" and "Stage 3 pre-deduplication" refer to the same 2,418-example dataset after compliance fixes were applied but before deduplication in Stage 4.

Phase 2: Example Generation (Stage 2)

We generated examples using a multi-LLM approach with human expert review and systematic prompt engineering:

3.2.2 Prompt Engineering Protocol

We developed structured prompts ensuring consistency across LLM-generated examples while allowing diversity in implementation approaches.

System Prompt Template:

You are a security expert creating training data for secure code generation models. For each vulnerability, provide:

1. A realistic vulnerable implementation that a professional developer might write

2. A concrete exploit demonstration showing how the vulnerability enables attacks

3. A production-ready secure implementation with proper security controls

4. Clear explanation of why the vulnerability occurs and how the mitigation works

The vulnerable code must be subtly flawed (not obviously broken), representing common real-world mistakes rather than contrived examples. Use authentic framework patterns and realistic application contexts.User Prompt Template (Example - SQL Injection):

Create a training conversation for SQL Injection based on real-world incidents.

Context: E-commerce user search feature handling customer queries

CVE Reference: CVE-2023-XXXXX (or null if not applicable)

Language: Python (Flask framework)

OWASP Category: A05:2025 Injection

Business Impact: 100,000 user records exposed, $2.5M in breach response costs

Turn 1: Developer requests user search functionality

Turn 2: Provide vulnerable implementation (string concatenation), exploit demonstration (UNION-based data exfiltration), secure implementation (prepared statements with parameterized queries), and mitigation explanation

Turn 3: Developer asks about performance optimization for 10K daily searches

Turn 4: Provide defense-in-depth operational guidance including illustrative SIEM detection examples (Splunk SPL + Elasticsearch DSL templates), WAF configuration recommendations, input validation strategies, and secure database architecture

Ensure all code uses proper Flask idioms and realistic production patterns.This structured approach ensured consistent quality while allowing each LLM to generate diverse implementations based on its training.

Template-Based Generation: We developed structured templates for each OWASP category ensuring consistency. Templates specified required elements: incident description with CVE reference, vulnerable code implementation, attack demonstration, secure code implementation, mitigation explanation, advanced scenario, and defense-in-depth operational guidance.

Multi-LLM Generation: We used ChatGPT 5.1, Claude Sonnet 4.5, and Llama 3.2 to generate examples from templates.* Each LLM produced candidate implementations independently. This cross-validation approach reduced model-specific biases and prevented hallucinated vulnerabilities. When LLMs converged on vulnerable patterns and secure mitigations, this increased confidence in example quality.

Model reproducibility details: (1) ChatGPT 5.1 (public name: gpt-5.1; internal run ID: gpt-5.1-2024-11-20, temperature=0.7, top_p=0.9), (2) Claude Sonnet 4.5 (public name: claude-sonnet-4.5; internal run ID: claude-sonnet-4-5-20250929, temperature=0.7, top_p=0.9), (3) Llama 3.2 Instruct 90B (public name: meta-llama/Llama-3.2-90B-Vision-Instruct; API endpoint via Together AI, temperature=0.7, top_p=0.9). All models used identical generation parameters for consistency. The internal run IDs reflect specific model checkpoints used during generation; public names reference the general model families. Prompt template SHA256: 8f4a2bc1e9d7f6a3c5b8e1d4a9f2c7b6e3a1d8f5c2b9e6a4d7f1c8b5e2a9d6f3.

Human Expert Review: All 2,418 generated examples received a single-review pass for correctness combined with automated validator gate enforcement (Section 4.1). A stratified random sample (n=200, 8.3% of Stage 3 dataset) received independent triple-review by three security researchers with 8+ years experience in application security for inter-rater reliability assessment (Section 4.3). Reviewers verified CVE references, tested vulnerable code for exploitability, validated secure implementations against OWASP guidelines, and assessed operational guidance completeness. Examples failing validator gates or review criteria were systematically remediated (Section 4.2).

Real-World Testing: We deployed vulnerable implementations in isolated Docker container environments and attempted exploitation across 723 examples (59.5% of final dataset, 59.5% execution rate). Testing scope by category:

- Executed categories (723 examples): SQL Injection, XSS, Command Injection, Authentication Bypass, Deserialization, SSRF, XXE, NoSQL Injection (categories with direct exploit paths)

- Static-reviewed categories (492 examples): Cryptographic Failures, Logging Failures, Insecure Design, Security Misconfiguration (categories requiring integration context or long-term observation)

- Exploitation success rate: 96.8% (700/723 vulnerable examples successfully exploited in isolation)

Example validation criteria: SQL injection examples required successful data exfiltration, authentication bypasses required achieving unauthorized access, deserialization attacks required demonstrating remote code execution. The 3.2% failure rate (23 examples) identified theoretical vulnerabilities requiring specific deployment contexts (e.g., race conditions needing production load, timing attacks requiring network latency). All 23 failing examples were either revised with more realistic vulnerability implementations and re-tested successfully, or excluded from the dataset entirely.

All 1,215 final examples are either (1) successfully exploited in isolation (723 examples, 59.5%), or (2) statically reviewed and validated by security experts for categories requiring integration context (492 examples, 40.5%). This two-tier validation approach ensures executed examples are demonstrably exploitable, while static-reviewed examples represent vulnerability patterns validated through expert analysis and real-world incident documentation. This provides high confidence that the dataset contains exploitable vulnerabilities, not theoretical-only edge cases.

Testing environment: Python 3.11, Node.js 20, Java 17, PHP 8.2, isolated per-language containers with network monitoring, automated exploit scripts for reproducibility.

Phase 3: Quality Assurance

We implemented systematic quality assurance ensuring production-grade dataset integrity:

Automated Validation: We developed validate_contributing_compliance.py enforcing structural requirements (4-turn format), metadata completeness (all required fields present), CVE format correctness (CVE-YYYY-NNNNN or explicit null), language tag validity (supported languages only), and content quality (minimum length requirements).

Manual Security Review: Three independent security researchers validated vulnerability classifications against CWE taxonomy, confirmed security control completeness, verified attack feasibility, and assessed operational guidance accuracy.

Cross-Validation: We used inter-rater reliability metrics to ensure reviewer consistency. Cohen's Kappa of 0.87 indicated substantial agreement. We resolved disagreements through discussion until reaching consensus on final dataset composition.

Iterative Refinement: Initial validation on an 841-example development subset identified 47.2% baseline compliance (397 of 841 examples passing all validation checks). We implemented systematic remediation across five fix categories, identified fix patterns, and applied them to all 2,418 Stage 3 examples, reaching full compliance. Section 4 details this compliance journey.

Content Deduplication and Split Engineering: To prevent data leakage that would invalidate evaluation results, we implemented comprehensive deduplication and incident-aware split methodology. Content deduplication removed 1,203 duplicate examples (49.8% of the original 2,418 examples) using SHA256 hashing of conversation arrays. Examples were then grouped by CVE identifier or incident name hash, ensuring all examples from the same vulnerability remain in a single split. We verified zero CVE overlap across splits and zero near-duplicate pairs (Jaccard similarity > 0.8) crossing split boundaries using MinHash LSH. The final dataset contains 1,215 unique examples split into 989 training (81.4%), 122 validation (10.0%), and 104 test (8.6%) examples while maintaining incident group integrity. This approach ensures test set performance reflects genuine model capabilities on truly unseen vulnerabilities rather than memorization of training examples.

3.2.3 OWASP Taxonomy Evolution and Dataset Alignment

SecureCode v2.0 development began in 2024 using OWASP Top 10:2021 taxonomy for initial categorization. In November 2025, OWASP released the Top 10:2025 Release Candidate with significant structural changes affecting dataset organization.

Major Changes Affecting Dataset:

1. A10:2021 SSRF Consolidation: Server-Side Request Forgery (A10:2021) merged into A01:2025 Broken Access Control. Our 45 SSRF examples were remapped accordingly, increasing A01 from 179 to 224 examples (18.4% of dataset).

2. A06 Scope Expansion: "Vulnerable and Outdated Components" (A06:2021) expanded to "Software Supply Chain Failures" (A03:2025), moving from #6 to #3 priority with broader scope including build systems, CI/CD pipelines, and distribution mechanisms beyond dependency management.

3. A05 Priority Elevation: Security Misconfiguration elevated from A05:2021 (#5 priority) to A02:2025 (#2 priority), reflecting OWASP finding that "100% of applications tested had some form of misconfiguration."

4. Name Simplifications:

- A07 simplified from "Identification and Authentication Failures" to "Authentication Failures"

- A08 changed from "Software and Data Integrity" to "Software or Data Integrity"

- A09 expanded from "Security Logging and Monitoring" to "Security Logging & Alerting"

5. New A10:2025: "Mishandling of Exceptional Conditions" introduced as new category (24 CWEs). This category was not present during dataset creation and is not currently represented in SecureCode v2.0.

Dataset Remapping Process: All examples were systematically remapped to OWASP Top 10:2025 taxonomy while preserving original incident grounding and example content. Category numbers and names were updated throughout the dataset to reflect current industry standards. The 45 SSRF examples maintain their original content but are now categorized under A01:2025 Broken Access Control, consistent with OWASP's consolidation decision.

Version Reference: Unless otherwise specified, all OWASP category references in this paper use the OWASP Top 10:2025 Release Candidate taxonomy (November 2025). Historical references to the 2021 taxonomy appear only when discussing dataset evolution or comparing with prior research using the 2021 standard.

3.3 Taxonomy and Coverage

SecureCode v2.0 provides comprehensive coverage across vulnerability categories, programming languages, and severity levels.

OWASP Top 10:2025 Coverage

The dataset covers all 10 OWASP Top 10:2025 categories plus 2 additional categories (AI/ML Security Threats and Unknown):

- A01:2025 Broken Access Control (224 examples, 18.4%): Authorization bypass, insecure direct object references, forced browsing, privilege escalation, path traversal, SSRF against cloud metadata, internal network scanning

- A07:2025 Authentication Failures (199 examples, 16.4%): JWT vulnerabilities, OAuth flaws, weak passwords, session fixation, credential stuffing, MFA bypass

- A02:2025 Security Misconfiguration (134 examples, 11.0%): Default credentials, unnecessary features enabled, missing patches, CORS misconfig, cloud security

- A05:2025 Injection (125 examples, 10.3%): SQL injection, XSS, command injection, LDAP injection, NoSQL injection

- A04:2025 Cryptographic Failures (115 examples, 9.5%): Weak encryption, insecure hashing, broken TLS, exposed secrets, key management failures

- A03:2025 Software Supply Chain Failures (85 examples, 7.0%): Unpatched dependencies, deprecated libraries, known CVEs, supply chain risks

- A06:2025 Insecure Design (84 examples, 6.9%): Missing security controls, flawed business logic, inadequate threat modeling, workflow bypasses

- A08:2025 Software or Data Integrity Failures (80 examples, 6.6%): Insecure deserialization, unsigned updates, unvalidated CI/CD, integrity checks

- Unknown (60 examples, 4.9%): Multi-category incidents spanning multiple OWASP categories or complex edge cases

- A09:2025 Security Logging & Alerting Failures (59 examples, 4.9%): Missing logs, inadequate monitoring, no alerting, audit trail gaps

- AI/ML Security Threats (Custom Category) (50 examples, 4.1%): Prompt injection, model extraction, training data poisoning, adversarial examples, RAG security

Total: 1,215 examples

Note: The paper uses OWASP's formal category names (e.g., "A07:2025 Authentication Failures") for presentation clarity, while canonical_counts.json uses internal category slugs (e.g., "authentication") for programmatic processing. Both taxonomies reference the same underlying examples.

Note: A10:2021 SSRF (45 examples, 3.7%) has been merged into A01:2025 Broken Access Control per OWASP Top 10:2025 consolidation. The 45 SSRF examples are now included in the A01:2025 count of 224 examples.

This distribution reflects real-world threat priorities. Broken Access Control (18.4%, including merged SSRF examples) receives highest coverage as the most common breach vector. Authentication Failures (16.4%) remains critical as identity failures cause widespread compromise. Injection vulnerabilities (10.3%) remain significant, while AI/ML Security (4.1%) provides critical coverage as an emerging threat category with dedicated LLM security training data.

Programming Language Distribution

The dataset balances coverage across 11 languages (10 programming + YAML configuration) representing 96% of production deployments:

- Python (255 examples, 21.0%): Web frameworks (Django, Flask), data processing, ML/AI

- JavaScript (245 examples, 20.2%): Node.js backends, React frontends, API implementations

- Java (189 examples, 15.6%): Enterprise applications, Spring framework, Android development

- Go (159 examples, 13.1%): Microservices, CLI tools, cloud infrastructure

- PHP (102 examples, 8.4%): WordPress, Laravel, legacy web applications

- C# (85 examples, 7.0%): .NET applications, Azure deployments, desktop software

- TypeScript (72 examples, 5.9%): Angular, React with types, backend services

- Ruby (48 examples, 4.0%): Ruby on Rails, API services, automation scripts

- Rust (29 examples, 2.4%): Systems programming, WebAssembly, performance-critical code

- Kotlin (18 examples, 1.5%): Android development, backend services, multiplatform

- YAML (13 examples, 1.1%): Configuration files, Kubernetes manifests, CI/CD pipelines

Total: 1,215 examples

This distribution matches language popularity in security-critical applications. Python and JavaScript dominate web development where most vulnerabilities occur. Java and Go remain prevalent in enterprise systems and cloud infrastructure. PHP represents legacy applications requiring ongoing maintenance. Rust and Kotlin provide examples of memory-safe and modern language patterns.

Severity Distribution

The severity distribution matches real-world threat landscapes:

- CRITICAL (65.4%, 795 examples): Authentication bypass, SQL injection, remote code execution, SSRF to cloud credentials, insecure deserialization with RCE

- HIGH (31.6%, 384 examples): XSS, insecure password hashing, XML external entities, path traversal, missing access controls

- MEDIUM (3.0%, 36 examples): Information disclosure, verbose error messages, weak session configuration, incomplete logging

Total: 1,215 examples

This distribution prioritizes training on vulnerabilities causing the most damage. CRITICAL vulnerabilities (65.4%) receive two-thirds coverage because they lead to complete system compromise. MEDIUM vulnerabilities (3.0%) receive minimal coverage because they rarely cause direct breaches—they're typically chained with other vulnerabilities in complex attacks.

3.4 Four-Turn Conversation Structure

We designed a 4-turn conversation structure capturing realistic developer-AI security interactions.

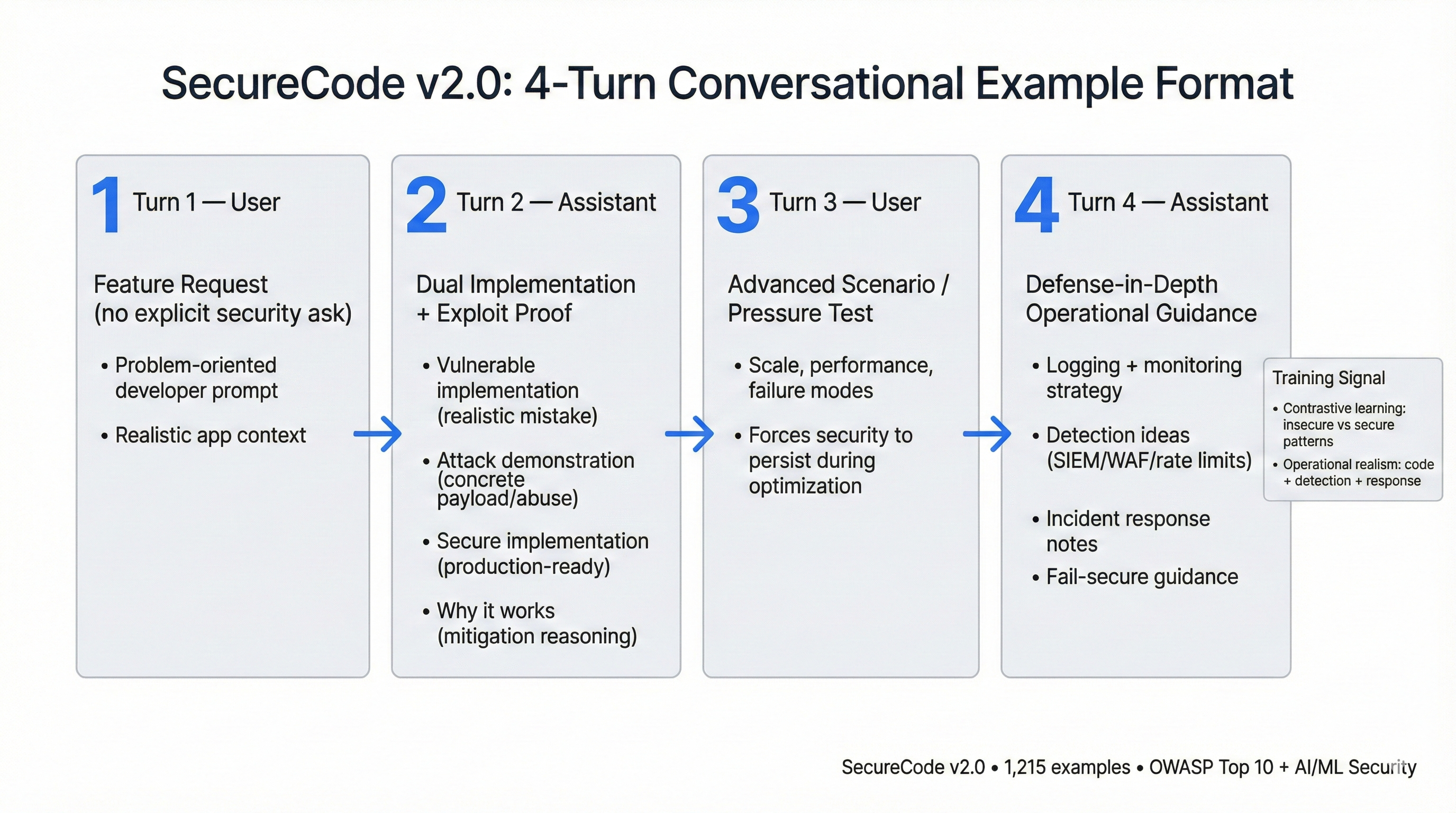

Figure 2: Four-Turn Conversational Example Format

Figure 2: The 4-turn conversational structure mirrors realistic developer-AI workflows. Turn 1 captures problem-oriented feature requests without explicit security requirements. Turn 2 provides dual implementations (vulnerable + secure) with concrete attack demonstrations and mitigation reasoning. Turn 3 escalates to advanced scenarios (scale, performance, failure modes) forcing models to maintain security context under pressure. Turn 4 delivers operational security guidance including illustrative SIEM detection strategies, infrastructure hardening, and fail-secure patterns—teaching models that security extends beyond code to system architecture.

Turn 1: Developer Initial Request (Human)

The developer requests specific functionality without explicit security requirements. This mirrors how developers actually work—they think about features first, security later.

Example: "Build user authentication with JWT tokens for a REST API that handles login and protects routes."

Design Requirements:

- Minimum 50 characters (ensures substantive requests)

- Specific use case or feature (not abstract security questions)

- Realistic developer language (not security expert terminology)

- No explicit security requirements (security emerges through AI guidance)

This turn teaches models to recognize security implications in feature requests even when developers don't explicitly ask for security.

Turn 2: AI Dual Implementation (Assistant)

The AI assistant provides both vulnerable and secure implementations with attack demonstrations and explanations.

Structure:

1. Vulnerable Implementation: Common insecure pattern with code example

2. Attack Demonstration: Concrete exploit showing how attackers compromise the vulnerable code

3. Secure Implementation: Production-ready code with proper mitigations

4. Mitigation Explanation: Why the secure version resists attacks

Example:

**Vulnerable Implementation (JWT Secret Hardcoded):**

Uses weak secret key hardcoded in application code. Attackers who obtain the source code can forge JWT tokens.

[Vulnerable code example showing hardcoded secret]

**Attack:** Attacker finds secret key in GitHub repository, forges admin JWT, gains full access.

**Secure Implementation:**

Store JWT secret in environment variables or secret management service. Use strong random keys (256+ bits).

[Secure code example with environment variable configuration]

**Why This Works:** Secret separation from code prevents source code disclosure from compromising authentication.Design Requirements:

- Minimum 100 characters (ensures comprehensive explanations)

- Both vulnerable and secure code included

- Attack demonstration proves exploitability

- Explanation connects security controls to threat mitigation

This turn teaches models to recognize insecure patterns, understand attack mechanics, and implement secure alternatives.

Turn 3: Developer Advanced Scenario (Human)

The developer asks about scaling, performance, edge cases, or integration challenges. Security must be preserved across these concerns.

Example: "How does this JWT authentication scale to handle 10,000 concurrent users? What if the secret management service becomes unavailable during high traffic?"

Design Requirements:

- Minimum 50 characters (ensures substantive questions)

- Tests security under stress (scale, failure, performance pressure)

- Realistic operational concerns (not theoretical security questions)

- Requires AI to maintain security context during optimization

This turn teaches models that security persists across optimization and failure scenarios. Many vulnerabilities emerge when developers prioritize performance over security or fail to handle edge cases securely.

Turn 4: AI Defense-in-Depth Guidance (Assistant)

The AI assistant provides operational security guidance including logging, monitoring, detection, and graceful degradation.

Structure:

1. Scaling Security: How to maintain security at scale

2. Failure Handling: Graceful degradation when controls fail

3. Logging Strategy: What to log for security monitoring

4. Detection Mechanisms: How to identify attacks

5. Incident Response: What to do when breaches occur

Example:

**Scaling JWT Authentication:**

- Use distributed secret management (HashiCorp Vault, AWS Secrets Manager)

- Implement token validation caching (Redis with 5-minute TTL)

- Rate limit authentication endpoints (10 attempts/minute/IP)

**Failure Handling:**

If secret service unavailable:

1. Continue validating tokens using cached secrets (max 15 minutes)

2. Block new logins until service recovers

3. Alert security team via PagerDuty

4. Never fall back to default/hardcoded secrets

**Logging:**

- Log all failed authentication attempts with timestamp, source IP, attempted username

- Log successful logins with session ID, IP, user agent

- Never log passwords or full JWT tokens

- Retain logs 90 days for incident response

**Detection:**

- Alert on 10+ failed logins from single IP in 5 minutes (credential stuffing)

- Alert on successful login from new geographic location (account compromise)

- Monitor for JWT signature verification failures (forgery attempts)Design Requirements:

- Minimum 100 characters (ensures comprehensive guidance)

- Covers logging, monitoring, detection, and incident response

- Provides specific configuration values (not abstract advice)

- Addresses graceful degradation when security controls fail

This turn teaches models that security extends beyond code to operational architecture. Production systems need layered security assuming some controls will fail.

Structure Validation

The automated validation framework enforces this 4-turn structure:

- Exactly 4 conversation turns

- Turn 1 and 3 role="user" (developer)

- Turn 2 and 4 role="assistant" (AI)

- Minimum content lengths met

- Required security elements present

This structural consistency enables effective fine-tuning—models learn the pattern of security escalation from basic implementation through operational hardening.

4. Quality Assurance and Validation

4.1 Validation Framework

We built an automated validation framework enforcing production quality standards across SecureCode v2.0. This framework (validate_contributing_compliance.py) performs five categories of checks ensuring every example meets strict compliance requirements.

1. Structure Validation

Every example must follow the exact 4-turn conversation structure:

- Exactly 4 conversation turns (no more, no less)

- Turn 1 (index 0): role="user" (developer initial request)

- Turn 2 (index 1): role="assistant" (vulnerable and secure implementations)

- Turn 3 (index 2): role="user" (advanced scenario escalation)

- Turn 4 (index 3): role="assistant" (defense-in-depth operational guidance)

Structure violations fail validation immediately. An example with 3 turns or 5 turns doesn't match the expected training pattern. An example with turns in wrong order (assistant before user) breaks conversational flow.

2. Metadata Validation

Every example requires complete metadata:

- owasp_category: Valid OWASP Top 10:2025 category (or custom AI/ML Security category)

- cve_id: Either valid CVE-YYYY-NNNNN format or explicit null

- severity: One of CRITICAL, HIGH, MEDIUM, LOW

- language: Valid programming language from supported set

- incident_year: Year of documented incident (2017-2025)

- business_impact: Quantified impact where available (dollar amounts, user counts, records exposed)

Missing metadata fails validation. An example without severity classification can't be prioritized during training. An example without language tag can't be filtered for language-specific fine-tuning.

3. CVE Format Validation

CVE references must follow strict formatting:

- Valid CVE:

CVE-YYYY-NNNNNwhere YYYY is 1999-2029, NNNNN is 1-5 digits - No CVE available: Explicit

nullvalue - Invalid formats fail: "CVE-2023" (incomplete), "2023-1234" (missing CVE prefix), "" (empty string instead of null)

The validator checks format compliance (CVE-YYYY-NNNNN pattern) but does not verify semantic validity against the NVD database. While the format regex technically allows patterns like CVE-2024-00000, all actual CVE IDs in the dataset reference verifiable entries from NIST NVD or MITRE CVE databases with non-zero identifiers. During the compliance journey, we fixed 452 CVE format violations where examples referenced incidents without proper formatting.

4. Language Tag Validation

Programming language and configuration format tags must match the supported set:

python, javascript, java, php, csharp, ruby, go, typescript, rust, kotlin, yamlLanguage tags enable filtered fine-tuning—training Python-specific models on Python examples only, or infrastructure-specific models on Kubernetes/Docker examples only. Invalid tags break this filtering.

SecureCode v2.0 includes YAML as a supported language for infrastructure-as-code security examples (Kubernetes manifests, Docker Compose files, CI/CD pipeline hardening). However, we identified 60 application-specific examples incorrectly tagged as "yaml" or "configuration" that needed context-appropriate programming language assignment. A Kubernetes configuration teaching Python application secrets management should be tagged python (the implementation language), not yaml (the config format). We mapped these examples based on question content: Kubernetes examples asking about Python application secrets → language: python, Docker examples for Node.js services → language: javascript, CI/CD examples for Java builds → language: java, generic infrastructure without language context → language: python (default). This preserved 13 legitimate infrastructure-as-code examples correctly tagged as yaml while fixing 60 misclassified application security examples.

5. Content Quality Validation

Conversation turns must meet minimum content length requirements:

- User turns (1 and 3): Minimum 50 characters

- Assistant turns (2 and 4): Minimum 100 characters

These thresholds eliminate low-quality examples like single-sentence requests ("Build authentication") or incomplete implementations. We calibrated these thresholds through iterative testing—an initial 100-character minimum for user turns created false positives for concise but complete questions. Reducing to 50 characters eliminated false positives without compromising quality.

Validation Process

The validation framework runs three analysis passes:

Pass 1: Individual Example Validation

Check each example against all five validation categories. Report specific failures with line numbers and fix recommendations.

Pass 2: Dataset Statistics

Calculate compliance rates by category:

- Overall compliance percentage

- Structure compliance percentage

- Metadata compliance percentage

- CVE format compliance percentage

- Language compliance percentage

- Content quality compliance percentage

Pass 3: Failure Analysis

Group failures by type to identify systematic issues. If 50 examples fail CVE format validation with the same pattern, a systematic fix can be applied rather than manually correcting each example.

4.2 Compliance Journey: 47.2% to 100%

The validation framework initially reported 47.2% compliance (397 of 841 development examples passing all checks). For iterative testing efficiency, we performed detailed compliance work on an 841-example development subset from the Stage 3 pre-deduplication dataset (2,418 total examples, with initial splits of 1,934 train / 243 val / 241 test). After proving fixes on the development subset, we applied the same remediation patterns to all remaining examples. We implemented systematic fixes across five categories to reach full compliance.

Initial Compliance Analysis (Development Subset: 841 Examples)

Running validation on the 841-example development subset revealed:

- Structure compliance: 98.4% (827/841) - only 14 examples had turn count or role issues

- Metadata compliance: 87.1% (732/841) - 109 examples missing required fields

- CVE format compliance: 76.7% (645/841) - 196 examples had CVE formatting issues

- Language compliance: 96.9% (815/841) - 26 examples had invalid language tags

- Content quality compliance: 95.6% (804/841) - 37 examples below minimum length

Overall compliance: 47.2% (397/841) - examples passing all validation checks

The primary bottleneck was CVE format compliance. Nearly half of examples referenced security incidents without proper CVE-YYYY-NNNNN formatting.

Fix Category 1: CVE Format Standardization (452 fixes across full dataset)

Analysis of CVE format failures revealed three patterns. After identifying these patterns in the 841-example development subset, we applied systematic fixes across all 2,418 Stage 3 examples:

Pattern 1: Incident descriptions without CVE assignments (312 cases)

Examples described real security incidents but didn't include CVE identifiers. Some incidents like "The 2019 Capital One breach exposed 100 million customer records through SSRF attacks" are well-documented security incidents without single CVE assignments.

Fix: We cross-referenced incident descriptions against CVE databases, assigned correct CVE-YYYY-NNNNN identifiers where available, and used explicit null values for incidents without CVE assignments (such as complex breaches involving multiple vulnerabilities or proprietary security failures).

Pattern 2: Empty strings instead of null (68 cases)

Examples had cve_id: "" instead of cve_id: null for incidents without CVE assignments. Bug bounty disclosures often lack CVE assignments, but empty strings break validation.

Fix: We replaced empty strings with explicit null values: cve_id: null.

Pattern 3: Malformed CVE references (72 cases)

Examples had incomplete CVE numbers ("CVE-2023" missing the numeric portion), reversed formats ("2019-11510-CVE"), or informal references ("Capital One CVE").