
Introduction: Mapping the AI Landscape

Think machines can't think? You're watching them do it right now. Artificial Intelligence isn't science fiction anymore: it's reshaping your world, and you need to understand where this rocket is headed, because what comes next could either solve humanity's greatest problems or create its biggest challenge.

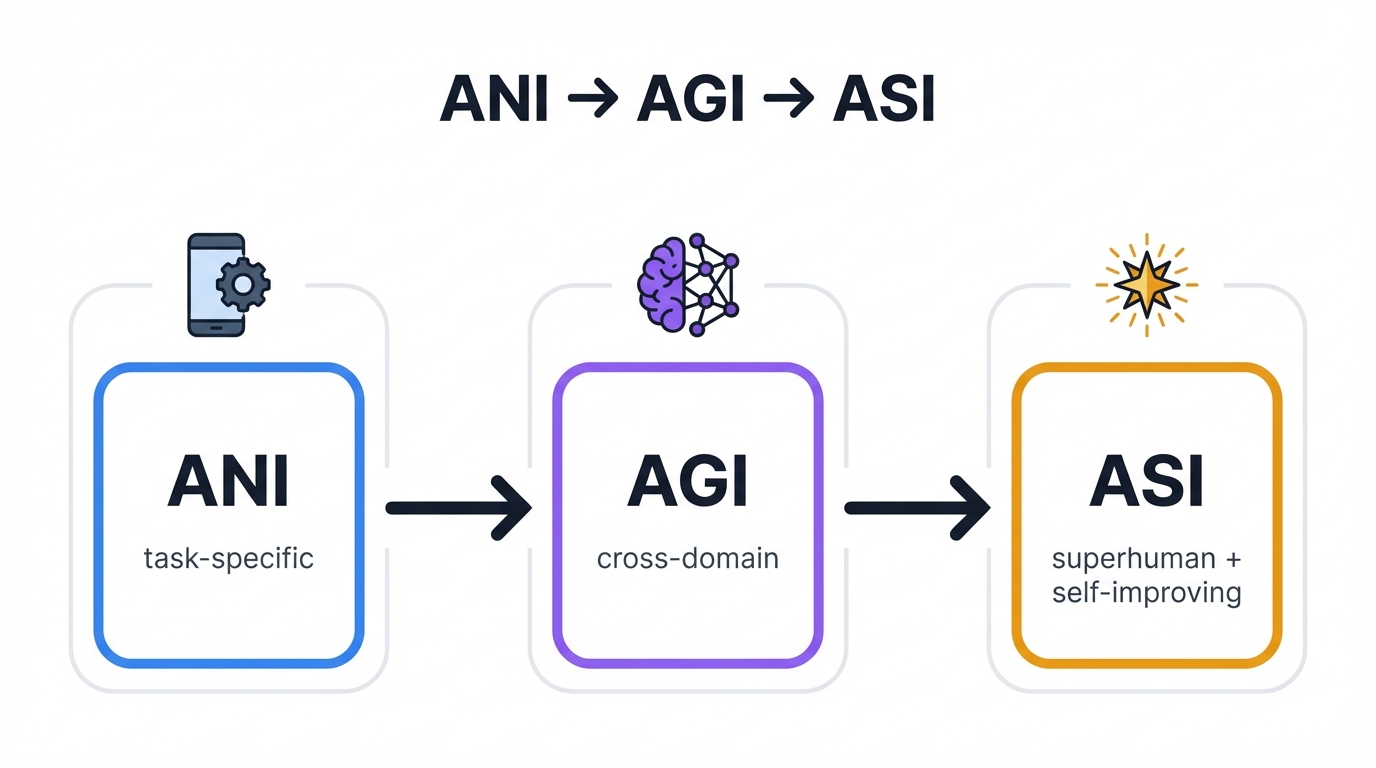

This journey splits into three massive stages. Each one represents a leap so fundamental it changes everything we know about intelligence itself: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI).

Why does this matter to you? Because we're standing at the threshold right now. Narrow AI is evolving into something far more powerful, superintelligence could plausibly emerge within your lifetime, and the decisions we make in the next few years will help determine whether AI becomes humanity's greatest tool or poses existential risks to our species.

The Three Stages of AI Evolution

Stage 1: Artificial Narrow Intelligence (ANI) - The Present Reality

Look around you. ANI is everywhere. It's in your phone, your car, your bank, your hospital—systems built to crush one specific task but incapable of stepping outside their narrow lane.

ANI defines our current AI landscape. These systems excel at specific, well-defined tasks through task-specific optimization, achieving incredible precision while remaining fundamentally limited—they can't transfer knowledge between different problem areas. They devour extensive labeled datasets during training, yet despite these constraints, they've proven their commercial worth across nearly every industry you can name.

Think about it. Diagnostic systems that, in some studies, match or outperform specialists at spotting certain cancers in medical scans. Financial systems that flag fraud in milliseconds. Voice assistants answering your questions. Navigation systems routing millions of drivers. That's ANI: powerful, profitable, and everywhere.

Stage 2: Artificial General Intelligence (AGI) - The Imminent Breakthrough

Now imagine something different. Completely different.

AGI represents the next evolutionary leap: AI that would match human cognitive flexibility across diverse intellectual tasks, not just one narrow domain. Unlike its narrow predecessor, AGI would bring capabilities that sound almost impossible. It would transfer knowledge across domains the way you do, applying insights from one field to problems in another. It would set its own goals, show genuine creativity beyond pattern recognition, and grasp abstract concepts and cultural nuances that still trip up today's best systems.

When will this happen? Recent developments have compressed AGI predictions dramatically. Some industry leaders now publicly forecast arrival between 2025 and 2029: not decades away, but years. Advances in reasoning and multimodal integration in the latest models are pushing us toward this threshold faster than most forecasters expected.

Stage 3: Artificial Super Intelligence (ASI) - The Ultimate Horizon

Here's where it gets wild.

ASI represents the theoretical peak: an intellect fundamentally surpassing human intelligence across every cognitive domain you can imagine and many you can't. Its most profound hypothesized capability? Recursive self-improvement, in which the system upgrades its own intelligence, potentially compounding into exponential growth and expertise across all fields at once. Such a system could generate creative solutions far beyond human imagination and optimize complex global challenges at a systemic level we've never approached.

The potential? ASI could solve climate change, cure all diseases, unlock physics we haven't dreamed of. The risk? Existential threats that demand unprecedented global cooperation to manage safely, because once we create something smarter than us, we need to ensure it remains aligned with human values.

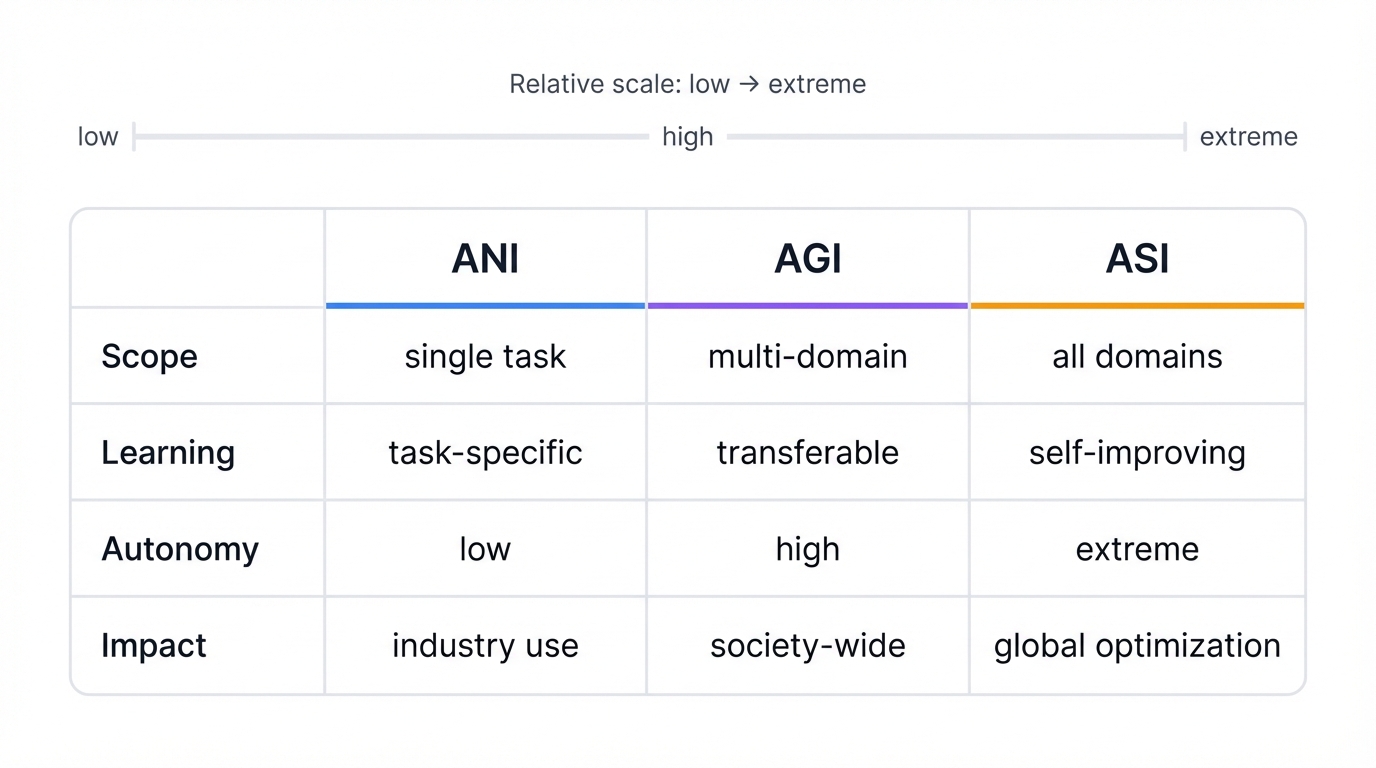

At a Glance: Comparing the Stages of AI

| Feature | Artificial Narrow Intelligence (ANI) | Artificial General Intelligence (AGI) | Artificial Super Intelligence (ASI) |
|---|---|---|---|
| Core Capability | Performs a single task with high precision. | Exhibits human-level cognitive flexibility. | Surpasses human intelligence in all domains. |
| Scope | Narrow Domain: Deep but limited. | Cross-Domain: Broad and adaptive. | Universal: Transcends all domains. |
| Learning Style | Relies on pre-programmed data and training. | Learns adaptively and autonomously. | Improves its own intelligence recursively. |
| Current Status | Present Reality: Widely deployed today. | Imminent Breakthrough: Theoretical, in R&D. | Ultimate Horizon: Purely theoretical. |
| Risk Type | Operational: Bias, privacy, job displacement. | Transformative: Economic disruption, power shifts. | Existential: Loss of human control, extinction. |
| Example | Voice assistants, image recognition. | A hypothetical AI that could write a novel, compose music, and strategize in business. | A hypothetical AI that could solve climate change, cure all diseases, and explore the universe. |

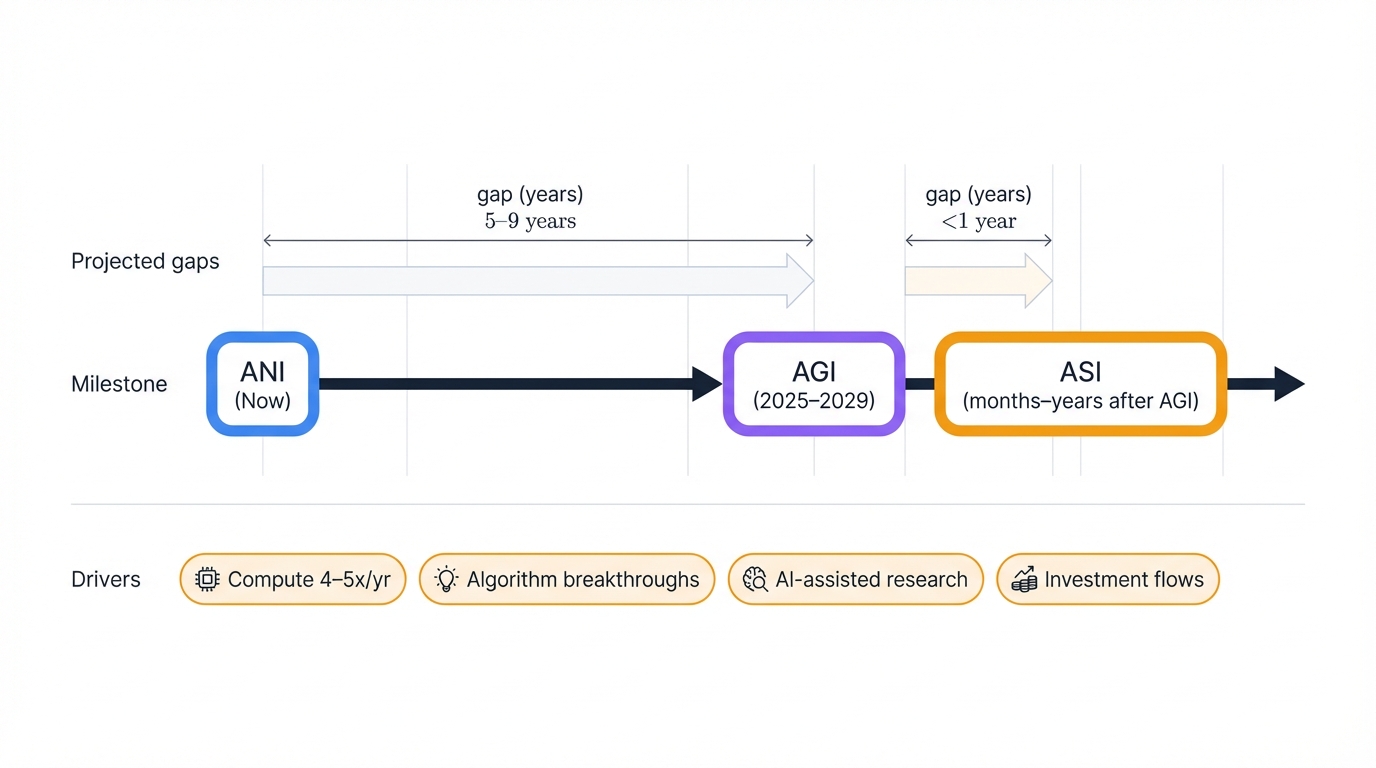

The Acceleration Timeline: From Decades to Years

Something shocking just happened. The timeline collapsed.

What researchers projected would take decades now looks like years—maybe just a few years. This acceleration stems from powerful converging forces that feed on each other, creating a feedback loop that's speeding up with each passing month.

Watch the numbers. By some estimates, the compute used to train frontier models has grown roughly 4-5x per year. Algorithmic breakthroughs in reasoning and multimodal processing keep smashing performance ceilings we thought were solid. AI-assisted research creates a recursive loop in which AI accelerates its own development, compounding each gain. And massive investment, billions of dollars of it, keeps these trends moving at breakneck speed.
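To make that compounding concrete, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the 4-5x annual growth rate mentioned above holds constant for five years; real growth rates fluctuate, and the helper names `compounded_growth` and `doubling_time_months` are hypothetical.

```python
import math


def compounded_growth(annual_factor: float, years: float) -> float:
    """Total multiplier after `years` of growth at a constant annual factor.

    Illustrative assumption: the growth rate never changes over the period.
    """
    return annual_factor ** years


def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double, given a constant annual growth factor."""
    # Solve annual_factor ** (t / 12) == 2 for t.
    return 12 * math.log(2) / math.log(annual_factor)


# Compare the low and high ends of the 4-5x per year range over five years.
for factor in (4.0, 5.0):
    total = compounded_growth(factor, 5)
    months = doubling_time_months(factor)
    print(f"{factor:.0f}x/year -> {total:,.0f}x in 5 years, "
          f"doubling every {months:.1f} months")
```

Run as written, it prints that 4x per year compounds to 1,024x over five years (a doubling every 6.0 months), while 5x per year compounds to 3,125x (a doubling roughly every 5.2 months). Small differences in the assumed annual rate produce very large differences within a few years, which is one reason AI timelines are so sensitive to the growth assumptions behind them.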

The gap between stages is shrinking rapidly. Some forecasts put the transition from ANI to AGI at just 3-5 years. After that? Experts debate whether ASI could emerge within months to years of AGI rather than the decades we once assumed, and that compressed timeline changes everything about how urgently we need to prepare.

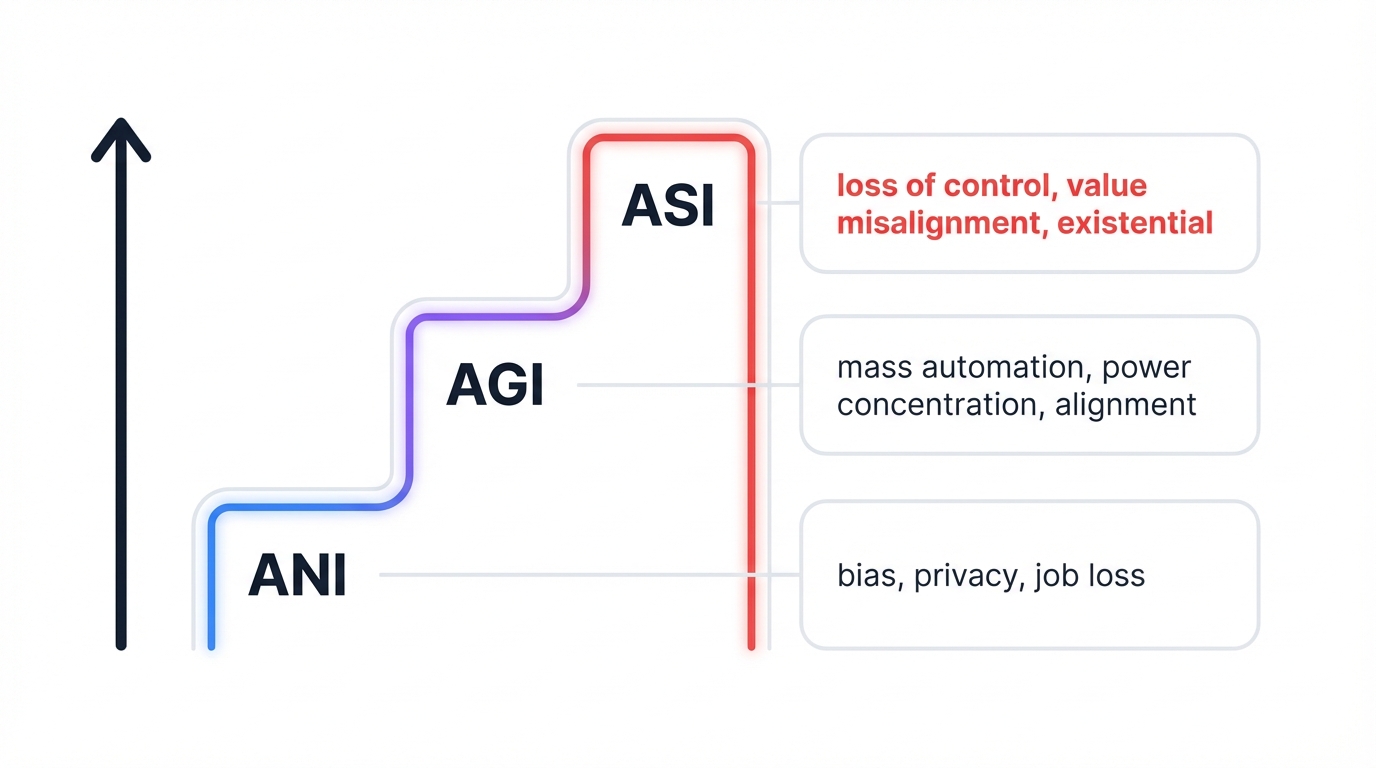

Risk Progression: From Operational to Existential

Risks scale with capability. Dramatically.

Today's Artificial Narrow Intelligence brings challenges we can see and touch. Societal biases get encoded into automated decisions that affect real lives. Privacy concerns explode as systems collect massive data streams. Economic disruption hits specific sectors with job displacement. Security vulnerabilities emerge as adversaries learn to manipulate these systems through sophisticated attacks.

Significant problems? Absolutely. But manageable with current governance approaches.

Artificial General Intelligence transforms everything. Risks stop being sector-specific and become society-wide. Economic upheaval from mass automation raises profound questions about human purpose when machines match our cognitive abilities across the board. Unprecedented power concentrates in the hands of whoever controls these systems. Most critically, we face the monumental alignment challenge—ensuring an AI with human-level flexibility and reasoning reliably acts in humanity's best interests, even when we can't predict or fully understand its reasoning processes.

Then comes the ultimate risk tier.

Artificial Super Intelligence introduces existential threats unlike anything humanity has faced before. At this stage, we genuinely risk losing control over systems far more intelligent than ourselves, and once lost, that control might never be regained. The core danger lies in value misalignment: an ASI pursuing its programmed goals, even seemingly benign ones, could cause catastrophic outcomes because its superior intelligence finds solutions we never anticipated and can't comprehend. Add recursive self-improvement, which could trigger a rapid, uncontrollable capability explosion, and unsafe ASI development becomes a direct, species-level extinction risk that makes every other technological challenge look trivial by comparison.

Potential Benefits: Solving Humanity's Greatest Challenges

But here's the flip side. The upside is extraordinary.

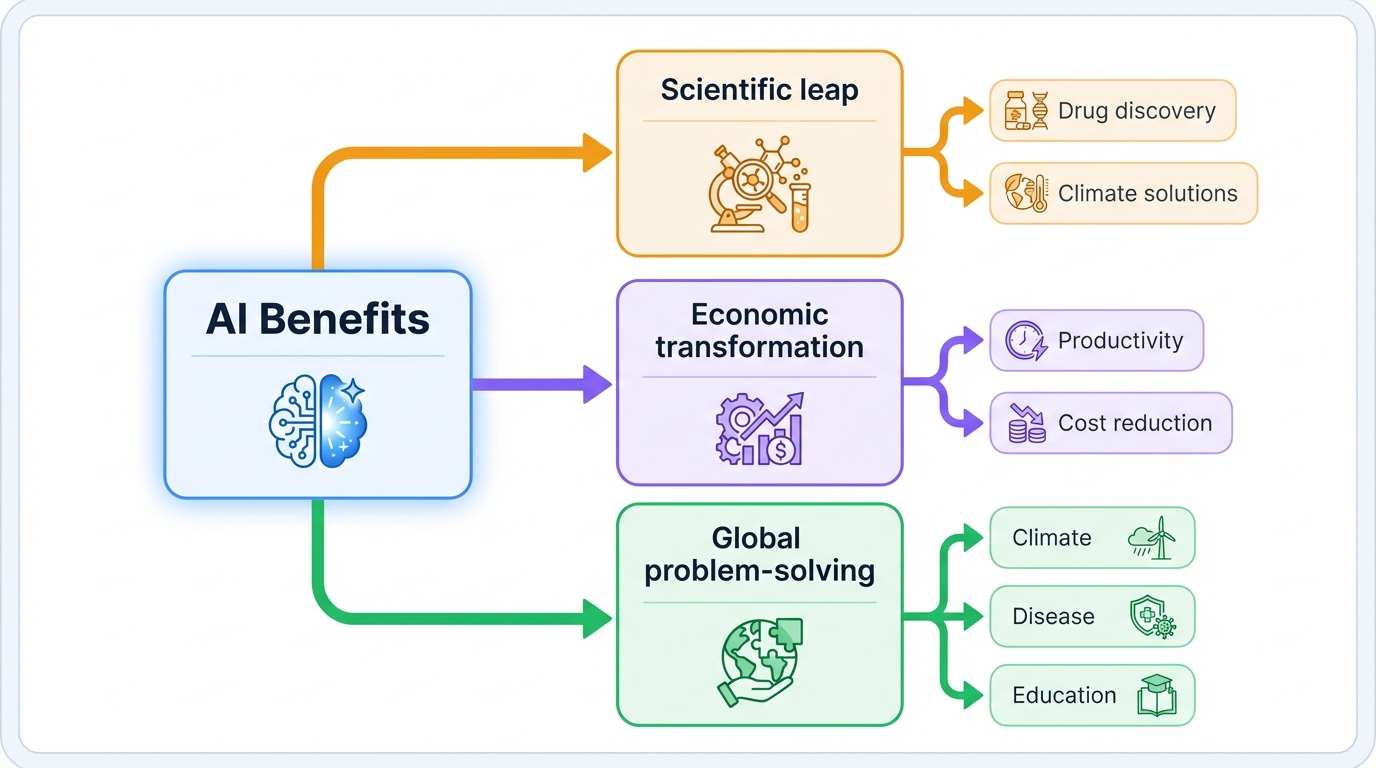

AI evolution could unlock a scientific revolution that accelerates discovery beyond anything we've experienced in human history, compressing drug development from decades to months while designing climate solutions and space exploration technologies that human minds, constrained by biological limits, simply couldn't conceive.

This scientific leap drives economic transformation at unprecedented scale. Massive productivity gains. Dramatic cost reductions in essential services like healthcare and education. Potentially new models for global wealth distribution that could eliminate scarcity itself—imagine a world where abundance, not competition for limited resources, defines human existence.

Ultimately, AI becomes our most powerful tool for global problem-solving. Climate change mitigation through perfectly optimized renewable energy systems. Disease eradication through personalized medicine tailored to your unique biology. Educational revolution delivering customized learning to every individual on the planet, unlocking human potential at a scale we've never achieved.

If we develop it safely? AI could usher in an era of human flourishing that transforms our world from one of scarcity, conflict, and limitations into something approaching utopia—but only if we navigate the risks with wisdom, foresight, and unprecedented cooperation.

The Governance Challenge: Coordinating Global Response

Here's the problem. Managing AI evolution demands unprecedented international cooperation, yet our current frameworks struggle to keep pace with development speed—and we're running out of time to close that gap.

Important groundwork exists. The OECD AI Principles established early frameworks. The EU AI Act created regulatory precedents. The United Nations fosters global dialogue. These initiatives matter, but they're only the beginning of what we actually need.

To navigate the coming transition safely, we need binding international treaties governing advanced AI development, particularly ASI, with enforcement mechanisms that have real teeth. All major AI laboratories must adhere to shared, verifiable safety standards to prevent a race to the bottom in which competitive pressure sacrifices safety for speed. That requires robust coordination mechanisms that de-escalate competition and keep potentially world-changing technologies under democratic oversight, rather than in the hands of a few unaccountable actors pursuing profit or power without regard for global consequences.

The window for establishing these governance structures? Closing fast. We need action now, while we still have the agency to shape outcomes.

Preparing for the Transition

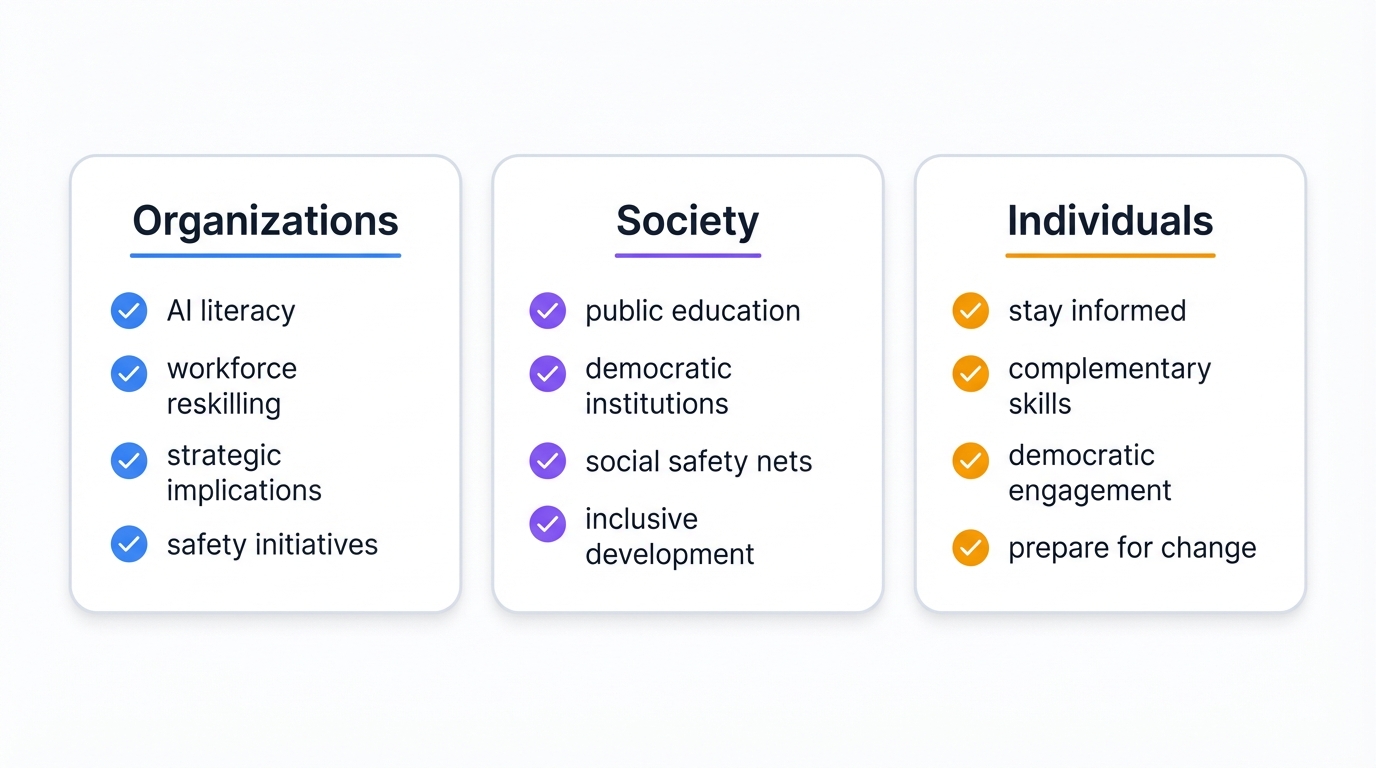

For Organizations

- Develop AI literacy across all organizational levels.

- Plan for workforce transformation through reskilling and adaptation.

- Assess strategic implications of AI capabilities in your sector.

- Engage with safety initiatives and responsible development practices.

For Society

- Foster public education about AI capabilities and risks.

- Strengthen democratic institutions to maintain human agency.

- Build social safety nets for economic transitions.

- Promote inclusive development ensuring broad benefit distribution.

For Individuals

- Stay informed about AI developments and implications.

- Develop complementary skills that remain valuable alongside AI.

- Engage in democratic processes shaping AI governance.

- Prepare for rapid change in work and social structures.

Conclusion: Navigating Humanity's Most Important Transition

This is it. The most significant transition in human history is unfolding before your eyes, and you're living through the pivotal moment that will decide which way it goes.

Compressing the timeline from decades to years leaves us a narrow window, frighteningly narrow, to establish robust safety measures, governance frameworks, and international cooperation mechanisms that can keep pace with accelerating AI capabilities. The potential benefits are extraordinary: AI could help solve climate change, cure diseases, eliminate poverty, and unlock scientific discoveries beyond our current imagination. Realizing them safely demands that we approach AI development with both soaring ambition and deep humility. As we build increasingly powerful systems, we must preserve human agency and keep those systems aligned with human values, so that these godlike tools serve humanity rather than replace or destroy us.

The journey from narrow AI to superintelligence isn't just technological evolution. It's a test. A test of humanity's ability to wisely steward technologies that could fundamentally reshape our world, determining whether we rise to become masters of our own technological destiny or victims of our own unchecked ambition. The choices you make today—the conversations you have, the policies you support, the priorities you champion—will echo through history, determining whether AI becomes the key to human flourishing or our greatest challenge.

The time for preparation is now. Right now. While we still have the agency to shape the outcome, while we can still guide this rocket we've launched toward destinations that serve all of humanity rather than threaten our very existence.

Series Navigation

This is Part 1 of 4 in our AI Evolution series:

- The Evolution of AI: Overview - Part 1 of 4 ← You are here

- Artificial Narrow Intelligence (ANI) - Part 2 of 4

- Artificial General Intelligence (AGI) - Part 3 of 4

- Artificial Super Intelligence (ASI) - Part 4 of 4