Executive Summary

Complexity Management

Multi-cloud AI deployments multiply security challenges across different platforms. Success requires unified security policies, centralized monitoring, and careful data governance across providers.

The stakes are high. Modern enterprises face threats that didn't exist five years ago when securing AI deployments across multiple cloud platforms, and the numbers tell a stark story—86% of organizations now run multi-cloud AI strategies, creating attack surfaces 3.2 times larger than single-cloud deployments, with average breach costs hitting $10.22 million in 2025 and 97% lacking proper access controls.

This guide cuts through the complexity. We'll show you how to build strong security across hybrid AI environments, tackling the unique challenges of protecting data, models, and infrastructure across AWS, Azure, Google Cloud, Oracle, and IBM Cloud platforms, because the technical implementation is only half the battle—the human and process factors often determine whether multi-cloud AI security succeeds or fails.

Complete Guide Contents

The Multi-Cloud AI Security Challenge

Understanding the Complexity Landscape

Picture this scenario. You're trying to secure not just one house but an entire neighborhood where each house has different owners, different security protocols, and different rules, yet your family needs to move freely between them every day—that's multi-cloud AI security in a nutshell.

Traditional security was simpler. Everything stayed in one place, behind one firewall, and the 2017 Equifax breach was devastating but actually straightforward compared to today's threats because attackers found one vulnerability and exploited it, whereas modern threats are far more sophisticated.

They don't just break into platforms. They target the connections between them, the handoffs, the trust relationships that make multi-cloud AI possible.

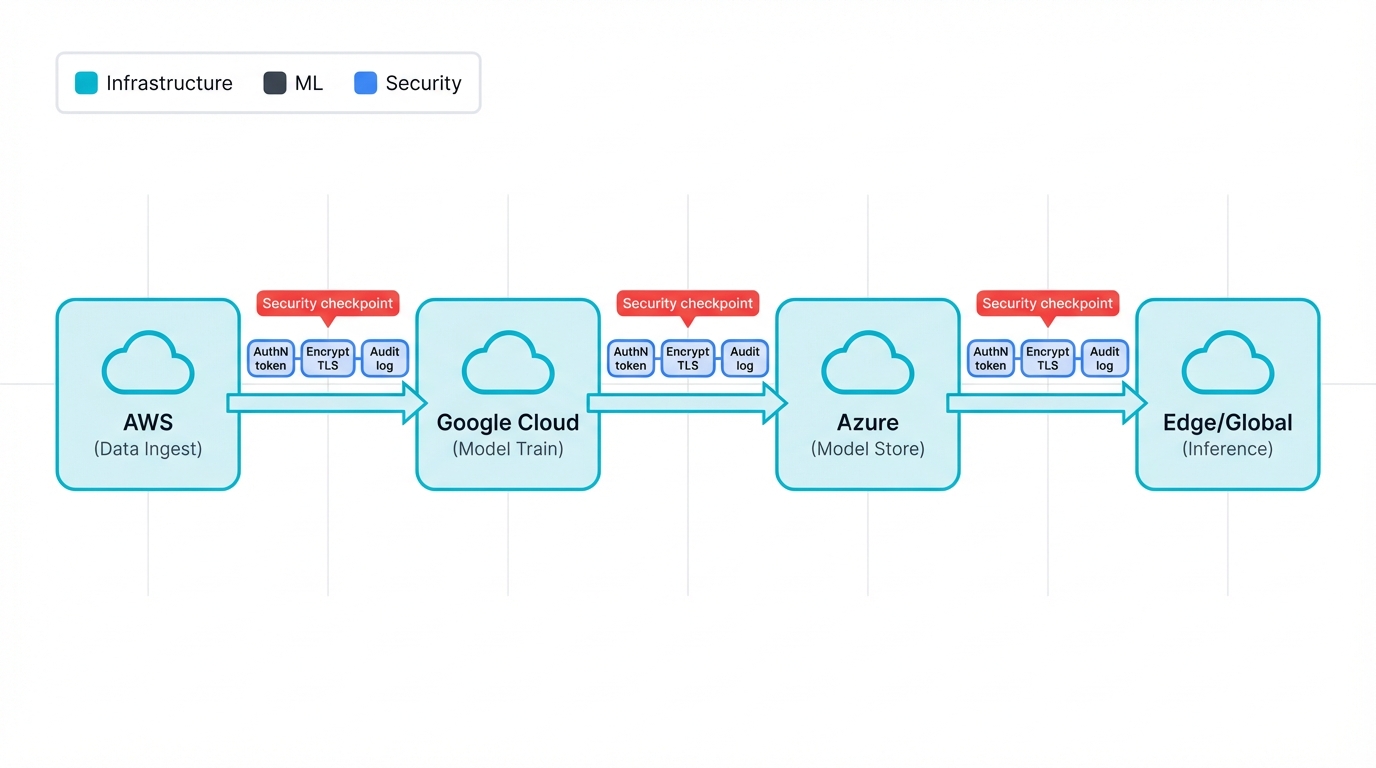

Modern AI Workflow Example

Watch how complex this gets. A typical machine learning pipeline starts with data ingestion on AWS (great data tools), moves model training to Google Cloud's TPUs (superior performance), stores models in Azure (enterprise integration), and delivers inference through global edge locations (minimize latency), and each step isn't just a technical handoff but a security checkpoint where controls must work smoothly, data governance must be preserved, and compliance requirements must be met across different regulatory jurisdictions simultaneously.

The challenge intensifies when you realize each cloud provider approaches security differently. AWS emphasizes shared responsibility with detailed service configurations, Azure focuses on enterprise identity integration, Google Cloud prioritizes zero-trust principles, Oracle stresses hardware-level security, and IBM brings decades of enterprise experience to the table.

These aren't just different brands. They represent fundamentally different security philosophies that must somehow work together harmoniously.

The Integration Challenge

Here's the problem. Your AWS security group rules don't translate to Azure Network Security Groups, your Google Cloud IAM policies require completely different syntax than AWS IAM, and your monitoring systems need to understand and correlate events from platforms that log information in entirely different formats.

It's like conducting an orchestra. Each section reads music written in a different language.

The Business Drivers Behind Multi-Cloud AI

Why do organizations accept this complexity? They have compelling reasons, though each one brings security challenges that most people don't anticipate.

Nobody Wants to Be Stuck with One Vendor

Look at the reality. Nobody trusts a single cloud provider completely because what happens when AWS raises prices or Google decides your industry isn't profitable anymore—companies want options.

Each cloud provider excels at different things. Google Cloud's TPUs will crush your machine learning training times, AWS has tools for everything imaginable, and Azure plays nice with all your Microsoft infrastructure.

But here's the catch. Now you need security policies that work everywhere, and these platforms speak completely different languages when it comes to security.

Speed Matters More Than You Think

Ever tried using an AI assistant that takes three seconds to respond? Feels broken, right? Now imagine that delay in a self-driving car making a split-second decision or a trading algorithm where microseconds literally cost millions.

That's why companies scatter AI across clouds. Put models closer to users, cut response times from hundreds of milliseconds down to dozens, and users get better experiences while you gain competitive advantage.

But your security problem just exploded. Instead of protecting one fortress, you're securing dozens of outposts around the globe, and each one needs the same level of protection but exists in different countries with different laws.

When AWS Goes Down, You Need a Backup Plan

Remember when AWS US-East-1 crashed? Half the internet broke, and your customers didn't care that it was Amazon's fault—they just knew your service was dead.

Multi-cloud gives you a safety net. AWS crashes, you failover to Azure; Google has problems, you switch to AWS; it's like having multiple data centers except now they're run by different companies with different security approaches.

Resilience Reality Check

This resilience only works when security architectures truly support seamless failover without creating new vulnerabilities, and many organizations discover too late that their failover procedures introduce security gaps like emergency access credentials that bypass normal controls, network configurations that prioritize availability over security, and data synchronization processes that temporarily disable encryption.

Regulatory Compliance and Data Sovereignty

Global operations navigate increasingly complex data protection regulations that often conflict. European patient data must remain within GDPR boundaries and cannot be processed on US soil due to Privacy Shield invalidation, Chinese user information requires local processing under Cybersecurity Law provisions, and US government contracts demand FedRAMP compliance with specific cloud certifications.

Multi-cloud strategies provide necessary geographic flexibility while multiplying compliance complexity, requiring legal expertise that spans multiple jurisdictions and technical implementation that can prove data residency and processing compliance in real-time.

Real-World Implementation: Global Fintech Case Study

TechnoBank: A Multi-Cloud Journey

Consider TechnoBank. This global financial services company operates in 47 countries, serves over 100 million customers, and uses AI for fraud detection, personalized banking recommendations, and automated trading algorithms, and their multi-cloud journey started with a simple goal—cut infrastructure costs by 30% while boosting service availability to 99.99%.

Initially, TechnoBank ran everything on AWS. This worked well for US branches, but expansion into Europe required GDPR compliance, meaning European customer data couldn't be processed in US data centers, and entry into Asian markets revealed that AWS lacked sufficient presence in key regions, leading to unacceptable latency for real-time fraud detection.

Their solution involved migration. European operations moved to Azure for strong compliance and GDPR-ready infrastructure, while Asian operations deployed on Google Cloud for superior regional network performance, and the security implications were immense.

The Security Transformation

What began as single AWS security architecture suddenly required coordination across three cloud providers. Each had different authentication systems, networking models, and monitoring tools, and their fraud detection AI, which previously accessed a single PostgreSQL database on AWS RDS, now had to correlate data from Azure SQL Database, Google Cloud SQL, and various edge databases for real-time processing.

Each connection demanded authentication, encryption, and audit logging. Even more challenging, TechnoBank's compliance needs meant they had to prove in real-time that European customer data never left European data centers, that Asian customer data was processed per local regulations, and that all processing met both local banking laws and global security standards.

This involved deploying data classification systems across all three clouds, encryption key management that adhered to the strictest security standards in every jurisdiction, and audit logging capable of satisfying regulators in multiple countries simultaneously.

The Results

The project succeeded. It cut costs by 35% and enhanced availability to 99.995%, but it required eighteen months of intensive security architecture work, retraining their entire security team, and deploying entirely new categories of security tools, and most importantly, it fostered a fundamental shift from "securing our cloud" to "securing our multi-cloud ecosystem."

Identity and Access Management: The Foundation of Multi-Cloud Security

Beyond Traditional SSO: Identity Orchestration at Scale

Let me walk you through a scenario. Imagine an AI researcher at your company—let's call her Sarah—who needs to access training data stored in AWS S3, use development tools in Google Cloud's Vertex AI, and deploy her models via Azure Kubernetes Service, and in the days of single-cloud deployments, Sarah would have one set of credentials to access everything through her company's VPN, but now she needs separate credentials for each cloud, each with its own authentication requirements, session timeouts, and security policies.

The traditional approach fails. You'd give Sarah three different sets of credentials and hope she manages them responsibly, but think about what that means for security—now you have three times the attack surface, three places where credentials could be compromised, and three systems to monitor and manage, and multiply that by hundreds or thousands of employees, contractors, and automated systems, and this quickly becomes unmanageable.

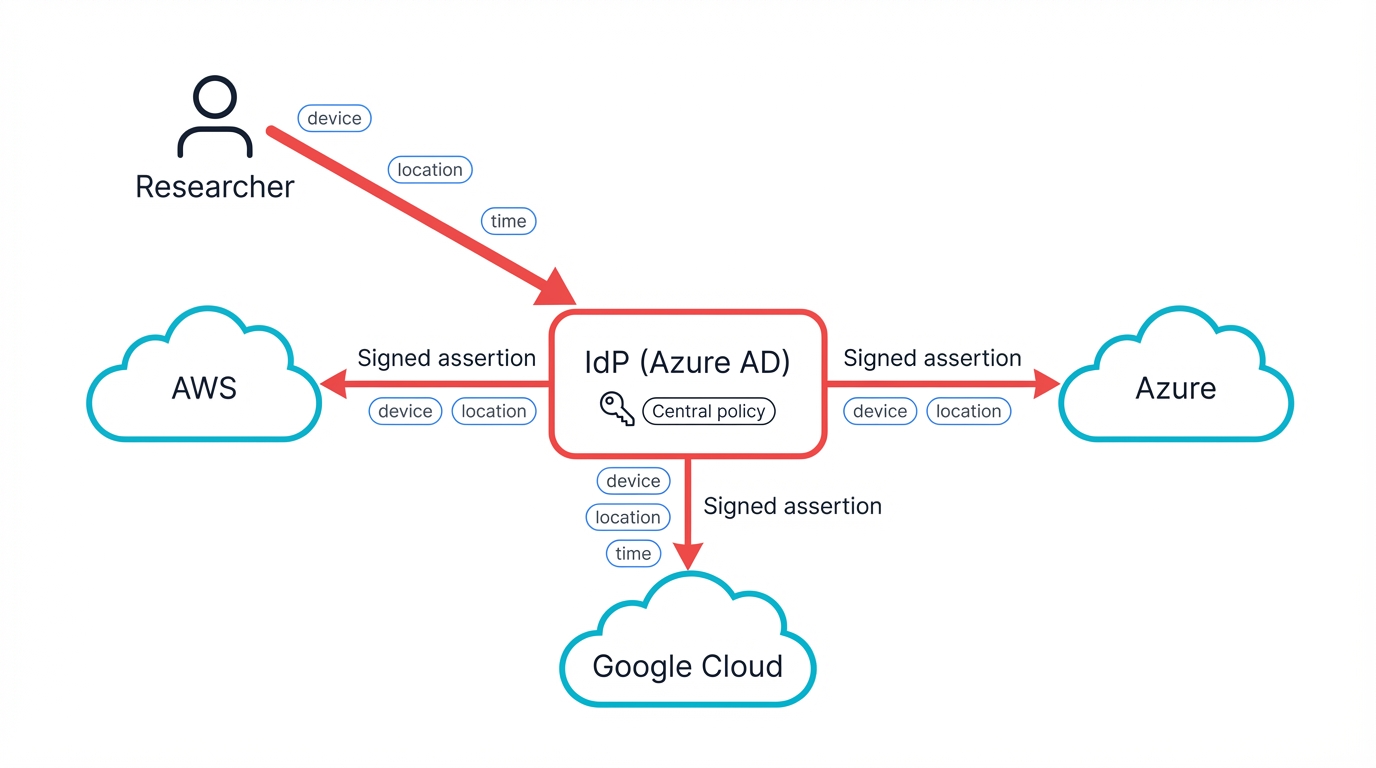

Modern federated identity architectures solve this. Instead of managing credentials separately, you establish trust relationships between your cloud providers while maintaining centralized control, and Sarah authenticates once with your main identity provider—say, Azure Active Directory—and that identity is securely passed to AWS and Google Cloud through cryptographically signed assertions, so she remains Sarah, with the same permissions and restrictions, but now she can work smoothly across all three clouds without juggling multiple passwords or facing separate login screens.

Technical Implementation Details

The technical implementation demands attention to detail. Each identity assertion includes not just who Sarah is but contextual information about her request—what time she's accessing systems, from which geographic location, what device she's using, and whether her access patterns match her typical behavior—and this contextual information becomes part of the authorization decision across all cloud platforms.

Modern identity orchestration platforms go beyond SAML or OAuth. They implement dynamic risk assessment that continuously evaluates the security posture of each access request, and if Sarah typically works from the San Francisco office during business hours but suddenly requests access to sensitive training data from a coffee shop in Bangkok at 3 AM, the system can require additional authentication factors or even temporarily restrict access while security teams investigate.

Service-to-Service Authentication: Zero-Trust Implementation

This is where things get interesting. And honestly, a bit scary if you're not ready for it, because most AI systems are not primarily accessed by people like Sarah but by other services, APIs, and automated systems that handle millions of requests each day.

Think about a recommendation engine. It provides personalized product suggestions to your e-commerce site, handles millions of requests daily, and all those requests come from other systems, not humans sitting at computers.

Multi-cloud makes this tricky. Your recommendation engine on AWS needs user data from Azure, inventory info from Google Cloud, and it needs to deliver results before your customer gets bored and leaves, and every one of those connections needs to be secure, but if security adds even half a second of delay, you've lost the sale.

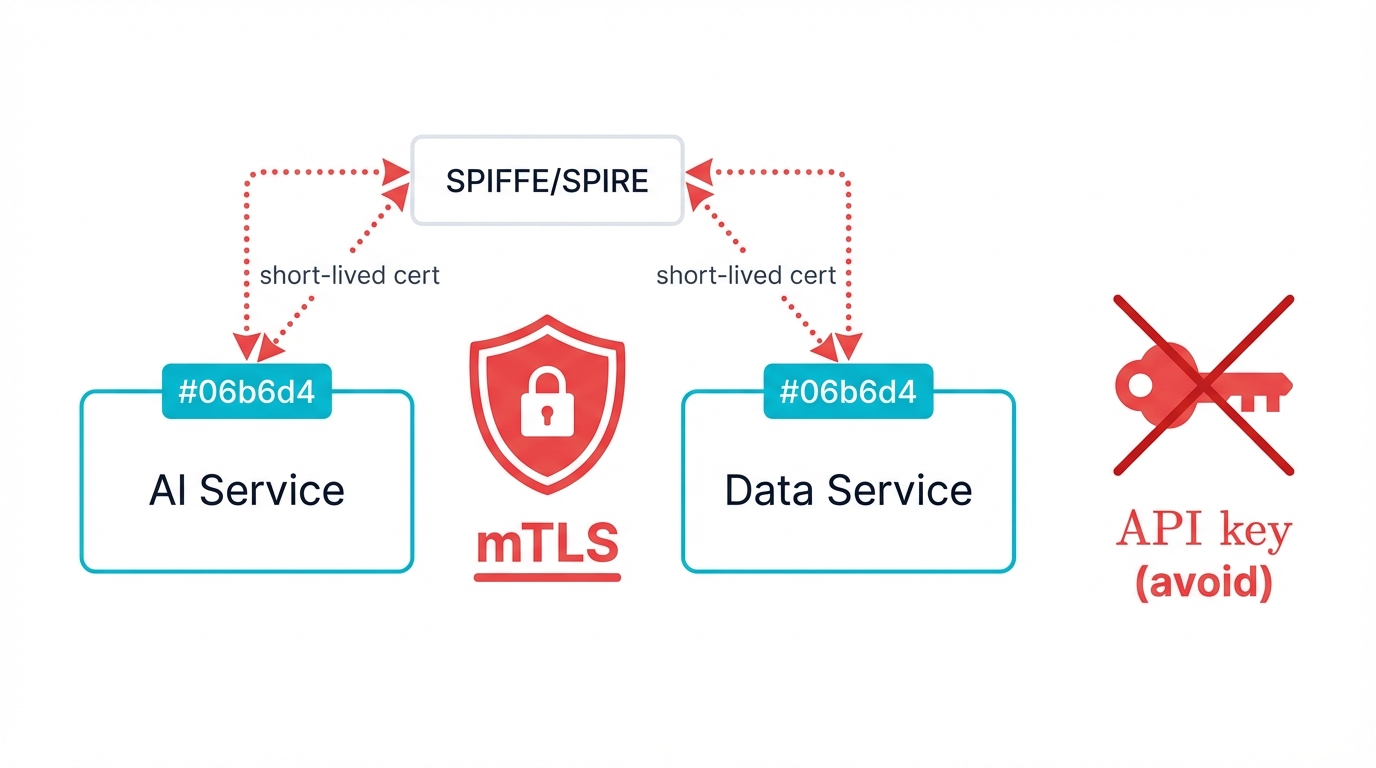

Mutual TLS becomes essential. Every connection between your AI services must be mutually authenticated and encrypted, and it isn't enough to just encrypt the data as it travels—the service asking for AI predictions must be authorized to receive them, and equally important, the AI service responding must be legitimate and not compromised.

API Key Security Anti-Pattern

I've seen too many organizations stumble here. They think they can rely on long-lived API keys for these connections—you know the approach—generate an API key that lasts months or years, embed it in your application code, and hope no one finds it, but in multi-cloud setups, this becomes a ticking time bomb because these keys often end up in configuration files, get accidentally committed to code repositories, or are discovered by attackers who have compromised part of your system.

Modern architectures use dynamically generated tokens. These short-lived tokens automatically rotate and are validated against current authorization policies, and think of it this way—instead of giving someone a house key that works forever, you give them a temporary access code that only works for today and only grants access to specific areas, so even if someone intercepts that code, it's useless tomorrow, and it could only access what was absolutely necessary.

Implementing short-lived credentials requires orchestration. Each service must prove its identity cryptographically, request temporary credentials for specific tasks, and automatically refresh those credentials before they expire, and this creates a seamless authentication process invisible to applications but offering strong security against credential theft and privilege escalation attacks.

SPIFFE/SPIRE for Universal Identity

The Secure Production Identity Framework provides cryptographically verifiable service identity across clouds, on-premises systems, and edge locations, independent of network location or IP addresses, and SPIFFE works by issuing unique, verifiable identities to every service in your environment while SPIRE acts as the runtime system that validates these identities and issues short-lived certificates.

Attribute-Based Access Control (ABAC) for Dynamic Authorization

Multi-cloud privilege management requires moving beyond traditional role-based access control. Instead of simply asking "Is Sarah in the Data Science role?" modern systems ask "Is Sarah in the Data Science role, accessing appropriate data for her current project, during business hours, from a managed device, within acceptable risk parameters, and in compliance with current data governance policies?"

This shift to attribute-based access control enables granular authorization. A data scientist might have broad access to training datasets during normal business hours but restricted access to sensitive customer information outside of business hours or when accessing systems from unmanaged devices, and these policies can be consistently applied across all cloud platforms while adapting to the specific capabilities and constraints of each provider.

Regulatory Compliance Through ABAC

The power of ABAC becomes evident in regulatory compliance. A single policy can enforce that European customer data is only accessed by employees with appropriate privacy training, only during European business hours, only from European locations, and only for approved business purposes, and the same policy can simultaneously enforce different restrictions for US customer data under different regulatory requirements, all while maintaining consistent security controls across your entire multi-cloud infrastructure.

Network Security: Establishing Trust Across Cloud Boundaries

Zero Trust Architecture for Multi-Cloud AI

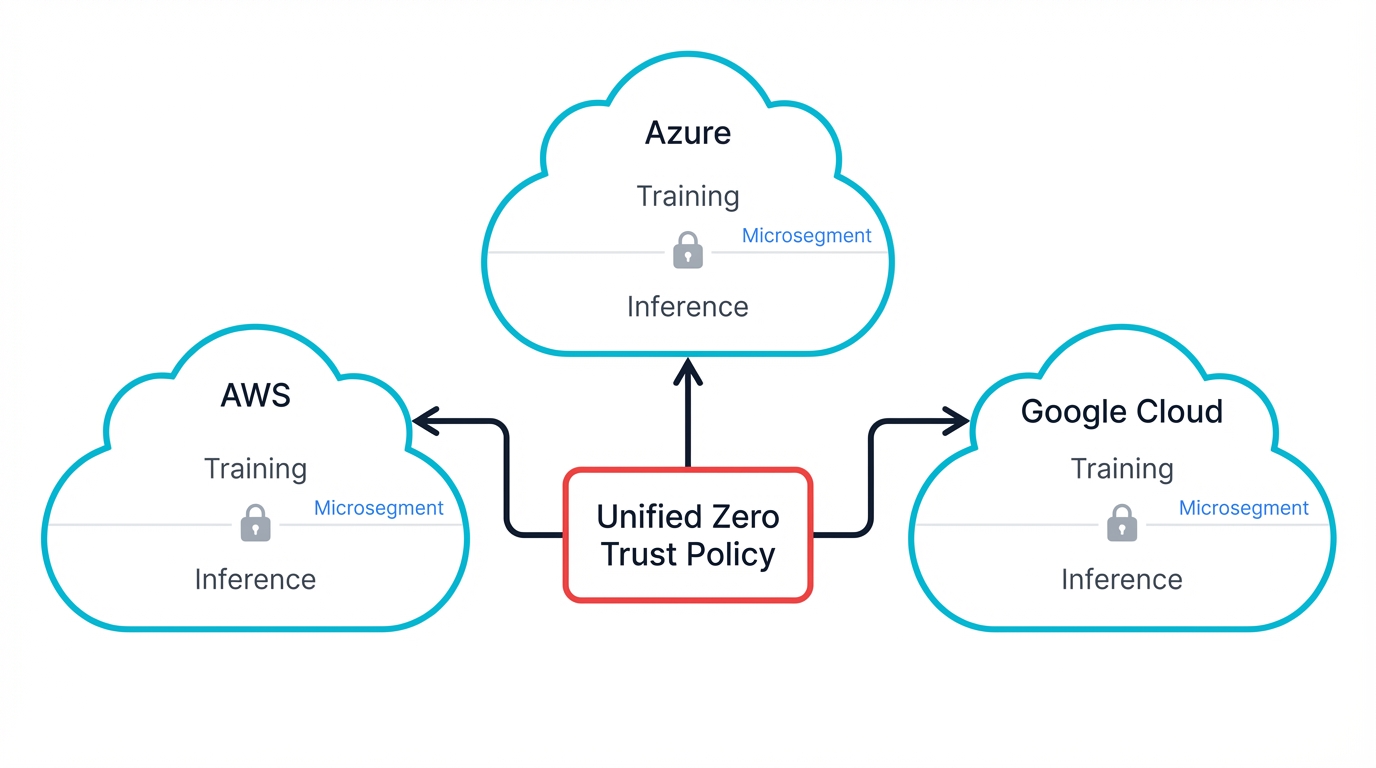

Traditional network security assumes internal networks are trustworthy. Multi-cloud AI shatters that assumption because every connection must be authenticated and authorized, regardless of its source, and this fundamental shift calls for rethinking network architecture from perimeter-based security to identity-based security that moves with workloads wherever they operate.

Implementing zero trust demands careful consideration. Different cloud providers manage network security differently—AWS emphasizes security groups and NACLs for micro-segmentation, Azure uses Network Security Groups and Azure Firewall for traffic control, and Google Cloud relies on VPC firewall rules and hierarchical access policies, and each method has advantages, but coordinating consistent security policies across all three platforms requires a unified orchestration layer that can translate security intent into platform-specific configurations.

Microsegmentation Implementation

Don't treat clouds as monolithic zones. Sophisticated architectures implement microsegmentation creating isolated network segments for different AI workloads, data classifications, and risk levels, and healthcare AI training pipelines operate in completely separate segments from customer-facing recommendation systems, even when spanning identical cloud providers, and this segmentation extends beyond simple network isolation to include separate identity domains, encryption keys, and monitoring systems.

The challenge lies in maintaining consistency. A healthcare AI training workload might require HIPAA compliance controls that include encrypted communication, audit logging, and access restrictions based on employee background checks, and these same policy requirements must be enforced whether the workload runs on AWS EC2 instances, Azure Virtual Machines, or Google Cloud Compute Engine, despite the different implementation mechanisms each platform provides.

Modern microsegmentation uses software-defined networking. This creates logical boundaries independent of physical network topology, and it enables consistent security policies that follow workloads as they move between clouds, scale up and down based on demand, or migrate to different regions for disaster recovery purposes.

Software-Defined Perimeters: Rethinking Network Security

Picture the traditional enterprise network. It's like a medieval castle with high walls and a single drawbridge where everyone inside the walls is trusted and everyone outside is a threat, and this worked when your servers lived in a single data center, but multi-cloud AI deployments have blown up this model entirely because your AI training might happen in Google Cloud, your models live in Azure, and your inference engines run on AWS edge locations, and the old castle walls don't exist anymore.

Software-Defined Perimeters change everything. Instead of trying to define a single trusted network boundary, SDP creates individualized, encrypted micro-tunnels between specific services that need to communicate, and think of it as giving every single service its own private VPN tunnel that only opens to the exact services it needs to talk to.

Here's how this works. Imagine you have an AI model serving predictions from AWS that needs to access feature data stored in a database on Azure, and in the past, you'd set up network routes between your AWS VPC and Azure VNet, configure firewall rules, and hope no one else on those networks intercepts the traffic, but with SDP, that AI service gets its own cryptographically secured tunnel directly to that specific database, and no other service can see this traffic, even if they're on the same network segment.

SDP Advantages for AI Workloads

SDP is powerful for AI workloads. It makes access decisions based on workload identity rather than network location, and your fraud detection model doesn't access customer data just because it runs on the "trusted" network segment—it gains access because it can cryptographically prove it's the legitimate fraud detection service, regardless of where it's running, and this allows you to move workloads between clouds, scale services up or down, and even operate at the edge without constantly reconfiguring network security policies.

The advantage becomes clear with dynamic workloads. Your training pipeline might spin up hundreds of compute instances for a few hours and then disappear completely, and traditional network security would require pre-configuring firewall rules for every possible connection, but SDP handles this dynamically, and when a new AI service starts, it cryptographically proves its identity and is granted immediate access only to the resources it needs.

AI-Aware Traffic Inspection

Multi-cloud AI generates unique traffic patterns. Traditional network monitoring tools see a machine learning training job transferring 50 gigabytes of data and flag it as suspicious, but AI-aware monitoring tools understand that this is normal behavior for training jobs and would immediately flag if an inference service suddenly started downloading training datasets.

The sophistication extends to understanding communication patterns. A natural language processing service that normally communicates with text databases showing sudden connections to image repositories might indicate a compromise or misconfiguration, and similarly, an inference service that typically serves predictions in milliseconds showing connections to training data repositories could indicate data exfiltration attempts.

Data Protection and Encryption Strategies

Comprehensive Encryption Implementation

All major cloud providers encrypt customer data by default. But multi-cloud environments require coordinated key management strategies that ensure consistent protection regardless of where data resides, and the challenge isn't just encrypting data—it's maintaining cryptographic control and auditability across platforms that handle encryption differently.

Understanding each cloud provider's approach is crucial. AWS utilizes KMS for centralized key management with automatic rotation capabilities and integrates with CloudHSM for hardware-backed key storage, Azure leverages Key Vault with FIPS 140-2 Level 2 validated HSMs and provides bring-your-own-key capabilities for ultimate customer control, and Google Cloud implements automatic encryption with optional Customer-Managed Encryption Keys through Cloud KMS and provides external key management integration for keys stored outside Google's infrastructure.

Customer-Managed Key Strategies

Implement centralized key management. This enables consistent encryption policies across all cloud platforms while maintaining the flexibility to leverage each provider's unique capabilities, and the goal is cryptographic consistency without sacrificing the specialized features that drove multi-cloud adoption in the first place.

Oracle Cloud provides Vault service with FIPS 140-2 Level 3 HSM-backed keys and emphasizes customer key control with dedicated key management appliances, and IBM Cloud requires customer-managed keys with FIPS 140-2 Level 4 HSMs and provides quantum-safe encryption options for organizations preparing for post-quantum cryptography threats, and each platform's approach reflects different security philosophies and compliance requirements, making unified key management both critical and complex.

The sophistication extends beyond simple encryption. Modern key management includes key derivation, attribute-based encryption, and format-preserving encryption for maintaining data usability while protecting sensitive information, and multi-cloud key management platforms can generate encryption keys based on data attributes, enabling fine-grained access control where different users see different encrypted views of the same dataset.

Confidential Computing for Sensitive AI Workloads

For highly sensitive AI processing, confidential computing secures data in use. It uses hardware-based secure enclaves that prevent even cloud providers from accessing data during processing, and this is the last frontier of data protection—securing the only remaining vulnerable stage where data must be decrypted to be processed.

The implementation varies greatly among providers. AWS Nitro Enclaves create isolated compute environments with cryptographic attestation, allowing external parties to verify that code runs inside a genuine, secure enclave, Azure Confidential VMs use AMD SEV-SNP technology to encrypt memory and CPU registers, defending against both software and hardware threats, and Google Confidential VMs offer encrypted memory with sealed secrets that tie encryption keys to specific hardware and software configurations.

Performance Considerations

The practical implementation requires careful consideration of performance trade-offs. Secure enclaves typically have memory limitations and processing overhead that can significantly impact AI model training and inference performance, and organizations must balance security benefits against performance costs, often implementing confidential computing only for the most sensitive operations while using traditional encryption for less critical workloads.

Oracle Dedicated Cloud offers hardware-isolated compute with customer-controlled encryption that provides dedicated physical infrastructure with additional security controls, and IBM Secure Enclaves implement hardware-based memory protection with cryptographic verification that leverages IBM's decades of mainframe security experience adapted for cloud environments.

Data Classification and Governance Across Clouds

Effective multi-cloud data protection requires comprehensive data classification systems. These systems understand data sensitivity levels, regulatory requirements, and appropriate protection mechanisms regardless of where data is stored or processed, and modern data governance platforms provide automated classification based on data content, context, and usage patterns while maintaining consistent policies across cloud providers.

The challenge extends beyond simple classification. It includes data lineage tracking, access auditing, and compliance reporting across platforms that handle these requirements differently, and organizations need visibility into how data flows between clouds, who accesses it at each stage, and whether processing meets regulatory requirements for data protection and privacy.

Advanced data governance platforms provide real-time monitoring. These systems automatically detect when sensitive data is accessed inappropriately or processed outside of approved workflows, and they can identify when personally identifiable information is being used for unauthorized purposes, when training datasets contain sensitive information that should be anonymized, or when data sovereignty requirements are being violated by cross-border data transfers.

Threat Detection and Incident Response

AI-Native Security Operations Center (AI-SOC)

Building effective threat detection for multi-cloud AI requires specialized capabilities. These capabilities understand AI workload patterns and can detect AI-specific threats that traditional security tools would miss entirely, and the emergence of AI-specific attack vectors—model poisoning, adversarial inputs, data extraction attacks, and model stealing—requires security operations centers that can distinguish between legitimate AI operations and malicious activity.

Consider the complexity. Monitoring a distributed machine learning pipeline that spans multiple clouds involves normal operations that might transfer hundreds of gigabytes of training data from AWS S3 to Google Cloud for processing, store intermediate results in Azure, and serve final models through edge locations globally, and traditional security tools would flag these massive data transfers and unusual network patterns as suspicious, but AI-aware security operations understand these patterns as normal business operations while remaining alert for genuine threats.

Cross-Cloud Telemetry Aggregation

Modern SIEM solutions must aggregate security telemetry from all cloud providers while understanding the context and relationships between events that span platform boundaries, and a security incident that begins with suspicious authentication attempts in AWS might progress to unauthorized data access in Azure and conclude with model exfiltration through Google Cloud, and traditional SIEM tools analyzing each cloud in isolation would miss the attack pattern that only becomes visible when correlated across all platforms.

The sophistication extends to understanding subtle indicators. Model extraction attempts might appear as normal inference requests but with systematic patterns designed to reverse-engineer proprietary models, data poisoning attacks might involve subtle modifications to training datasets that are nearly impossible to detect without specialized monitoring tools, and adversarial input attacks might appear as normal user requests but contain carefully crafted perturbations designed to cause model misbehavior.

Modern AI-SOC implementations leverage machine learning for security monitoring. This creates interesting recursive scenarios where AI systems monitor AI systems for security threats, and these meta-AI security tools can learn normal patterns of AI workload behavior and identify anomalies that indicate potential security incidents, but they also introduce new attack vectors where adversaries might attempt to poison the security monitoring systems themselves.

Automated Incident Response Playbooks

The complexity of multi-cloud AI security incidents requires sophisticated automated response capabilities. These capabilities can coordinate actions across multiple cloud providers while maintaining business continuity, and traditional incident response procedures designed for single-platform environments cannot handle the complexity of threats that span cloud boundaries and affect interconnected AI services.

Model Compromise Response

When AI models are compromised, response procedures must account for distributed deployment. This includes immediate model quarantine across all deployment locations, credential revocation across all cloud platforms, and network isolation while preserving forensic evidence.

Modern incident response platforms provide critical capabilities. They maintain comprehensive asset inventories that track AI workloads, data flows, and dependencies across all cloud providers, enabling rapid impact assessment when security incidents occur, and they provide automated containment capabilities that can isolate compromised services across multiple clouds simultaneously while preserving evidence for forensic analysis.

AI-Specific Threat Detection

Develop detection rules for AI-unique attack patterns. These rules leverage understanding of machine learning workflows, data science operations, and AI model behavior, and they must distinguish between legitimate AI operations and malicious activity while adapting to the dynamic nature of AI workloads.

The technical implementation requires sophisticated data normalization. Each cloud provider generates security events in different formats, with different timestamps, different severity levels, and different contextual information, and modern SIEM platforms provide pre-built connectors and data parsers for major cloud providers while enabling custom integration for specialized AI security events.

Integration Examples

AWS Integration: CloudTrail for API calls, GuardDuty for threat detection, Security Hub for finding aggregation, VPC Flow Logs for network monitoring, and CloudWatch for application metrics.

Azure Integration: Activity Logs for resource operations, Defender for Cloud alerts and recommendations, Sentinel analytics for threat hunting, Network Security Group logs for traffic analysis, and Application Insights for application performance.

Google Cloud Integration: Audit Logs for administrative operations, Security Command Center findings for vulnerability management, Chronicle for security analytics, VPC Flow Logs for network visibility, and Operations Monitoring for infrastructure metrics.

The Human Element: People and Process in Multi-Cloud AI Security

Building Security-First Culture in DevOps (DevSecOps)

The most advanced multi-cloud AI security architecture will fail without the right people and processes. The shift to DevSecOps involves more than just adding security tools to existing development workflows—it demands fundamental changes in how teams view security responsibilities, risk management, and collaborative problem-solving across cloud platforms.

Traditional security models established clear boundaries. Development teams built applications, operations teams deployed and managed infrastructure, and security teams enforced policies and investigated incidents, but multi-cloud AI environments have rendered these boundaries obsolete because a data scientist training models in Google Cloud might accidentally introduce security vulnerabilities that affect inference services running in AWS.

Cross-Functional Security Training

Effective multi-cloud AI security requires that every team member understands the security implications of their work across all platforms. Data scientists need to understand how their model training practices affect network security policies, DevOps engineers need to understand how their deployment procedures impact data governance requirements, and security teams need to understand enough about AI operations to distinguish between normal behavior and genuine threats.

This training cannot be a one-time event. Multi-cloud AI environments change rapidly, with new services, security features, and threat vectors emerging regularly, and organizations must implement continuous learning programs that keep teams current with evolving security best practices across all cloud platforms they use.

Modern training programs leverage hands-on exercises that simulate real-world scenarios teams will encounter, and instead of abstract security concepts, teams work through practical scenarios like "Your fraud detection model is performing poorly, and investigation reveals that training data in AWS S3 has been modified—walk through the incident response procedure across AWS, Azure, and Google Cloud," and these exercises build muscle memory for security procedures while reinforcing the interconnected nature of multi-cloud security.

Upskilling Security Teams for Multi-Cloud AI

The specialization required for effective multi-cloud AI security extends far beyond traditional cybersecurity skills. Security professionals must develop deep understanding of machine learning operations, cloud platform differences, and the unique threat landscape that emerges when AI systems span multiple cloud providers.

The technical skill development includes hands-on experience. This means understanding how data scientists work with Jupyter notebooks, how MLOps teams deploy models through CI/CD pipelines, and how inference services integrate with business applications, and security teams that try to secure AI workloads without understanding these workflows inevitably implement controls that either provide inadequate protection or create such significant friction that development teams find workarounds.

Cross-Platform Security Architecture

The architectural thinking required for multi-cloud AI security represents a significant evolution from traditional enterprise security. Instead of designing security for a known, relatively static environment, security architects must create flexible frameworks that adapt to dynamic workloads while maintaining consistent protection across platforms with different capabilities and constraints.

Governance and Compliance in Multi-Cloud AI

The governance challenges of multi-cloud AI environments extend beyond technical implementation. They include organizational structures, decision-making processes, and accountability frameworks that span multiple cloud platforms and regulatory jurisdictions.

Establishing clear ownership becomes critical. Multi-cloud environments can create confusion about who is responsible for security decisions and incident response when problems span multiple platforms, and traditional organizational models with separate teams for each cloud platform can result in security gaps where each team assumes another team is handling cross-platform security concerns.

Successful organizations implement cross-functional governance structures. These structures have clear accountability for multi-cloud security outcomes, and this might involve creating dedicated multi-cloud security teams, establishing cross-platform incident response procedures, or implementing matrix reporting structures that ensure security decisions consider impacts across all cloud platforms.

Technology Categories and Solution Landscape

Cloud Native Application Protection Platforms (CNAPP)

The emergence of multi-cloud AI deployments has driven the evolution of comprehensive security platforms. Cloud Native Application Protection Platforms represent a convergence of multiple security capabilities into integrated solutions that understand both cloud-native architectures and AI-specific requirements.

CNAPP solutions provide critical capabilities. They offer unified security posture management that provides consistent visibility and control across AWS, Azure, Google Cloud, and other platforms, and they implement runtime protection that can detect and respond to threats in real-time across containerized AI workloads, serverless inference functions, and traditional virtual machine deployments.

AI-Specific CNAPP Capabilities

The AI-specific capabilities of modern CNAPP platforms include model security scanning that can detect vulnerabilities in machine learning models, data flow security monitoring that tracks sensitive data as it moves between cloud platforms, and AI workload behavior analysis that can distinguish between normal AI operations and potential security threats.

Cloud Security Posture Management (CSPM) Evolution

Traditional CSPM tools focused on identifying misconfigurations. But the complexity of multi-cloud AI environments has driven the evolution of more sophisticated posture management capabilities, and modern CSPM platforms provide continuous compliance monitoring across multiple cloud providers while understanding the unique security requirements of AI workloads.

The AI-aware capabilities of evolved CSPM platforms include specialized configuration assessments for machine learning services, data pipeline security validation, and model deployment security scanning, and these tools understand that AI workloads have different security requirements than traditional applications—for example, the need for specialized network configurations that support high-bandwidth model training or the requirement for confidential computing capabilities for sensitive inference workloads.

Advanced Threat Detection Platforms

The unique threat landscape of multi-cloud AI environments has driven the development of specialized threat detection platforms. These platforms understand both AI-specific attack vectors and the complexity of correlating security events across multiple cloud providers.

AI-Aware SIEM Capabilities

Modern SIEM platforms designed for AI environments provide specialized event correlation capabilities that understand the normal patterns of AI workloads and can detect subtle anomalies that might indicate security threats, and these systems maintain behavioral baselines for different types of AI services and can alert on deviations that traditional security tools would miss.

The multi-cloud capabilities of AI-aware SIEM platforms include normalized event correlation across cloud providers, unified threat intelligence that considers threats to AI systems specifically, and automated response capabilities that can coordinate incident response actions across multiple cloud platforms simultaneously.

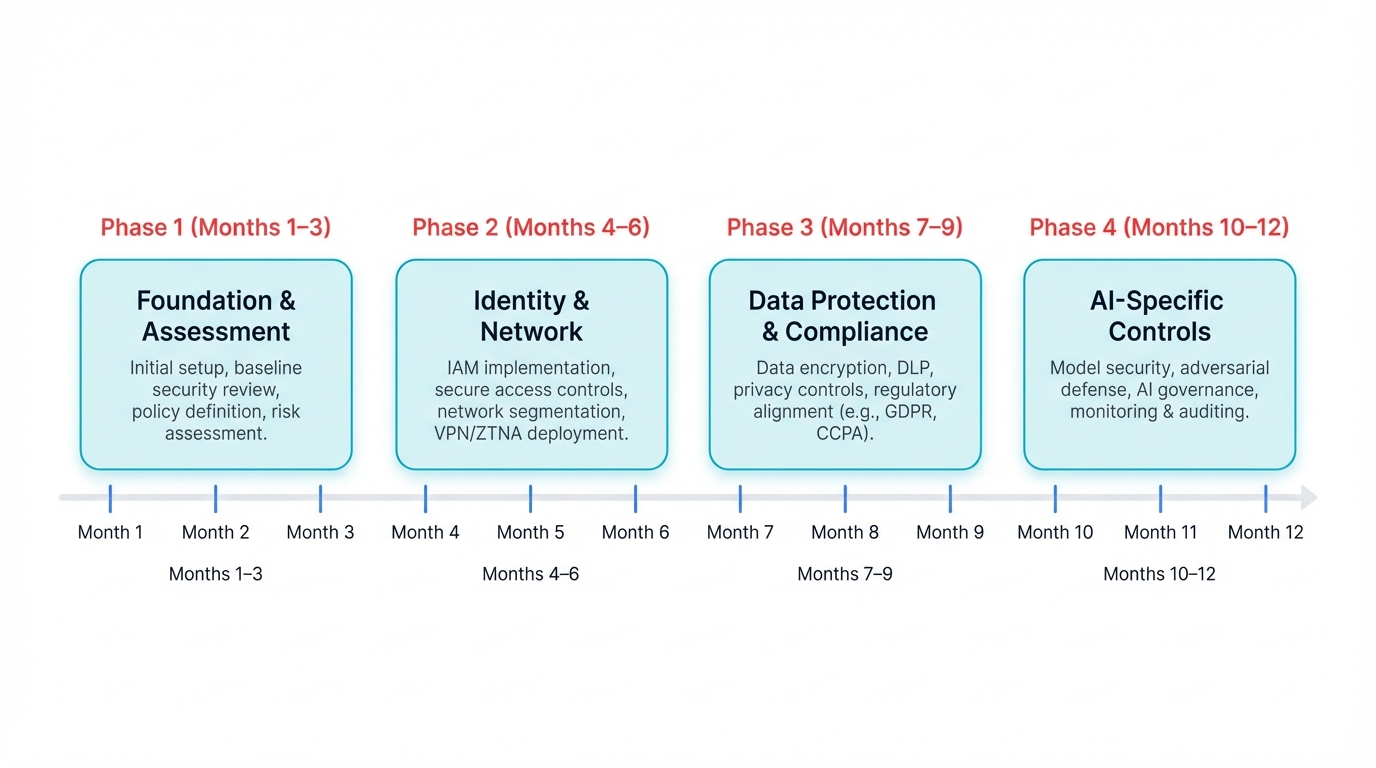

Implementation Roadmap: A Phased Approach

Phase 1: Foundation and Assessment (Months 1-3)

The foundation phase of multi-cloud AI security implementation requires comprehensive understanding of the current environment, identification of gaps and risks, and establishment of the organizational structure and processes needed to support ongoing security operations.

Comprehensive Asset Discovery

Before implementing security controls, organizations must understand their complete multi-cloud AI footprint with unprecedented detail and accuracy, and traditional asset discovery tools designed for static environments cannot adequately map the dynamic nature of AI workloads that scale automatically, move between platforms, and consume resources from multiple cloud providers simultaneously.

Modern asset discovery for multi-cloud AI environments requires specialized tools. These tools can identify not just compute resources and storage systems but also AI-specific services like managed machine learning platforms, data processing pipelines, and inference endpoints, and they must understand the relationships between assets across cloud boundaries and track data flows that span multiple platforms.

- Cross-Cloud Resource Inventory: Automated discovery across AWS, Azure, Google Cloud, Oracle, and IBM platforms using cloud-native APIs and specialized discovery tools

- AI Service Mapping: Identification of machine learning platforms, data processing services, model repositories, and inference endpoints across all cloud environments

- Network Topology Analysis: Comprehensive mapping showing inter-cloud connections, VPN tunnels, private network links, and internet-exposed services

- Data Flow Documentation: Detailed tracking of sensitive information movement between cloud platforms, including data classification, processing locations, and storage duration

Phase 2: Identity and Network Foundation (Months 4-6)

The identity and network foundation phase establishes the core security infrastructure. This phase is critical because all subsequent security controls depend on having robust identity management and secure network connectivity between cloud platforms.

Federated Identity Implementation

The implementation of federated identity across multiple cloud platforms requires careful planning and phased deployment to avoid disrupting existing operations while establishing stronger security controls, and the process typically begins with selecting a primary identity provider that will serve as the authoritative source of identity information for all cloud platforms.

The phased implementation approach typically begins with non-production environments. This validates configurations and identifies potential issues before affecting production operations, and this testing phase should include not just functional testing of authentication flows but also performance testing to ensure that federated authentication doesn't introduce unacceptable latency into AI workloads that require real-time responses.

Phase 3: Data Protection and Compliance (Months 7-9)

The data protection phase implements comprehensive encryption, data governance, and compliance monitoring capabilities. These capabilities ensure sensitive information remains protected throughout the AI lifecycle across all cloud platforms.

The implementation of advanced encryption across multi-cloud AI environments requires coordination. This involves key management systems, encryption policies, and audit procedures across platforms with different encryption capabilities and key management models.

Phase 4: AI-Specific Security Controls (Months 10-12)

The final implementation phase focuses on AI-specific security controls. These controls address the unique risks and requirements of machine learning workloads distributed across multiple cloud platforms.

AI Security Operations Center (AI-SOC)

The establishment of an AI-SOC requires specialized capabilities that go beyond traditional security operations to include understanding of AI workload behavior, AI-specific threat detection, and response procedures tailored to the unique characteristics of AI systems.

Measuring Success: Key Performance Indicators

Security Metrics

The measurement of multi-cloud AI security program effectiveness requires sophisticated metrics. These metrics account for both traditional cybersecurity outcomes and AI-specific success factors while considering the unique challenges of operating across multiple cloud platforms.

Multi-Cloud Incident Response Effectiveness

- Mean Time to Detect (MTTD) AI-Specific Security Incidents: Average time from incident occurrence to detection across all cloud platforms, with separate tracking for different types of AI security incidents

- Mean Time to Respond (MTTR) to Multi-Cloud Security Breaches: Average time from incident detection to initial containment actions across cloud boundaries

- Cross-Cloud Incident Correlation Accuracy: Percentage of security incidents that span multiple cloud platforms that are correctly identified as related events

Operational Metrics

Operational metrics must demonstrate that security controls enhance rather than hinder AI operations. They must provide the protection required for business and regulatory requirements.

AI Service Availability and Performance

- AI Service Uptime Across Clouds: Availability metrics for AI services with separate tracking for planned maintenance, security incidents, and configuration changes

- Security Control Performance Impact: Measurement of latency and throughput impact of security controls on AI inference and training operations

- Cross-Cloud Failover Success Rate: Percentage of successful automatic failovers between cloud platforms during outages

Compliance and Governance Metrics

Compliance metrics must demonstrate adherence to regulatory requirements across multiple jurisdictions. They must provide evidence of effective governance of AI systems distributed across cloud platforms.

Regulatory Compliance Effectiveness

- Multi-Jurisdiction Compliance Score: Comprehensive scoring of compliance posture across all applicable regulatory frameworks with jurisdiction-specific tracking

- Audit Readiness Metrics: Time required to produce comprehensive audit evidence and success rate of regulatory examinations

- Data Sovereignty Compliance: Percentage of data processing activities that comply with data residency requirements

Future Considerations: Preparing for Tomorrow's Threats

Quantum-Resistant Cryptography

The advancement of quantum computing represents an existential threat. Current cryptographic systems that protect multi-cloud AI deployments are vulnerable, and organizations must begin preparing for post-quantum cryptography migration now, even though practical quantum computers capable of breaking current encryption may still be years away.

The challenge extends beyond simply replacing encryption algorithms. It requires comprehensive planning for cryptographic agility that enables rapid migration to new algorithms as they become available while maintaining interoperability across cloud platforms that may adopt post-quantum cryptography at different rates.

Implementation Considerations

- Cryptographic Agility Architecture: Design encryption systems that can rapidly adopt new algorithms without requiring complete redeployment

- Hybrid Encryption Strategy: Plan for transition periods where both classical and post-quantum encryption algorithms operate simultaneously

- Performance Impact Assessment: Evaluate the computational and bandwidth overhead of post-quantum algorithms on AI workloads

AI-Native Security Evolution

The next generation of security tools will be purpose-built for AI workloads. They'll leverage artificial intelligence to protect artificial intelligence systems, and this evolution represents a fundamental shift from adapting traditional security tools for AI environments to creating security solutions that understand and protect AI systems natively.

AI-native security tools will provide capabilities that are impossible with traditional approaches. Deep learning-based anomaly detection will understand the complex patterns of AI workload behavior and identify subtle deviations that indicate potential security threats, and automated model security assessment will scan AI models for vulnerabilities, backdoors, and potential adversarial attack surfaces using techniques specifically designed for machine learning systems.

Recursive Security Challenges

The development of AI-native security creates interesting recursive challenges where AI systems must be secured against attacks on the AI systems that protect them, and this requires careful consideration of security boundaries and the development of AI security systems that are resilient against adversarial attacks specifically designed to bypass AI-based security controls.

Regulatory Landscape Evolution

The regulatory environment for AI systems is changing rapidly. New rules and requirements appear in various areas at the same time, and organizations need to stay ahead of these changes while building flexible systems that can quickly adapt to new compliance rules without disrupting current operations.

The European Union's AI Act is the first detailed AI regulation framework. It's likely to influence how other regions develop their rules, and organizations with multi-cloud AI setups must prepare for a complex regulatory landscape where different parts of AI systems might be subject to different regulations based on where they are created, trained, and used.

Emerging Threat Landscape

The threat landscape for multi-cloud AI systems continues to evolve as attackers develop new techniques specifically designed to exploit the unique characteristics of AI systems and the complexity of multi-cloud deployments, and supply chain attacks targeting AI systems represent an emerging threat vector where adversaries compromise AI development tools, pre-trained models, or data processing pipelines.

Conclusion: Transforming Complexity into Competitive Advantage

Multi-cloud AI security is both challenge and opportunity. Securing AI systems across multiple cloud platforms is complex, but organizations that develop strong security frameworks can gain major advantages through faster innovation, regulatory trust, customer confidence, and operational durability.

Transforming multi-cloud complexity from risk into asset requires more than technical solutions. It needs a mindset shift—from seeing security as a barrier to viewing it as a facilitator of innovation that enables more ambitious AI projects, and companies that follow the strategies in this guide will be well-positioned to unlock the full potential of multi-cloud AI while meeting security standards for compliance and customer trust.

The Path Forward

Success in multi-cloud AI security demands ongoing commitment. It requires continuous learning and adaptable implementation that evolves with shifting threat landscapes and regulatory changes, and organizations need to invest not only in technology and tools but also in people and processes capable of managing the complexities of multi-cloud AI operations.

The strategies and frameworks outlined in this guide offer a roadmap for transformation, but each organization must tailor these approaches to their unique circumstances, risk appetite, and business goals, and the key is to start the journey with a clear vision of the destination while staying flexible enough to adjust to changing conditions along the way.

Though the journey is difficult, the benefits make it worthwhile. As AI becomes more central to business success and competitive edge, the ability to securely manage AI across multiple clouds will become a key organizational skill rather than just a technical task.

Don't let multi-cloud complexity become your vulnerability. The time to implement comprehensive multi-cloud AI security is now, before threats escalate further and regulations tighten, and begin with foundational elements like identity federation and network security, then build on these with advanced data protection and AI-specific controls, continuously evolving your capabilities as new threats and opportunities arise.

Organizations that successfully manage the complexities of multi-cloud AI security today will be industry leaders tomorrow, and the advantages gained from effective implementation grow over time, creating sustained differentiation that becomes harder for competitors to copy, because the future belongs to organizations leveraging AI across multiple cloud platforms while maintaining security, compliance, and trust—key to ongoing innovation and growth.

Example Implementation

# Example: AI service configuration

apiVersion: v1

kind: ConfigMap

metadata:

name: ai-security-config

namespace: ai-platform

data:

security.yaml: |

authentication:

enabled: true

type: "oauth2"

provider: "identity-provider"

authorization:

rbac_enabled: true

default_role: "viewer"

monitoring:

metrics_enabled: true

logging_level: "INFO"

anomaly_detection: true

rate_limiting:

enabled: true

requests_per_minute: 100

burst_size: 20