You're diving into the Model Context Protocol. Excitement builds. You start coding, and then—something feels off. Old habits creep in, and you treat MCP like any other API, confuse tools with agents, or reduce the entire system to simple tool definitions. This isn't the full picture, and these shortcuts will bite you when you need reliability most.

Shaky agent setups emerge. Visibility vanishes. Your unpredictable AI thinking crashes into deterministic code execution, and chaos follows—errors cascade, debugging becomes a nightmare, and trust evaporates.

I'm here to dismantle three massive misconceptions that trip up even experienced developers, show you exactly why these mental models fail in production, and give you practical, battle-tested patterns that actually work when the stakes are high and your AI agents need to deliver consistent, auditable results under real-world pressure.

Misconception #1: "MCP is Basically Just Another API"

What people often say

Understanding MCP

The Model Context Protocol (MCP) is transforming how AI systems interact with data sources. This guide clarifies common misconceptions to help you implement MCP effectively in your applications.

"I'll handle MCP calls exactly like REST or gRPC—fire off a request, grab the response, done."

The real deal

Wrong approach. Dead wrong.

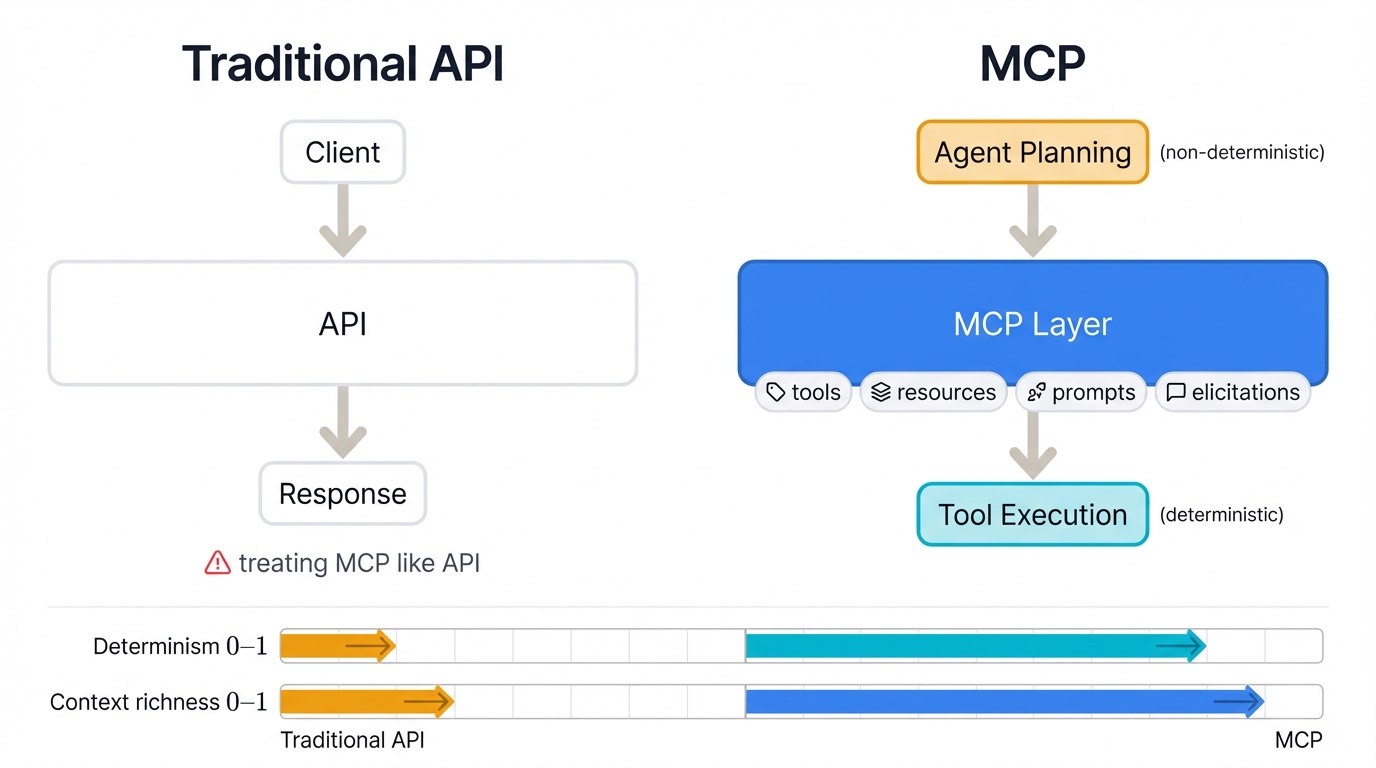

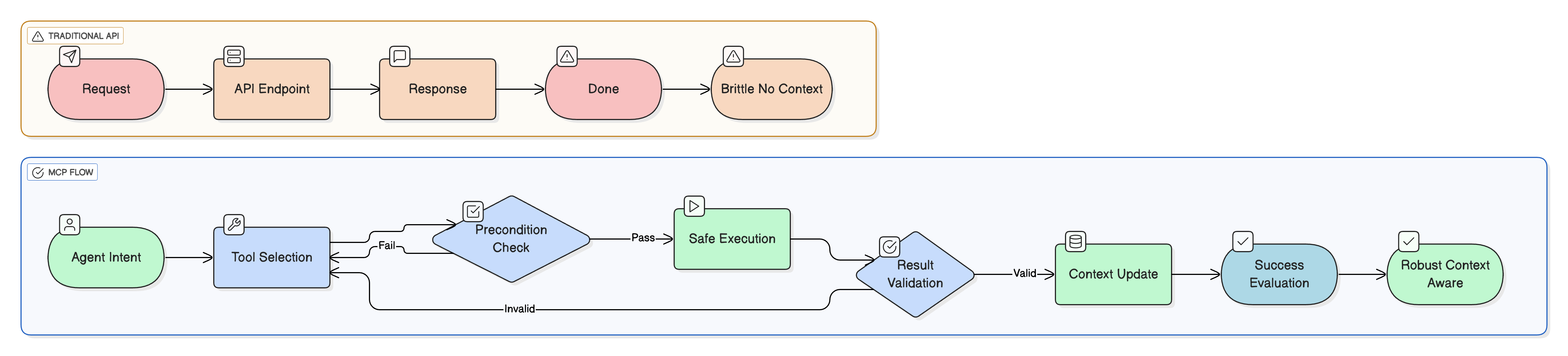

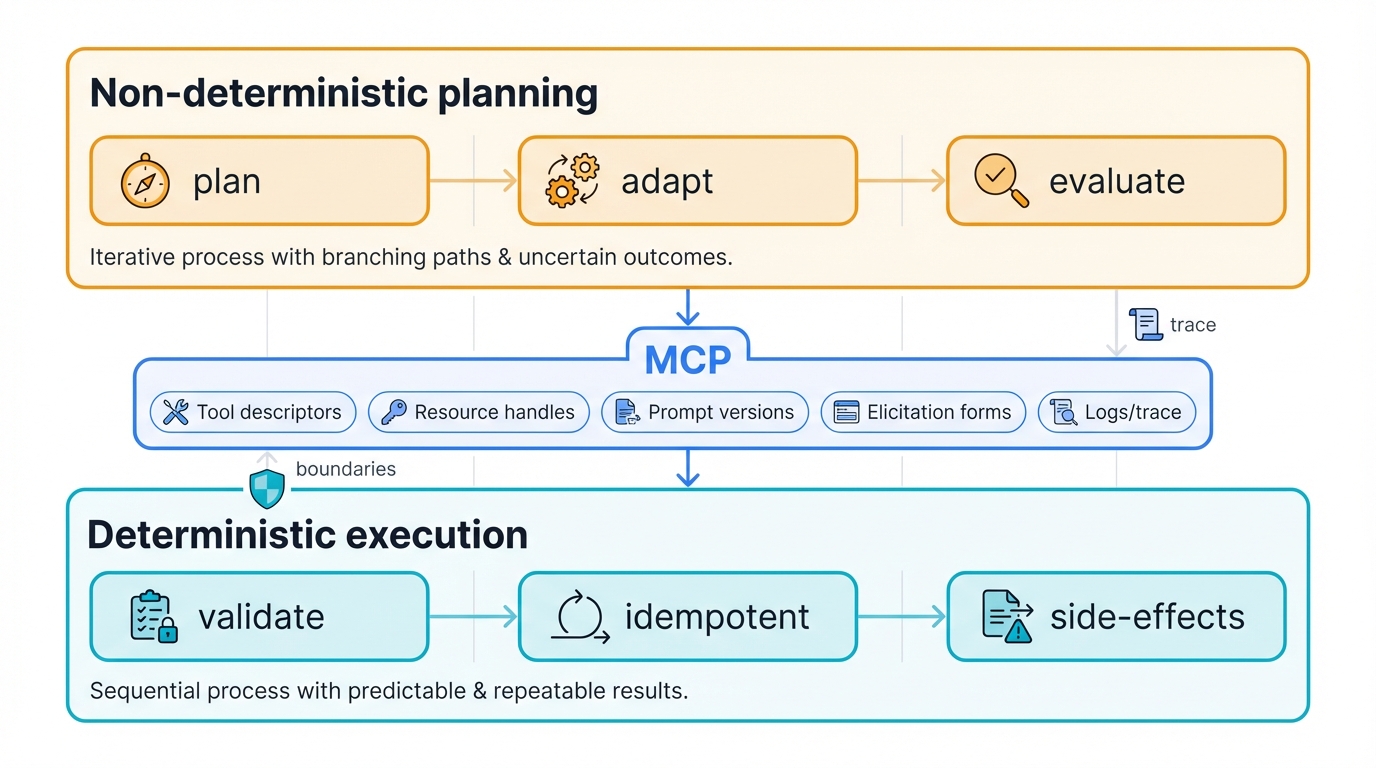

MCP doesn't replace traditional APIs—it serves a completely different purpose, specifically designed for language models to handle tools, manage user intentions, and exchange context dynamically. Sure, APIs and RPCs might power the underlying mechanics, but MCP's real mission is to bridge the gap between unpredictable agent behavior and safe, reliable tool execution.

Why does this mix-up happen?

Habit drives this mistake. You know APIs cold—they're straightforward, testable, comfortable. Quick demos reinforce the confusion with their simple "call tool, get result" flows that look identical to HTTP requests, and before you know it, you're treating MCP like just another endpoint to ping.

What you actually get with MCP

- Tool configurations that transcend simple endpoints—they encode the model's intentions, capabilities, and the semantic meaning of what those tools actually accomplish

- Rich contextual layers including prompts, resource discovery mechanisms, and elicitation patterns that actively shape how the model behaves and makes decisions

- A clear, enforceable boundary separating the non-deterministic planning layer from the deterministic execution layer, preventing failures from contaminating your production systems

Smart ways to design this

- Wrap your existing business APIs inside an MCP layer. Keep those core APIs stable and unchanged, but layer on top MCP tool descriptions that explicitly define preconditions, success criteria, and the decision space the model needs to navigate.

- Make the execution step completely deterministic. Treat tool invocation as idempotent operations—validate every input from the model's planning phase, verify constraints hold, and fail fast with clear error signals when something's off.

Things to steer clear of

- Never expose your core business APIs as MCP tools without protective validation layers—state mutations without safety nets will destroy production reliability

- Never assume the model will perfectly follow schemas on the first try—build in validation, retries, and model-aware error handling that accounts for how LLMs actually behave under real conditions

Quick checklist

- [ ] Define explicit preconditions and postconditions for every single tool in your system

- [ ] Return machine-verifiable results so the agent can programmatically determine success or failure

- [ ] Log the complete flow—plan to tool invocation to result—enabling full replay and audit capabilities

Misconception #2: "Tools Are Pretty Much Agents"

What people often say

"If it takes input and produces output, it's basically an agent, right?"

The real deal

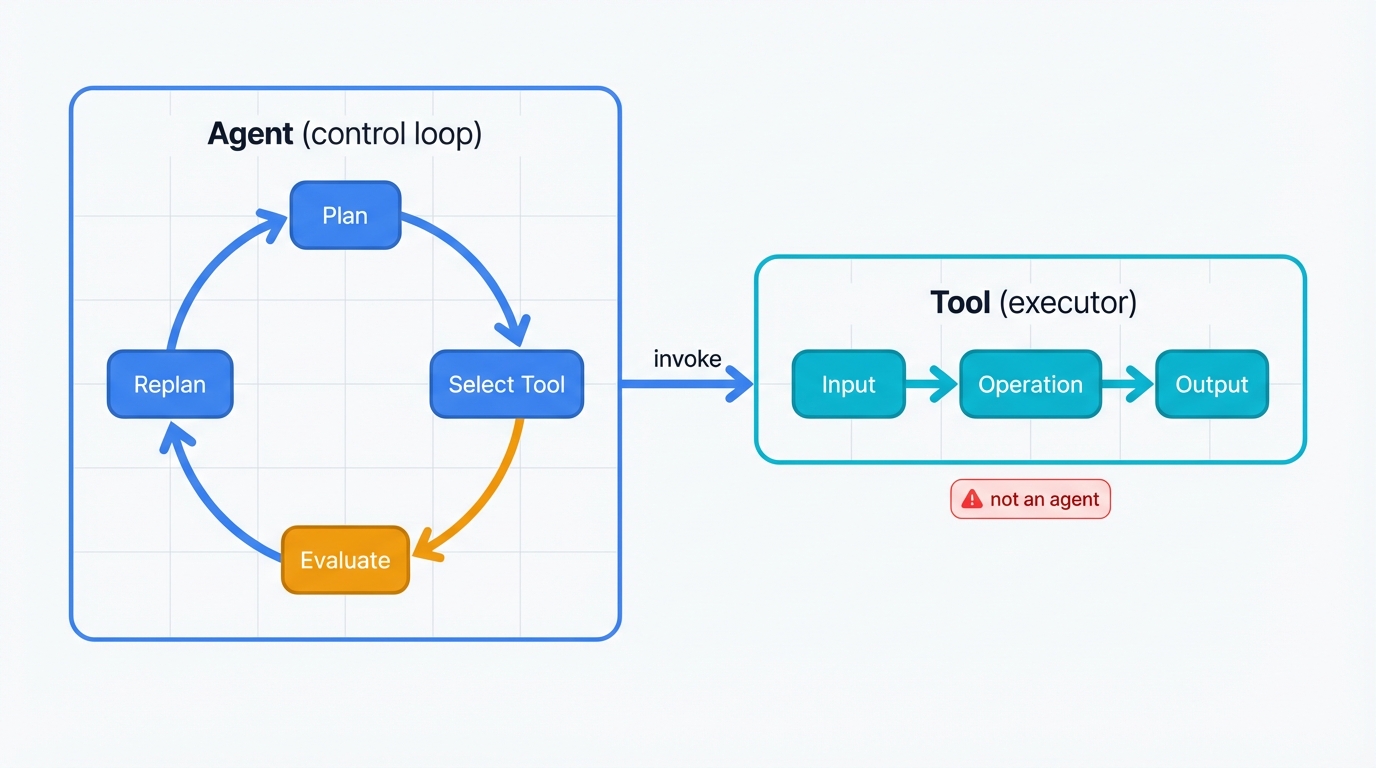

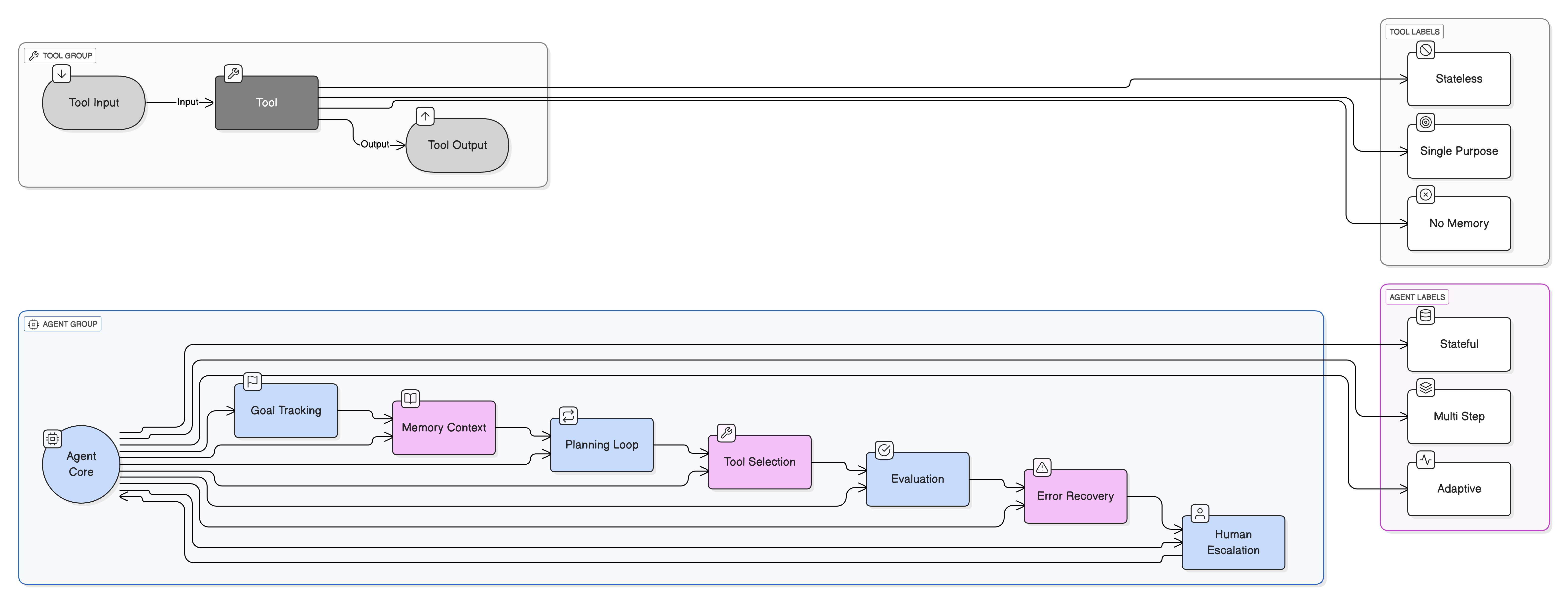

Not even close. Tools execute tasks—they receive instructions, perform operations, and return results. Period. Agents operate at a fundamentally different level: they plan multi-step workflows, adapt those plans when reality doesn't match expectations, and continuously evaluate progress toward goals using sophisticated feedback loops.

Agents keep iterating until they achieve their objective. Tools? They don't have that capability—they're stateless executors without the cognitive architecture for autonomous decision-making or goal-directed behavior.

Why does this mix-up happen?

Unix philosophy deserves partial blame. Chaining simple tools creates emergent intelligence—pipe grep into awk into sed, and suddenly you've got sophisticated text processing. Modern LLM demos amplify this confusion by making single tool calls look like they solve entire problems, blurring the fundamental distinction between execution primitives and intelligent orchestration.

What really sets agents apart from tools

- Genuine agency means maintaining goal state, dynamically replanning when circumstances change, and implementing robust recovery strategies when failures occur

- Real evaluation mechanisms with quantifiable success metrics and confidence scores, not just binary status codes that tell you nothing about quality

- Persistent memory and evolving context built up over multiple interactions, including versioned prompts, accumulated knowledge, and learned preferences that improve over time

Smart ways to design this

- Keep the main control loop firmly with the agent—that's where it selects the next tool, formulates the reasoning for that choice, and maintains the overall execution strategy

- Define concrete success criteria so the agent knows exactly when to terminate successfully, when to retry with different parameters, or when to escalate to human oversight

- Leverage MCP for structured human input when facing ambiguity or low confidence—the protocol provides elegant elicitation mechanisms for gathering clarification without breaking the agent's flow

Things to steer clear of

- Never cram all the planning logic into a single tool invocation—you'll lose the ability to observe, intervene, or understand the agent's decision-making process

- Never judge agent success purely by tool execution speed—latency optimization is meaningless if the agent makes the wrong decisions or fails to achieve its actual objectives

Quick checklist

- [ ] Explicitly document the agent's goal, operational constraints, and termination conditions

- [ ] Implement retry logic with exponential backoff and tool-specific error handling strategies

- [ ] Capture detailed traces for every iteration of the control loop, enabling complete observability into agent behavior

Misconception #3: "MCP Is All About the Tools"

What people often say

"MCP boils down to defining tools with JSON schemas for inputs and outputs."

The real deal

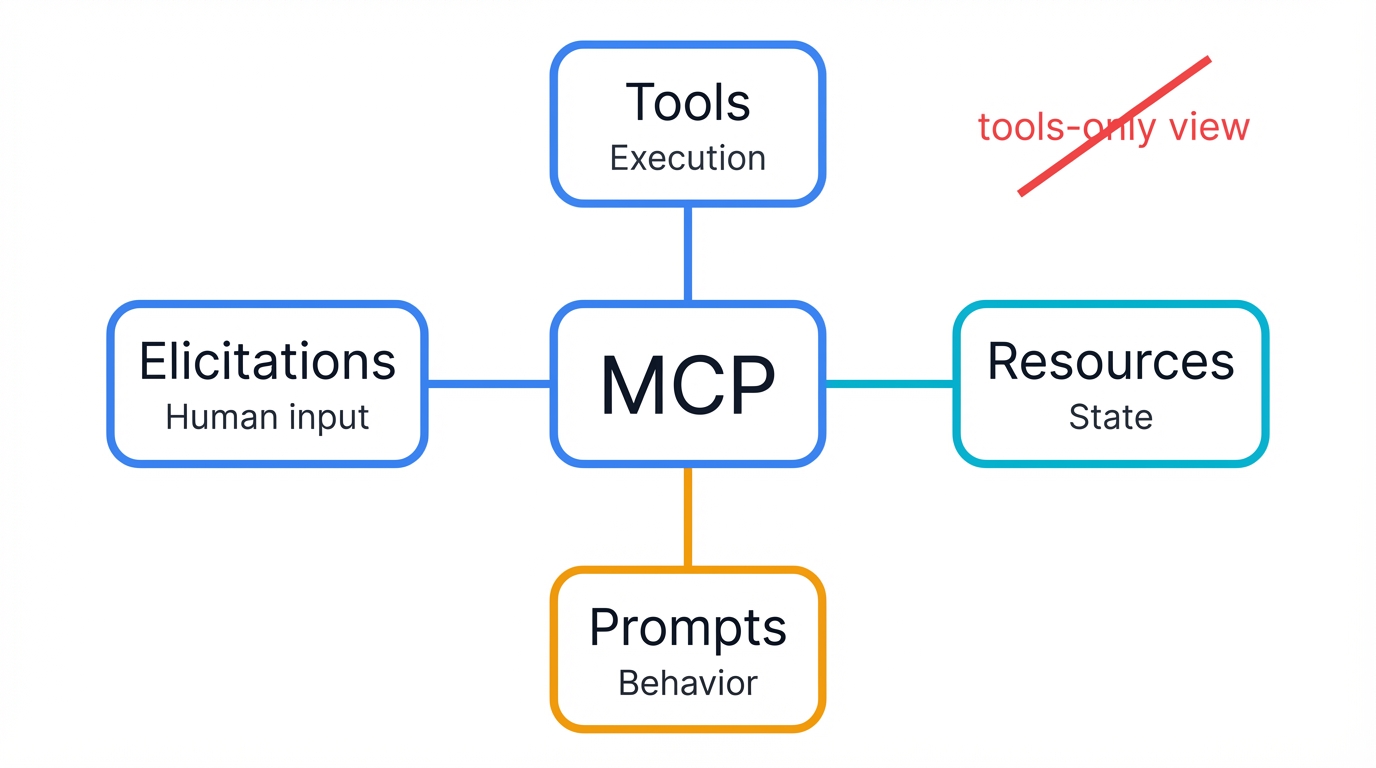

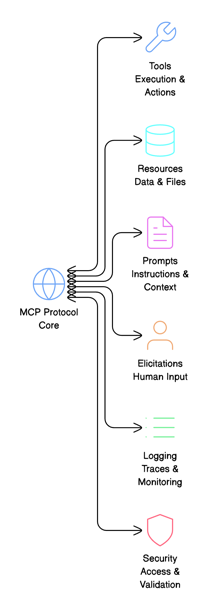

Tools matter, sure. But they're one piece of a much richer ecosystem. MCP provides resources for persistent state management, prompts as versioned behavioral templates, and elicitations for structured human interaction—all critical components for handling complex, context-heavy workflows that simple tool definitions can't address.

Why does this mix-up happen?

Early adopters zeroed in on tools and ignored everything else in the specification. Documentation examples perpetuate this narrow view by treating MCP as "natural language over APIs," which keeps things simple but misses the protocol's full power for building production-grade agentic systems.

What you're missing if you ignore the extras

- Resources enable agents to read, write, and reference structured data like files, databases, or documents across multiple steps, maintaining state continuity throughout complex workflows

- Prompts become versioned, testable, and trackable artifacts that you can deploy, A/B test, and roll back—treating behavioral instructions with the same rigor as application code

- Elicitations bring humans into the decision loop with structured, context-aware questions when the agent encounters ambiguity, then seamlessly resume autonomous operation once clarity is achieved

Smart ways to design this

- Build adapters for your resources—integrate knowledge bases, file systems, or ticketing systems with proper permissions, lifecycle management, and access control policies

- Manage prompts like production code—store them in a versioned registry where you can track changes, deploy updates, run automated tests, and instantly revert when prompt modifications degrade agent performance

- Design human-in-the-loop checkpoints—identify decision points where uncertainty exceeds acceptable thresholds, pause for structured user input, then continue execution with the additional context

Things to steer clear of

- Never use MCP as merely a conversational wrapper around backend services without leveraging resources and prompts—you're throwing away the protocol's most powerful capabilities

- Never hardcode prompts directly into application code—they'll drift, degrade, and become impossible to test or iterate on as your system evolves

Quick checklist

- [ ] Configure at least one readable resource and one writable resource that agents can leverage for state persistence

- [ ] Register all prompts with unique identifiers and semantic version numbers for change tracking

- [ ] Map out complete elicitation flows for scenarios where user input resolves ambiguity or low-confidence decisions

Tying It All Together: The Bridge That Makes AI Dependable

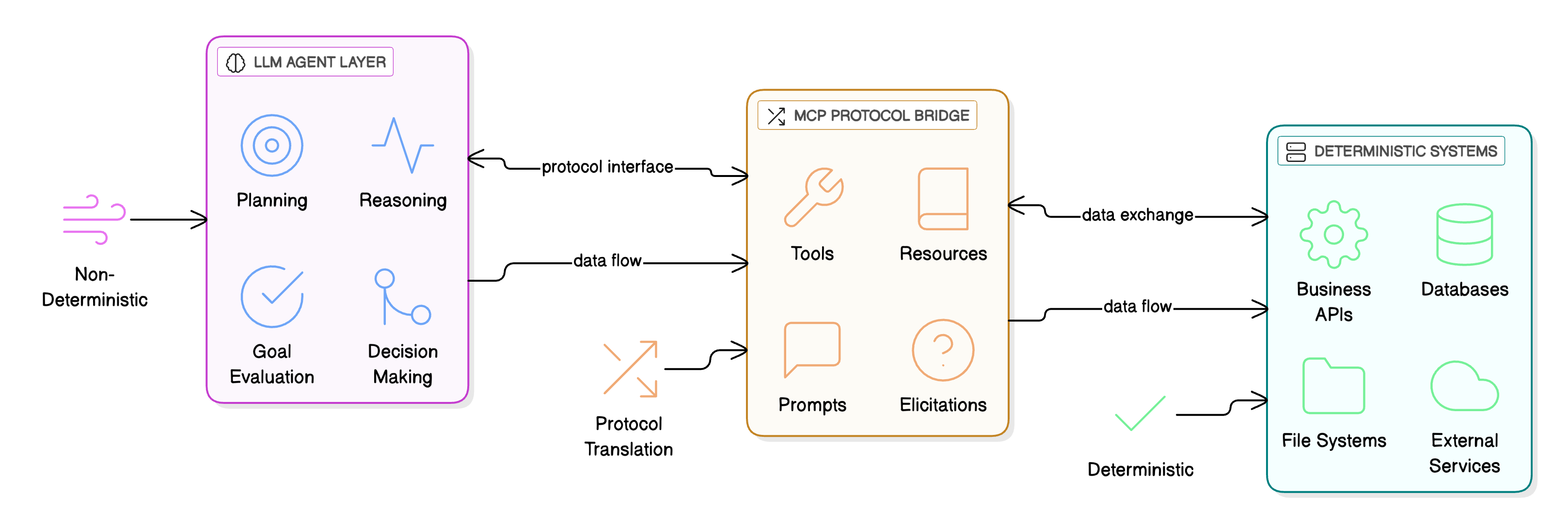

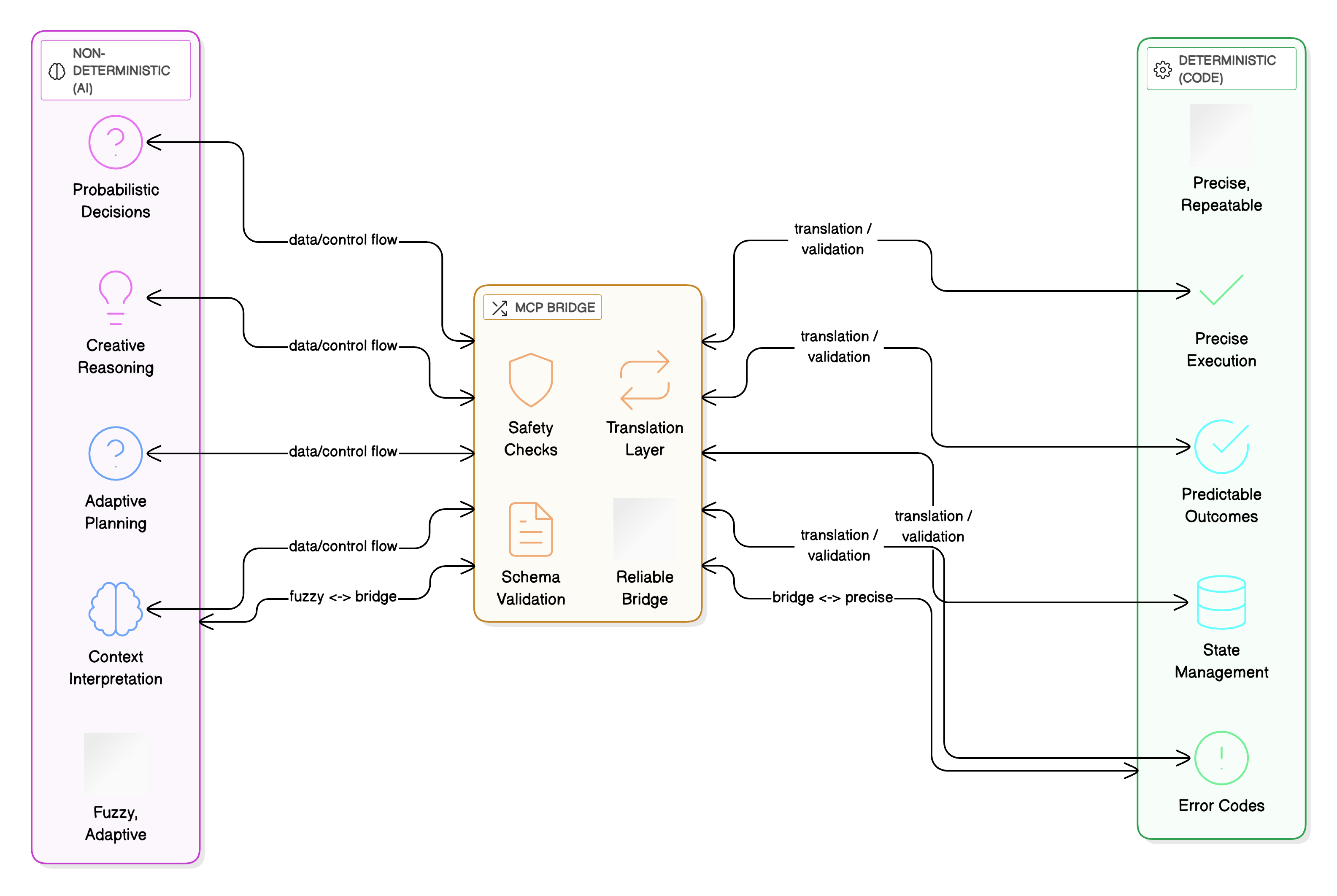

Visualize your architecture in layers. Two distinct worlds exist in your system, and they must remain separated by design.

- The non-deterministic layer handles planning—the model selects tools, formulates strategies, adjusts plans mid-flight, and evaluates outcomes probabilistically

- The deterministic layer executes operations—it validates inputs rigorously, enforces idempotency guarantees, and controls side effects with precision

MCP bridges these layers. It uses tools for execution primitives, resources for state management, prompts for behavioral control, and elicitations for human guidance—all while maintaining comprehensive logs and enforcing security boundaries that keep your production systems stable and auditable even when the AI layer makes unexpected decisions.

For better visibility and control

- Trace the complete execution path from initial plan to prompt selection to tool invocation to resource modifications—every step captured, timestamped, and queryable

- Version your prompts and tool configurations with the same discipline you apply to application code

- Enforce boundaries at the MCP layer for access control, rate limiting, and approval workflows that protect critical systems from agent mistakes

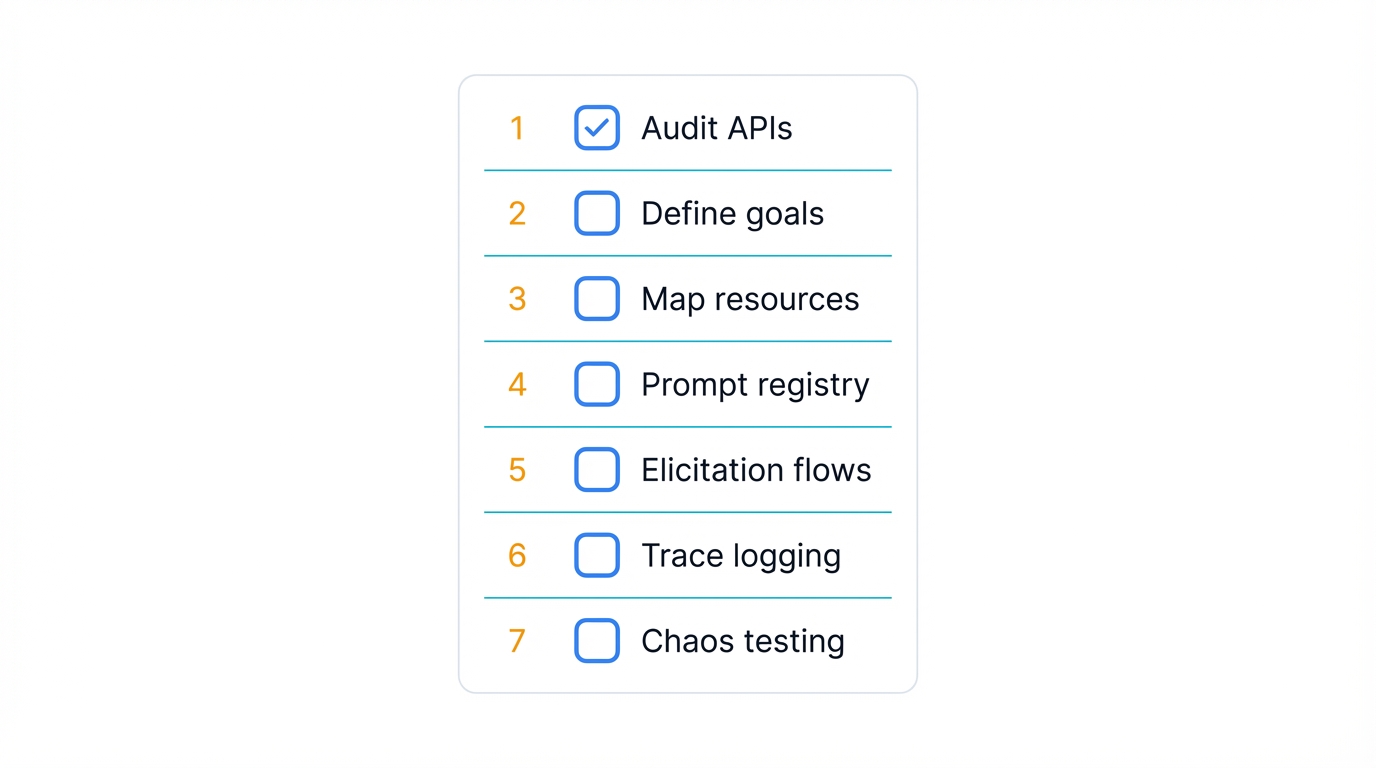

How to Get Started: A Practical Guide

- Audit your existing APIs and wrap them in MCP tool definitions that explicitly encode preconditions, postconditions, and the semantic meaning of each operation

- Define crystal-clear goals and success metrics for your agents, along with explicit termination conditions that prevent infinite loops and resource exhaustion

- Map out resource requirements agents will need, establishing read/write permissions, retention policies, and lifecycle management for persistent state

- Establish a prompt registry with version control, automated testing, and rollback capabilities for behavioral modifications

- Design elicitation flows for low-confidence scenarios where human input improves decision quality

- Implement comprehensive trace logging with session replay capabilities for post-mortem analysis and compliance requirements

- Run chaos testing scenarios where tools fail randomly—verify agents recover gracefully or escalate appropriately without data corruption

Watch Out for These Traps and How to Dodge Them

Common Pitfalls

- Treating MCP like REST: Move beyond simple status codes—implement real success verification with semantic validation and confidence scoring

- Relying on single-shot agent calls: Build iterative loops with retry logic, backoff strategies, and human escalation paths instead

- Skipping resource infrastructure: Without persistent context and state management, agents just spin in circles solving the same problems repeatedly

- Letting prompts become technical debt: Version them rigorously and test variations systematically—treat them as critical system components

- Operating in complete darkness: Without detailed traces, debugging becomes guesswork and building trust in agent decisions becomes impossible

Additional Misconceptions to Avoid

MCP replaces Retrieval-Augmented Generation entirely: Many developers think MCP handles all data retrieval needs, making vector searches and document retrieval obsolete. Dead wrong—MCP complements RAG by standardizing how retrieval tools get exposed to models, but you still need those RAG mechanisms for grounding information in prompts and handling unstructured data effectively. Build retrieval as an MCP tool and layer it strategically where context augmentation improves outcomes.

MCP makes all LLMs interchangeable: The fantasy that any model performs identically once MCP abstracts the interface. Reality hits hard—models vary drastically in tool interpretation, prompt following, and invocation decisions. Claude might nail zero-shot tool use while other models need extensive few-shot examples and fine-tuning. MCP provides interface consistency, not behavioral uniformity, so rigorously test across different LLMs before deploying.

MCP only works for complex agentic systems: Some assume MCP ties exclusively to multi-step agent workflows and offers nothing for simpler architectures. Wrong—even standalone models benefit from MCP for direct tool or data access, like querying databases without complex orchestration. This broadens applicability to quick integrations and lightweight use cases where full agent loops are overkill.

MCP represents groundbreaking new technology: The hype machine spins MCP as revolutionary innovation when it's fundamentally a standardization protocol for existing practices. It builds on tool-calling patterns LLMs have used for years, just making them more universal, secure, and maintainable—valuable for sure, but not a technological breakthrough that changes the fundamental capabilities of AI systems.

Wrapping Up

Stick with that "API mindset," and you'll build fragile prototypes that work once in demos and collapse spectacularly in production. Recognize the truth instead: tools are reliable executors, agents are intelligent orchestrators, and MCP's full power—tools, resources, prompts, and elicitations working together—creates the bridge between intelligent but unpredictable AI and the rock-solid deterministic systems your business depends on.

Example Implementation

# Example: Model training with security considerations

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

def train_secure_model(X, y, validate_inputs=True):

"""Train model with input validation"""

if validate_inputs:

# Validate input data

assert X.shape[0] == y.shape[0], "Shape mismatch"

assert not np.isnan(X).any(), "NaN values detected"

# Split data securely

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42, stratify=y

)

# Train with secure parameters

model = RandomForestClassifier(

n_estimators=100,

max_depth=10, # Limit to prevent overfitting

random_state=42

)

model.fit(X_train, y_train)

score = model.score(X_test, y_test)

return model, score