Part I: Why Your AI Needs to Think in Networks

Your AI excels at images. It crushes text. It masters structured data. But here's what it misses: relationships.

Key Concept: Understanding this foundational concept is essential for mastering the techniques discussed in this article.

Think about your business data. Social connections drive purchases. Transaction networks expose fraud. Molecular structures predict drug effectiveness. Supply chains forecast disruptions. Traditional AI treats each point separately. Graph Neural Networks (GNNs) focus on what matters: the connections that make data meaningful.

Machine learning has chased linear sequences and pixel grids for decades, yet the world's most valuable data exists as interconnected networks. GNNs mark a fundamental shift—from recognizing patterns to understanding relationships—and this shift transforms AI capabilities across every industry you can imagine.

What Makes Graph Data Different (And Why It Matters for Your AI)

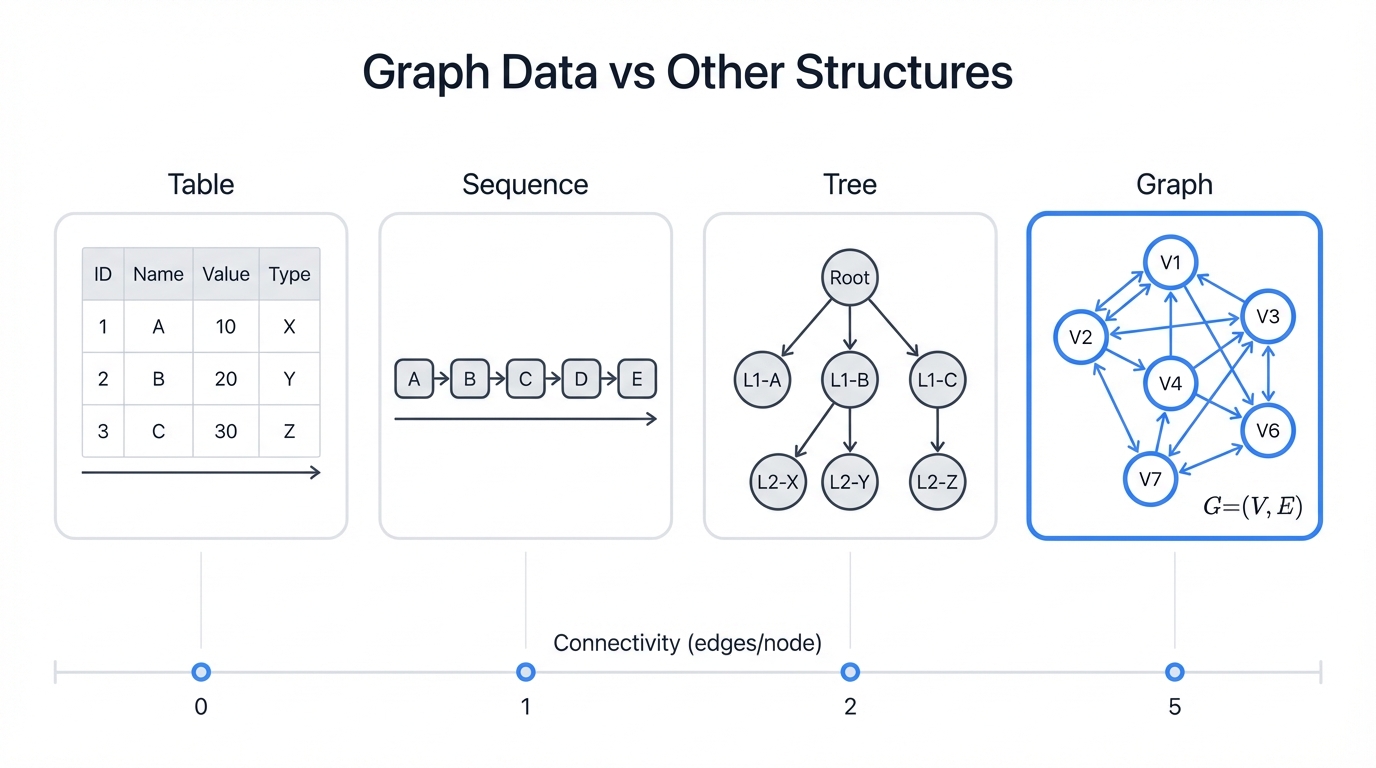

Graphs capture reality. Your database stores rows and columns. Arrays organize sequences. Trees build hierarchies. But graphs? Graphs model the messy, interconnected nature of how things actually relate to each other.

The math is simple: G=(V,E), where V represents vertices (nodes) and E represents edges (connections). This simplicity hides profound complexity—because graphs can represent any relationship structure imaginable.

The Three Building Blocks That Change Everything

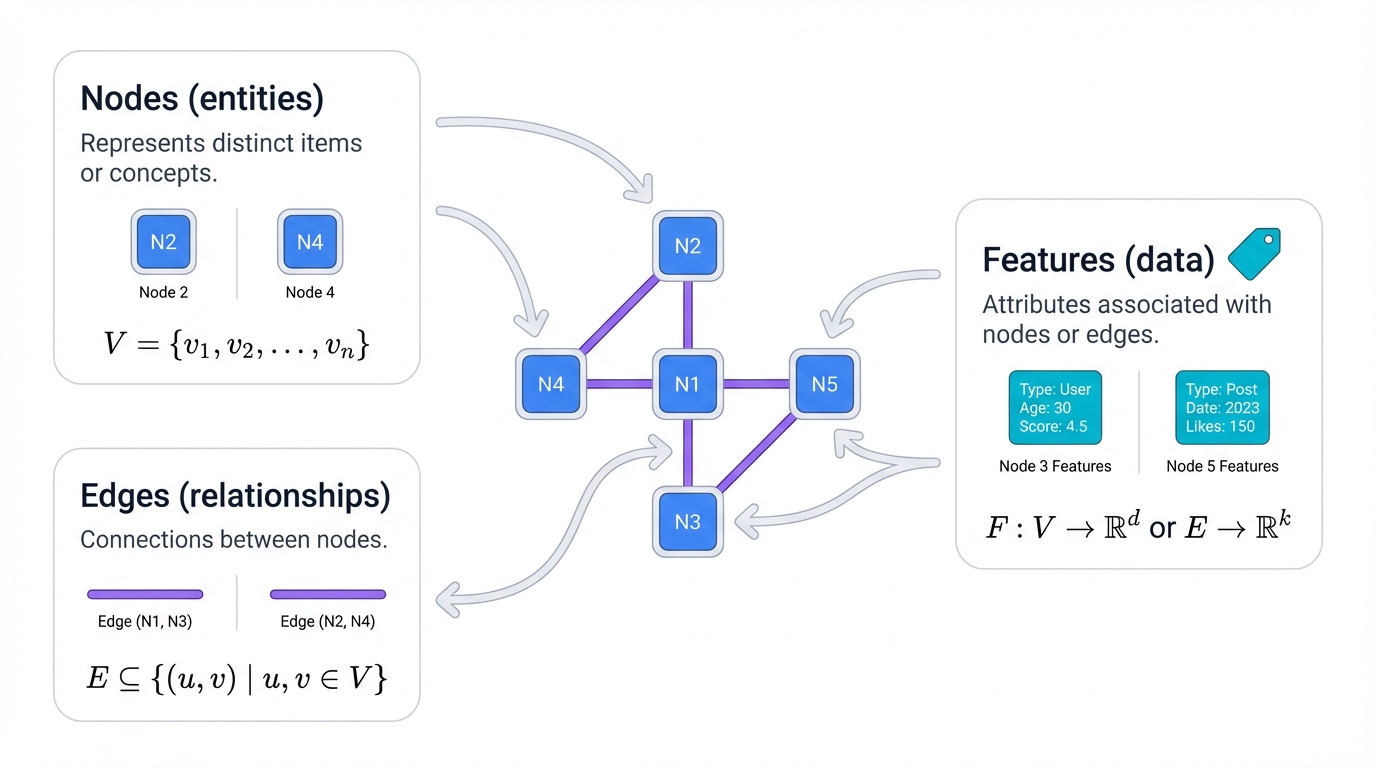

Nodes (Your Entities): Each node represents something critical in your domain. In fraud detection, nodes are customers, merchants, accounts. In supply chains, they're suppliers, manufacturers, distributors. In social platforms, they're users, posts, groups. Every node carries information—demographics, transaction history, content features.

Edges (Your Relationships): Edges show how entities interact. Financial transactions connect customers and merchants. Retweets link users. Chemical bonds join atoms. These connections carry information—transaction amounts, interaction frequency, relationship strength.

Features (Your Intelligence): Both nodes and edges hold detailed data. Your customer node includes credit score, location, purchase history. Your transaction edge contains amount, timestamp, payment method. GNNs use these features as starting points, then enhance them with relationship context.

Here's the breakthrough: Traditional AI analyzes features in isolation, but GNNs recognize that behavior patterns emerge from who you interact with, how often, and under what circumstances.

Part II: How GNNs Learn From Relationships

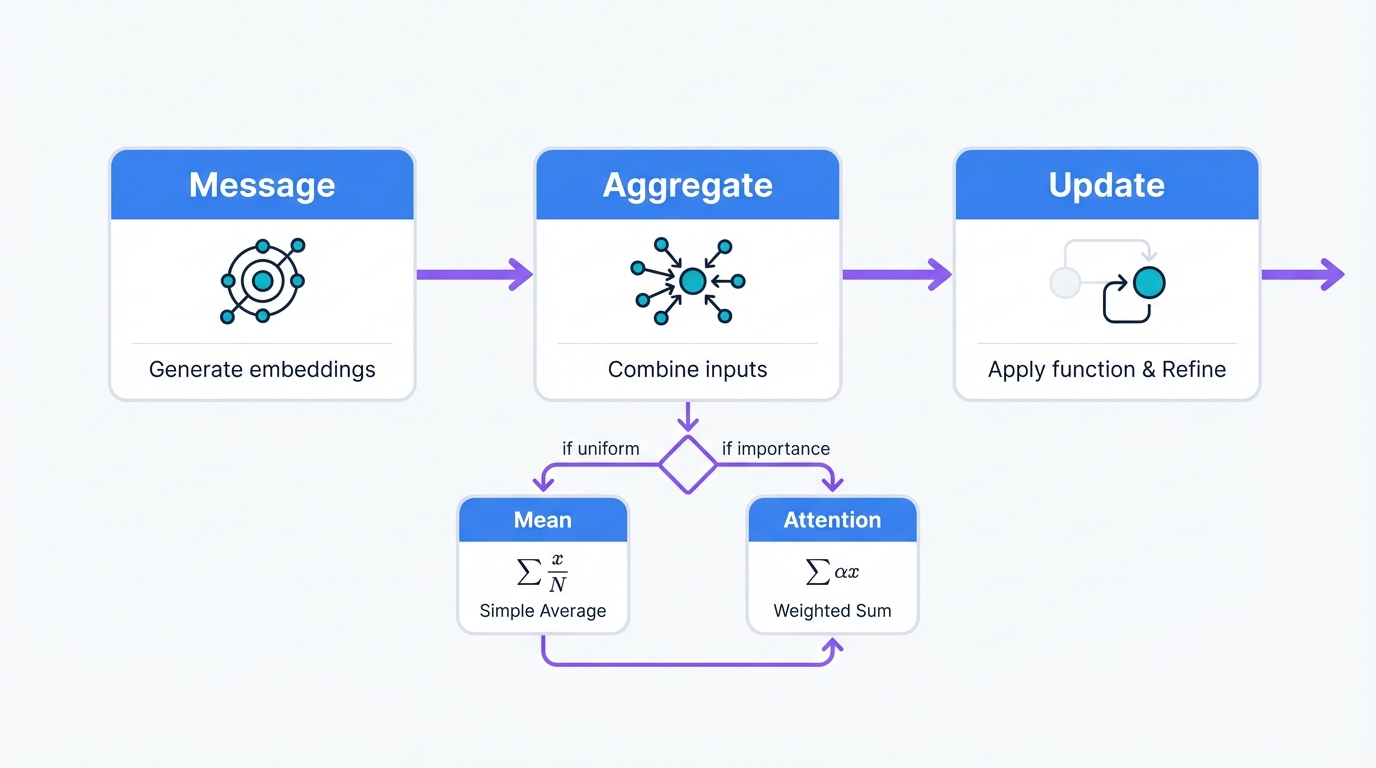

GNNs achieve this through message passing. It's elegant. It's powerful. Instead of nodes learning alone, they literally send information to their neighbors, creating collaborative intelligence that emerges from the network structure itself.

The Three-Step Dance: How Information Flows Through Your Network

Message passing happens in three coordinated steps that repeat across multiple layers:

Step 1: Message Creation

Each node crafts personalized messages for its neighbors. These aren't generic broadcasts—each message is tailored for the recipient based on the edge features connecting them, creating communication that respects the unique nature of each relationship.

Step 2: Message Aggregation

Each node collects incoming messages from neighbors. It combines them intelligently. Different GNN architectures use different strategies—some average messages, others weight them by importance using attention mechanisms, and some deploy sophisticated pooling operations.

Step 3: Node Update

Each node updates its understanding. It combines its previous state with aggregated neighborhood information. This creates a new, more informed representation that fuses local features with network context.

How Your AI's Understanding Expands Across the Network

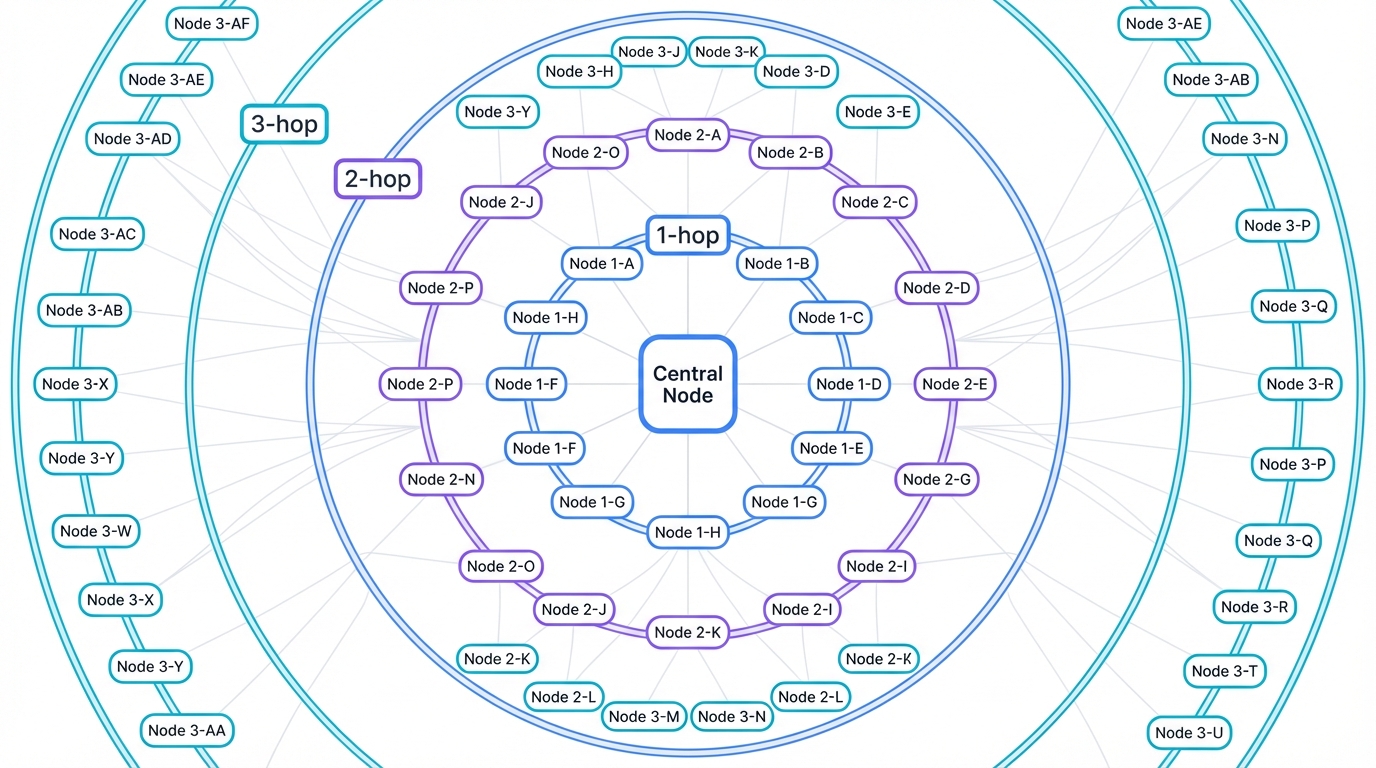

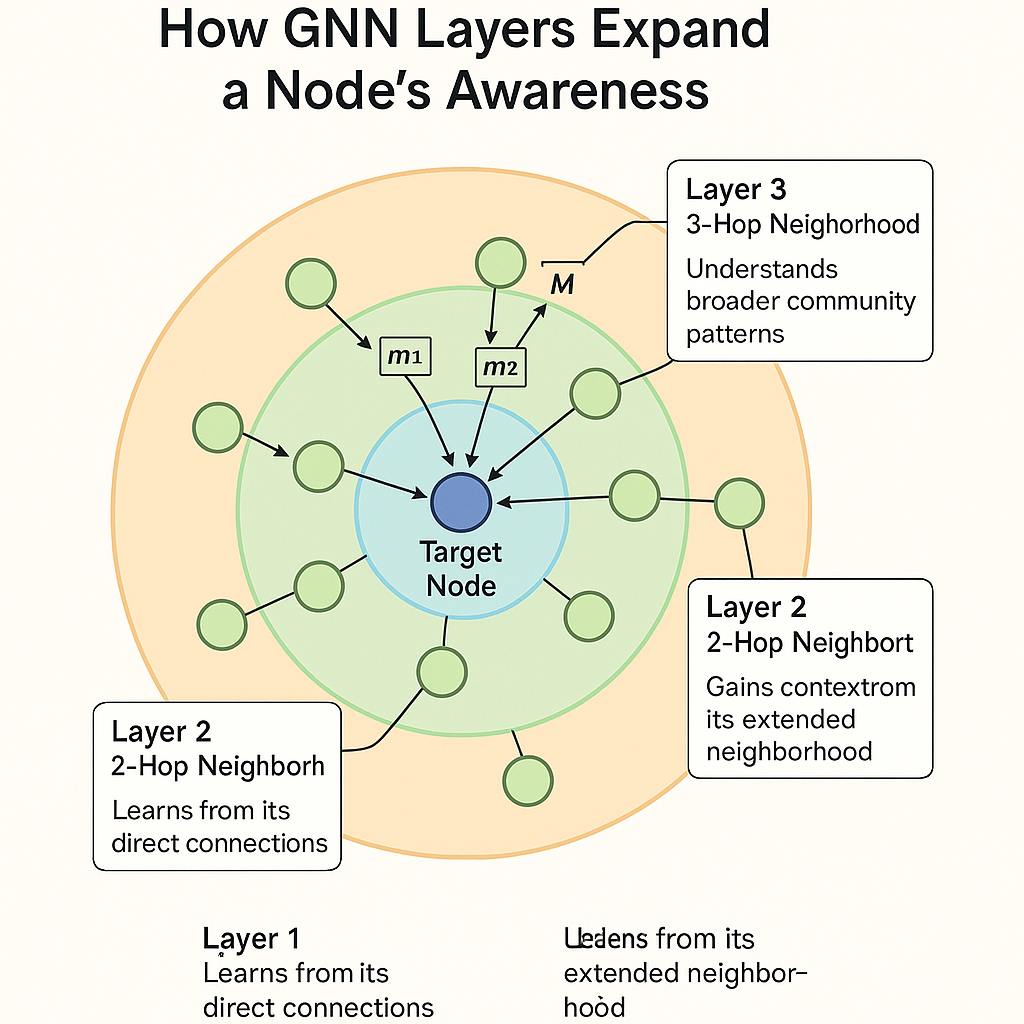

Each GNN layer extends information flow through progressive neighborhood aggregation. Layer 1 lets each node learn from direct neighbors via 1-hop connections—gathering immediate relationship data and local context. Layer 2 enables information from neighbors-of-neighbors to flow through 2-hop connections—helping nodes understand their extended neighborhood. Layer 3 spreads knowledge to the third degree of separation via 3-hop connections—capturing broader network context and distant relationships.

Part III: Security Vulnerabilities in Graph Neural Networks

GNNs rely on network structure. This creates unique security vulnerabilities. Traditional AI security approaches don't address these risks. Understanding these vulnerabilities is crucial for building robust AI systems that won't be exploited by adversaries who understand how to manipulate network topology.

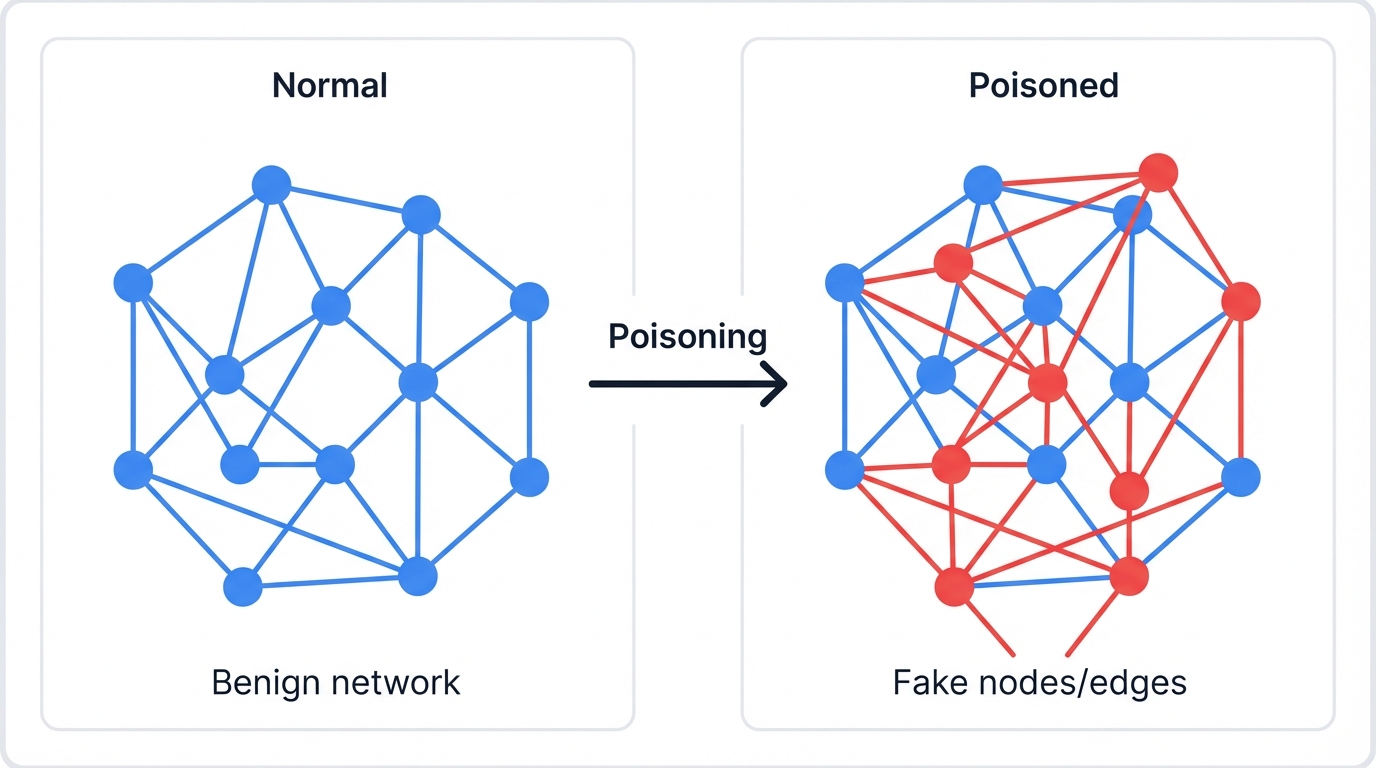

Graph Poisoning: When Bad Actors Manipulate Your Network Structure

Graph poisoning attacks manipulate the network structure to influence your AI's decisions. Attackers add fake nodes. They create false edges. They modify existing relationships to bias your model's output, exploiting the very feature that makes GNNs powerful—their reliance on network structure.

Real-world implementation: Creating fake social media accounts, establishing shell companies in business networks, generating synthetic user profiles in recommendation systems.

Case Study: How Criminals Game Financial AI Systems

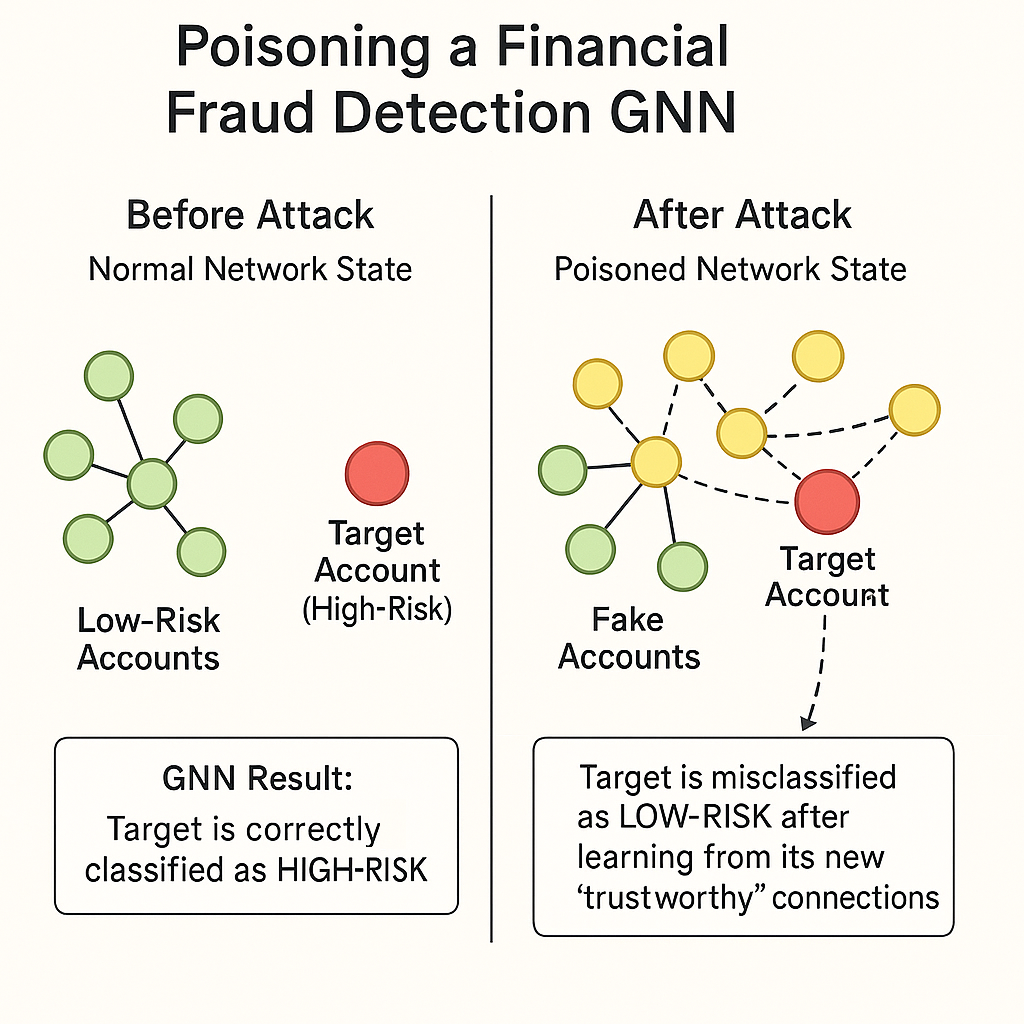

Scenario: Your bank uses a GNN to assess customer risk based on transaction network patterns. A high-risk individual wants to appear low-risk. They need to avoid fraud detection.

The Attack Strategy:

- Create multiple legitimate-looking shell accounts that mimic real customer behavior patterns

- Establish transaction patterns that mirror low-risk behavior over extended periods

- Gradually build trust relationships within the network through consistent, normal-appearing activity

- Use these cultivated relationships to "vouch for" the high-risk individual through network proximity

Conclusion: The Future of Connected AI

Best Practice: Following these recommended practices will help you achieve optimal results and avoid common pitfalls.

Graph Neural Networks represent a fundamental shift in how AI processes information. By focusing on relationships rather than individual data points, GNNs unlock insights that traditional methods miss entirely—revealing patterns hidden in the connections between entities, not just in the entities themselves.

With this power comes responsibility. The security implications of network-based AI demand careful consideration and robust defense mechanisms. Organizations implementing GNNs must balance the incredible potential of connected data with the real security risks inherent in this approach.

Moving forward, organizations that master both the opportunities and challenges of Graph Neural Networks will gain significant advantages in an increasingly connected world where relationships define reality more than isolated data points ever could.

This research is part of perfecXion.ai's ongoing commitment to AI security and infrastructure research. For the latest updates and detailed technical reports, visit our Knowledge Hub.