Table of Contents

Executive Summary

DoRA changes everything. Weight-Decomposed Low-Rank Adaptation sits at the cutting edge of Parameter-Efficient Fine-Tuning (PEFT), solving a problem that's plagued AI teams for years: how do you adapt massive foundation models without burning through your compute budget? Full fine-tuning works, sure. But it costs a fortune. DoRA offers something better—a principled approach that actually understands what happens during fine-tuning.

Here's the breakthrough. DoRA splits pre-trained weight matrices into two components: magnitude and direction. Think of it like separating the "how much" from the "which way." Each component gets fine-tuned separately—LoRA handles direction while magnitude trains directly—and the result is learning dynamics that mirror full fine-tuning with stunning accuracy. Performance improves across the board: large language models, vision-language models, you name it. Training stability? Rock solid.

Key Insight: DoRA keeps all of LoRA's efficiency wins. The trainable parameter increase? Negligible. Inference latency overhead? Zero. The learned components merge right back into the base model. It's efficiency without compromise.

This isn't just another alternative to LoRA—it's a superior replacement that costs nothing extra. The performance gap with full fine-tuning? Narrowed dramatically. This analysis dives deep into DoRA's technical foundation, maps the broader PEFT landscape, delivers hard comparisons against full fine-tuning, and gives you the strategic playbook for putting it to work.

The Adaptation Imperative: Context and Challenges of Fine-Tuning Large-Scale Models

Modern AI follows a simple recipe: pre-train massive foundation models, then adapt them for specific tasks. This "pre-training and fine-tuning" approach unlocks the vast knowledge encoded in billions of parameters, delivering state-of-the-art performance across countless applications. Sounds perfect, right? Not quite. The standard adaptation method—full fine-tuning—brings a crushing set of challenges that sparked an entire research revolution toward more efficient techniques.

The Prohibitive Cost of Full Fine-Tuning (FT)

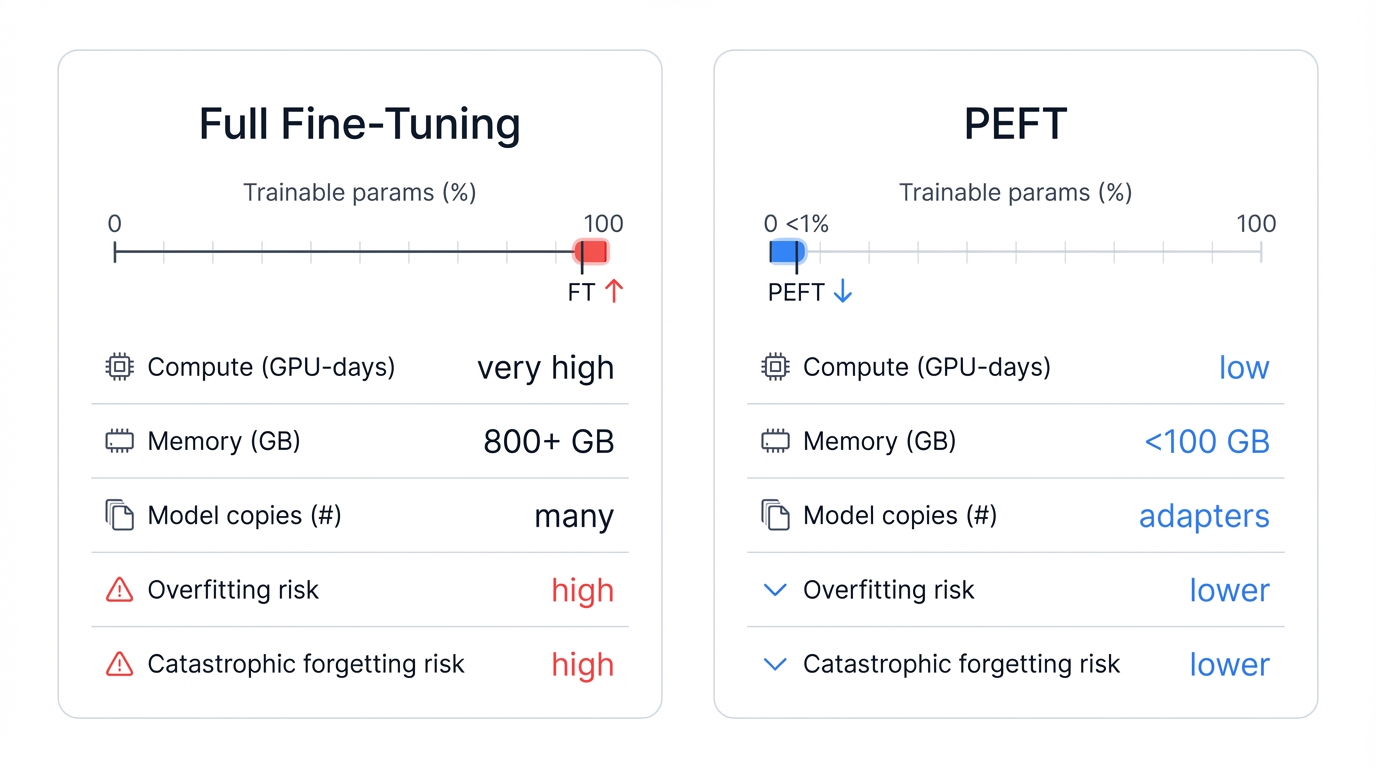

Full fine-tuning updates every single parameter in a pre-trained model using your task-specific data. Performance can be stellar. But practicality? That's another story entirely, because the resource requirements create massive barriers to entry.

Computational and Memory Demands

Let's talk numbers. Modern foundation models pack hundreds of millions to billions of parameters. Updating all those weights demands serious computational firepower—high-end GPUs or TPUs running for days or weeks. The memory footprint hits even harder. You're not just storing model weights; you need gradients, optimizer states, and activations for every trainable parameter. This multiplies memory requirements far beyond the model size itself. Fine-tuning a 65-billion-parameter model? That'll cost you over 800 GB of GPU memory. Only large-scale data centers can handle that kind of demand, making FT impractical for most organizations and researchers.

Storage Overhead

Here's a logistical nightmare: full fine-tuning modifies the entire weight set, producing a complete model copy for each downstream task. Imagine deploying specialized models for dozens of tasks—sentiment analysis, legal document summarization, customer support chatbots. You'd store dozens of multi-gigabyte checkpoints. Storage costs explode. Operational complexity skyrockets as you manage and serve these distinct models.

Risk of Catastrophic Forgetting

There's a deeper problem here. When you update all parameters on a narrow task, the model can overwrite the rich, generalized knowledge from pre-training. We call this "catastrophic forgetting." Performance degrades on tasks outside the fine-tuning domain, compromising the very generalizability that makes foundation models powerful in the first place.

Data Requirements and Overfitting

Full fine-tuning shines with large, high-quality datasets. Small datasets? That's where it falls apart. The model's billions of parameters can simply memorize training examples instead of learning underlying patterns. You get perfect training performance but terrible generalization to new data—the classic overfitting trap.

The Rise of Parameter-Efficient Fine-Tuning (PEFT)

Enter PEFT. The research community answered full fine-tuning's challenges with an entirely new class of methods that flip the paradigm on its head, prioritizing efficiency without sacrificing performance.

The core principle is elegant. Freeze most pre-trained parameters. Update only a tiny, targeted subset—either existing parameters or new ones added to the model. By training just a fraction of total parameters (often under 1%), PEFT slashes computational and memory requirements.

Economic Impact: This efficiency democratizes AI. Smaller companies, academic labs, and individual researchers with limited resources can now customize state-of-the-art foundation models. The barrier to entry crumbles.

But PEFT offers more than resource savings—it solves fundamental learning problems too. Constraining trainable parameters acts as built-in regularization. Models become less prone to overfitting on small datasets. Catastrophic forgetting? Minimized by preserving the vast majority of pre-trained weights. PEFT isn't just cheaper; it's often smarter and more robust, addressing core limitations that plague massive neural network adaptation.

An In-Depth Technical Analysis of DoRA (Weight-Decomposed Low-Rank Adaptation)

DoRA is NVIDIA Research's answer to LoRA's limitations. Built directly on Low-Rank Adaptation's foundation, it delivers superior performance and rock-solid training stability. But to grasp DoRA's breakthrough, we first need to understand what came before.

Foundational Precursor: A Primer on Low-Rank Adaptation (LoRA)

LoRA dominates the PEFT landscape. Its effectiveness and elegant simplicity won over the community. The design leverages a key empirical observation: weight matrix changes during task adaptation typically have low intrinsic rank.

Core Mechanism

LoRA takes a shortcut. Instead of learning the full, high-dimensional delta weight matrix (ΔW)—the difference between fine-tuned weights (W') and original pre-trained weights (W₀)—it approximates using two tiny, low-rank matrices. The math: W' = W₀ + ΔW, where ΔW = BA. For a d×k matrix W₀, matrix B is d×r and matrix A is r×k. The rank r? Much smaller than d and k (r ≪ min(d,k)).

Implementation

Watch what happens during fine-tuning. The original weight matrix W₀ freezes—no gradient updates. The forward pass changes to y = W₀x + BAx. Only the new low-rank matrices B and A get trained. Parameter reduction is massive. Full fine-tuning updates d² parameters for a d×d matrix. LoRA? Just 2dr parameters.

Inference Efficiency

Here's LoRA's killer feature: zero inference overhead. After training completes, compute BA and add it directly to the original weights: W' = W₀ + BA. This merged matrix W' runs inference without architectural changes or extra computation. Production systems demand speed—LoRA delivers.

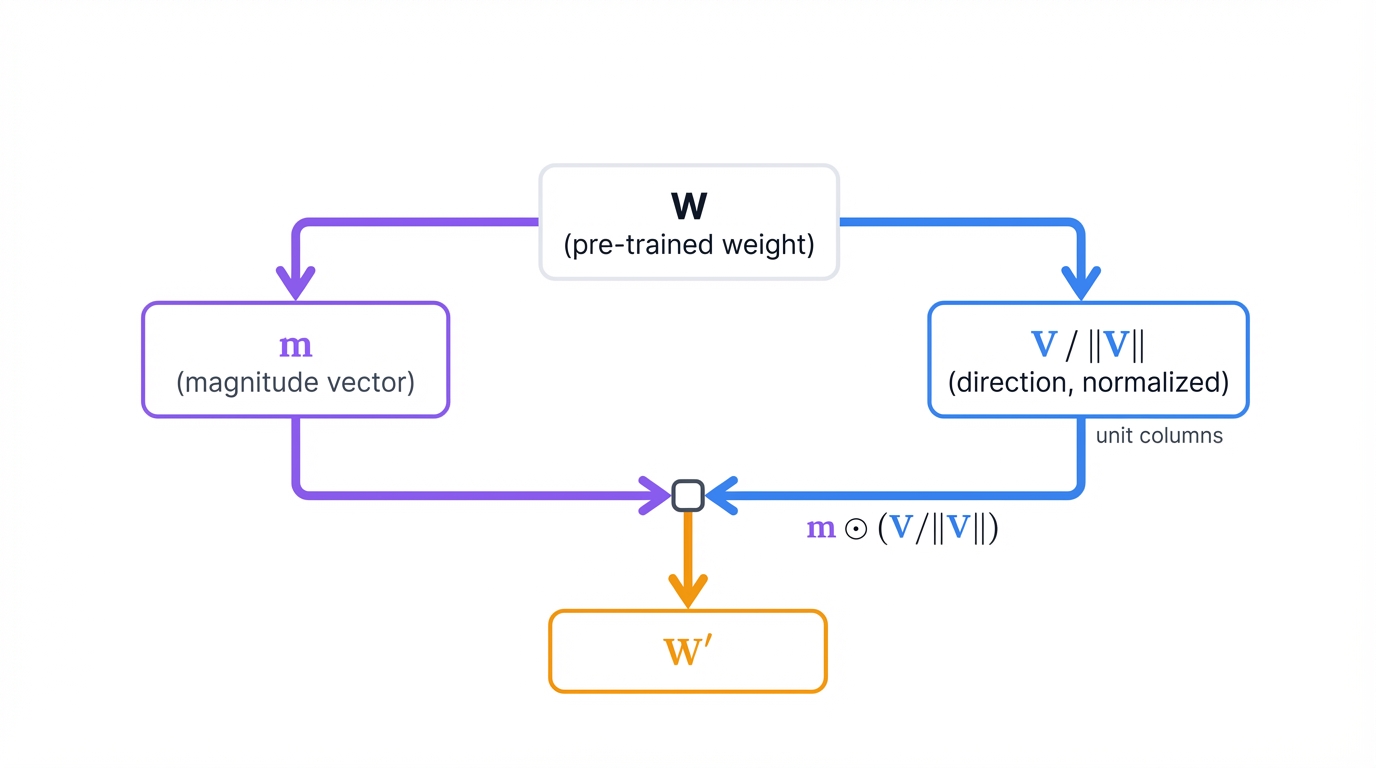

The Core Innovation: Decomposing Weights into Magnitude and Direction

LoRA works brilliantly. But analysis exposed a weakness—its weight update approximation is too blunt. DoRA's innovation emerges from deeper investigation into full fine-tuning's learning dynamics, revealing distinct adjustments to magnitude (length) and direction of weight vectors. DoRA explicitly models and decouples these components.

Theoretical Underpinnings

The central hypothesis? Separate the update process for magnitude and direction. PEFT can then faithfully replicate full fine-tuning's nuanced learning patterns, driving better performance. This transcends LoRA's monolithic ΔW approximation, achieving granular, principled adaptation.

Mathematical Formulation

DoRA re-parameterizes pre-trained weight matrix W into magnitude and direction. The decomposition:

W = m * (V / ||V||)

# Where:

# m = magnitude vector (learnable)

# V = directional matrix

# ||V|| = vector-wise norm of each columnBreaking it down:

- V is the directional matrix—same dimensions as W

- m is the magnitude vector—learnable elements representing each column vector's magnitude in W

- ||V|| denotes vector-wise norm. Each column vector of V gets normalized. Dividing V by this term creates unit norm columns, ensuring V represents pure direction

The decomposition splits weight scale (captured by m) from structural orientation (captured by normalized V). Clean separation, powerful results.

The DoRA Mechanism: Decoupled Optimization and Directional Updates

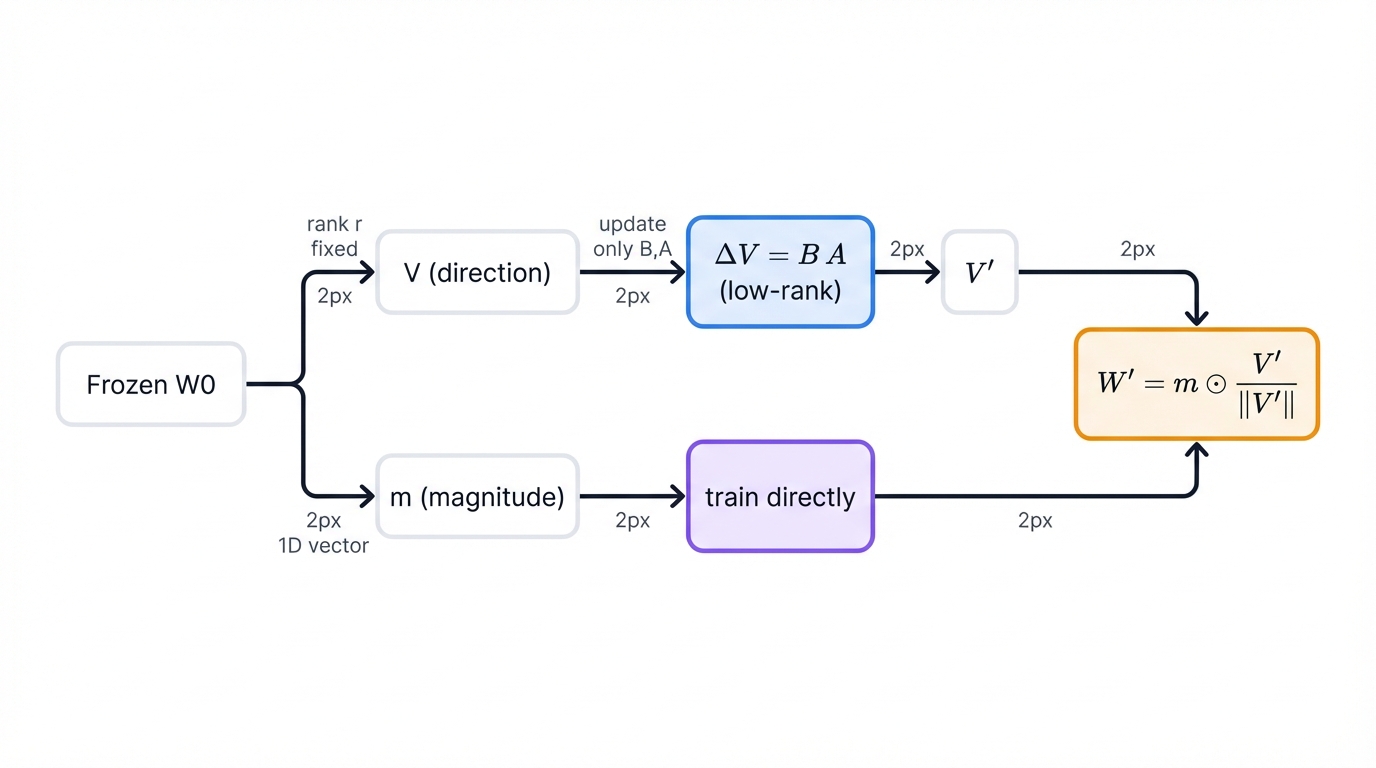

Weight matrix decomposed. Now DoRA fine-tunes magnitude and direction separately, using LoRA's efficiency for the complex directional update.

Applying LoRA to Direction

Directional matrix V is large. Direct fine-tuning? Expensive. DoRA applies LoRA for efficient updates. Fine-tuned directional matrix: V' = V + ΔV. The change ΔV approximates through two low-rank matrices: ΔV = BA.

Independent Magnitude Training

Meanwhile, magnitude vector m trains directly. Since m is one-dimensional, added trainable parameters are tiny—just 0.01% more than standard LoRA. Computational cost increase? Negligible.

The Complete Update

The final updated weight matrix W' combines trained magnitude with LoRA-updated direction:

W' = m' * ((V + BA) / ||V + BA||)

# During training:

# - Gradients update magnitude vector m'

# - Gradients update low-rank matrices B and A

# - Original pre-trained W₀ remains frozenGradients flow back updating magnitude vector m and low-rank matrices B and A. Original pre-trained weights? Frozen solid. This sophisticated modeling transforms LoRA's "black-box" approximation into a "white-box" decomposition targeting specific weight properties.

Empirical Performance and Benchmarking Results

Theory is beautiful. But empirical results? They prove DoRA's superiority over LoRA across every dimension that matters.

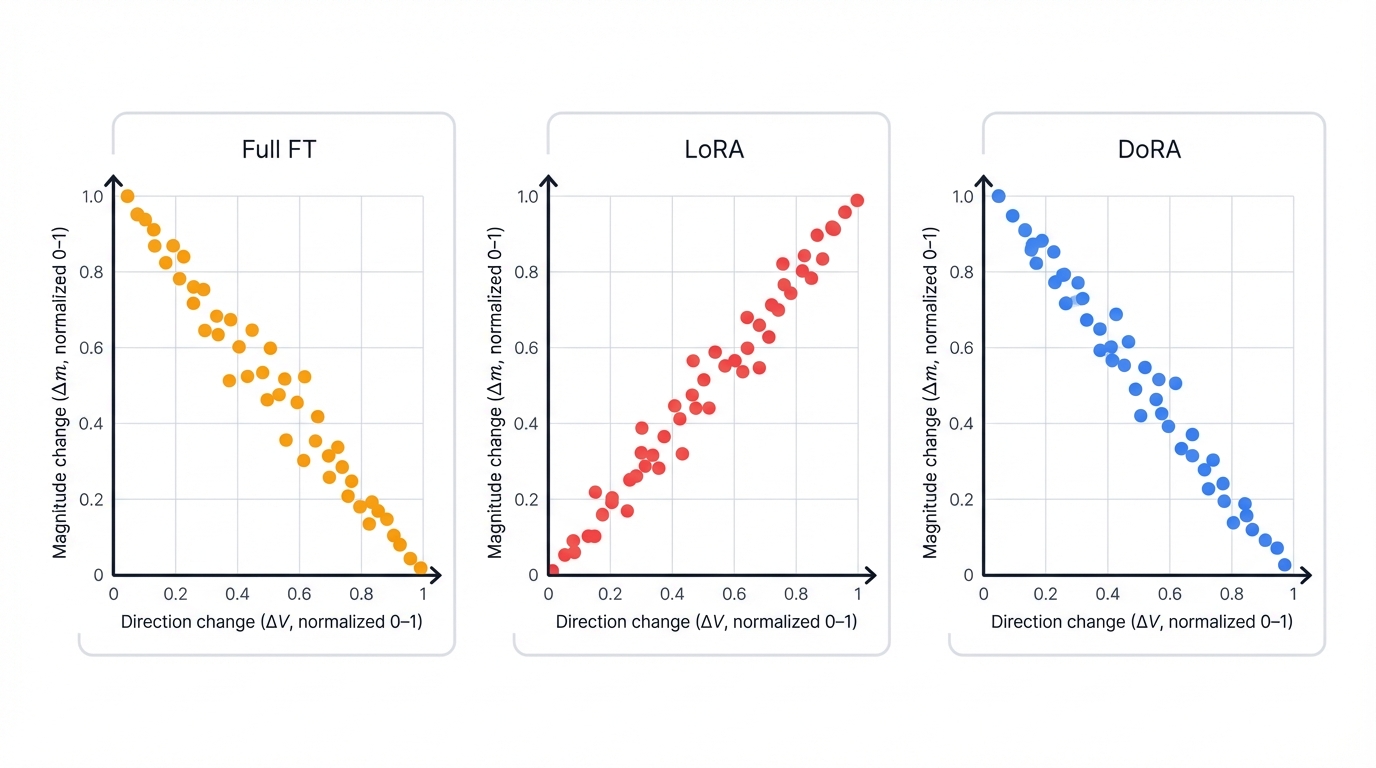

Mimicking Full Fine-Tuning Dynamics

Watch what happens when you plot magnitude change against direction change during training. A distinct pattern emerges. Full fine-tuning and DoRA both show negative correlation—large magnitude changes pair with small direction changes, and vice versa. Nuanced adaptation at work. LoRA? Positive correlation. Updates are either large in both magnitude and direction or small in both. Zero flexibility. This rigidity explains why LoRA falls short of FT. DoRA replicates FT's negative correlation, unlocking enhanced learning capacity and superior performance.

Consistent Performance Gains

Head-to-head comparisons tell the story. DoRA crushes LoRA across diverse benchmarks and model architectures. The numbers speak:

- Commonsense Reasoning (LLMs): Major improvements on LLaMA models—+3.7 on LLaMA-7B, +1.0 on LLaMA-13B, +2.9 on LLaMA2-7B, and a stunning +4.4 on LLaMA3-8B

- Vision-Language Tasks (VLMs): Superior visual instruction tuning (+0.6 on LLaVA-7B) and image/video-text understanding (+0.9 and +1.9 on VL-BART)

- Text-to-Image Generation: Better personalization in diffusion model fine-tuning compared to LoRA

Robustness and Stability

DoRA dominates with rank hyperparameter robustness. Lower ranks? DoRA significantly outperforms LoRA. One study: 22.4% improvement at rank 4, 37.2% at rank 8. This makes DoRA the go-to choice when parameter budgets are brutally constrained—strong performance with minimal trainable parameters.

Compatibility and Extensibility

DoRA plays well with others. Researchers have built hybrid methods: QDoRA combines DoRA with QLoRA's 4-bit quantization. DVoRA integrates DoRA with the ultra-efficient VeRA technique. The weight decomposition concept proves remarkably versatile.

NVIDIA's Assessment: DoRA delivers superior performance and stability with zero inference overhead and negligible training parameter increase. It's not just an alternative to LoRA—it's a direct, superior replacement. NVIDIA researchers call it a "costless replacement for LoRA," positioning it as the new de facto standard for LoRA-style parameter-efficient fine-tuning.

Clarification of an Alternative Formulation: DoRA (Dynamic Rank Adaptation)

Let's clear up confusion. Another PEFT method shares the DoRA acronym but operates on completely different principles: Dynamic Rank Adaptation. The paper "DoRA: Enhancing Parameter-Efficient Fine-Tuning with Dynamic Rank Distribution" details this approach. Keep it distinct from Weight-Decomposed DoRA.

Core Principle

This DoRA version tackles a key LoRA limitation: fixed, uniform rank (r) across all targeted weight matrices. The problem? Different layers have vastly different learning requirements for specific tasks. Some need large parameter budgets (higher rank) for effective adaptation. Others need barely anything. Uniform rank ignores this reality.

Technical Methodology

Dynamic Rank Adaptation centers on adaptive budget allocation:

- Decomposition into Single-Rank Components: Start by decomposing a high-rank LoRA layer into single-rank components. Think of each component as a LoRA layer with r=1

- Importance Evaluation: During training, evaluate each component's contribution to overall task performance

- Dynamic Pruning: Prune low-contribution components. This reallocates limited parameter budget from unimportant model parts to critical modules

Objective

Maximize constrained parameter budget utility. Create non-uniform rank distribution across the model dynamically. Achieve better performance than fixed-rank LoRA using identical total trainable parameters.

Distinction from Weight-Decomposed DoRA

The fundamental difference lies in core mechanism and objective:

- Dynamic Rank DoRA pursues intelligent budget allocation. The question: "Given fixed LoRA parameter budget, how do we distribute it most effectively across layers?" Innovation lives in structural adaptation and pruning within existing LoRA framework

- Weight-Decomposed DoRA seeks faithful fine-tuning approximation. The question: "Does LoRA approximate the right quantity? Can we decompose the approximation target (weight matrix itself) for better results?" Innovation transforms what gets adapted

Important: Two distinct methods emerging under the same acronym reveals a broader PEFT research trend. After LoRA's breakthrough, the field rapidly addressed second-order limitations. These approaches represent different philosophical paths: one optimizes adaptation structure (Dynamic Rank), the other optimizes adaptation target (Weight-Decomposed).

Comparative Analysis: The Trade-Offs Between DoRA and Full Fine-Tuning

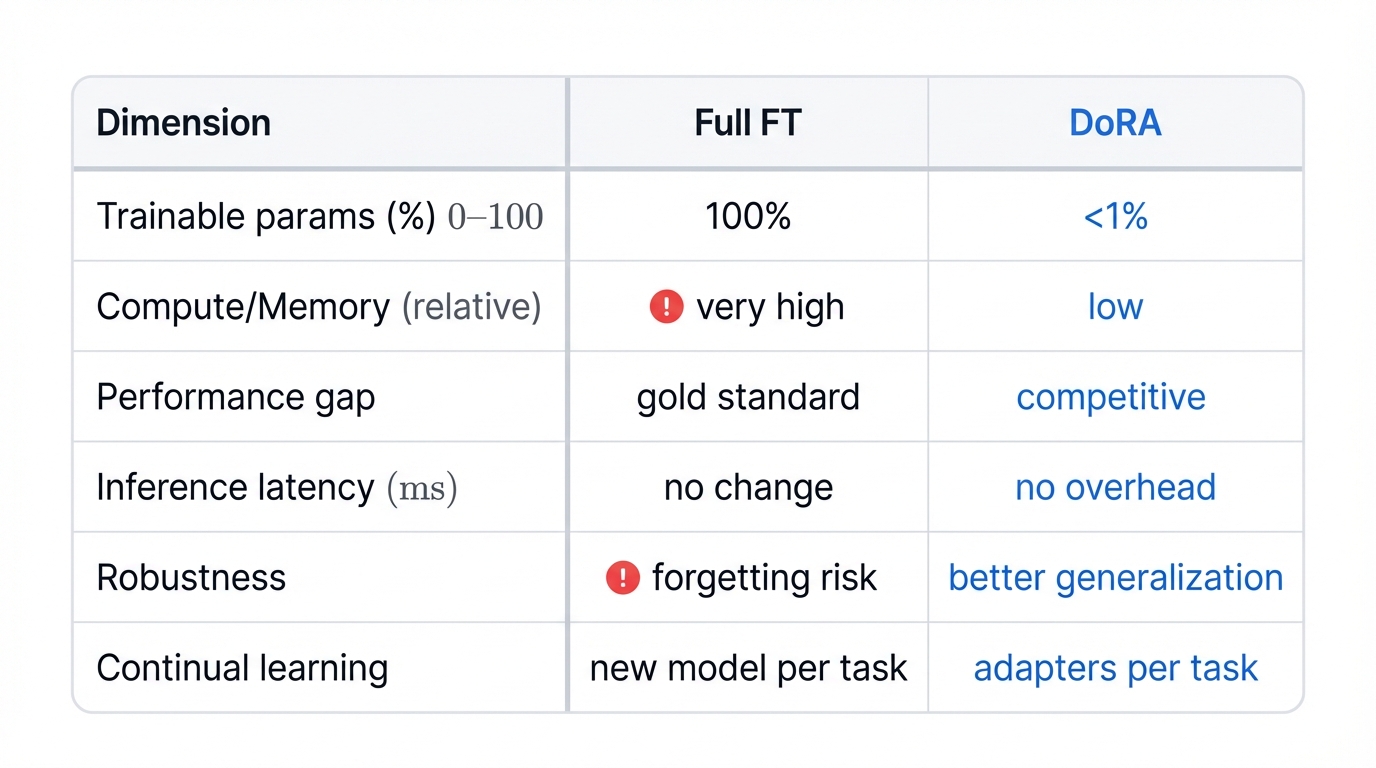

Choosing an adaptation strategy demands careful evaluation of trade-offs. This comparison pits Weight-Decomposed DoRA against traditional full fine-tuning (FT) across critical axes, moving far beyond simple efficiency-versus-performance debates.

Parameter and Resource Efficiency

The most dramatic divergence:

- DoRA: Trains a minuscule parameter fraction—often under 1%. Result? Drastically lower computational resource demand (GPU/TPU), reduced memory consumption during training, significantly faster training cycles

- FT: Updates 100% of model parameters. Resource requirements become immense barriers for all but the largest organizations

Performance and Accuracy

Historically the primary trade-off. FT held the performance crown:

- FT: The gold standard for performance—most comprehensive model adaptation to new tasks. But superiority isn't guaranteed. Dataset size and quality heavily influence results

- DoRA: Explicitly designed to close the FT performance gap. By accurately mimicking full fine-tuning's learning dynamics, DoRA achieves highly competitive performance. Some complex or domain-specific tasks may show marginal gaps. But documented cases prove DoRA can match or exceed FT performance, especially in data-constrained scenarios where FT overfits catastrophically

Inference Latency

Production systems demand response speed:

- FT: Final artifact maintains original architecture. Inference latency? Unchanged

- DoRA: Critical feature: learned magnitude vector and low-rank directional matrices (BA) merge mathematically back into original weight matrices post-training. Like LoRA, DoRA introduces zero additional inference overhead. This strategic advantage crushes other PEFT methods like Adapters, which permanently increase inference time with new layers

Model Robustness and Generalization

Generalization ability and knowledge retention define model utility:

- FT: Highly susceptible to catastrophic forgetting. Aggressive parameter updates erase generalized pre-training knowledge. Small datasets trigger overfitting, destroying generalization

- DoRA: Freezes vast majority of base parameters. Inherently robust against catastrophic forgetting. Low-rank update constraints act as powerful regularization. Overfitting risk plummets. Often delivers better generalization than poorly executed full fine-tuning on limited datasets

Continual Learning and Task Switching

Multi-task adaptation flexibility is standard enterprise need:

- FT: Fundamentally ill-suited for multi-task or continual learning. Each task demands creating and storing a new, massive model. Inefficient. Unscalable

- DoRA: Excels brilliantly. Train small, separate DoRA adapters for each task. At inference, load the appropriate adapter, apply to single shared base model. Modular approach enables hyper-efficient storage and lightning-fast task-switching—ideal architecture for multi-tenant systems handling diverse tasks

| Feature | Full Fine-Tuning (FT) | Low-Rank Adaptation (LoRA) | Weight-Decomposed DoRA |

|---|---|---|---|

| Trainable Parameters | 100% of model parameters | Typically < 1% | Typically < 1.01% |

| Memory Usage (Training) | Very High | Low | Low |

| Training Time | Very Long | Short | Short |

| Storage per Task | Full model size | Small adapter size (MBs) | Small adapter size (MBs) |

| Inference Overhead | None | None (mergeable) | None (mergeable) |

| Performance vs. FT | Baseline (100%) | High, but often with a gap | Very High, closes the gap |

| Catastrophic Forgetting Risk | High | Low | Low |

| Ideal Use Case | High-resource scenarios with large datasets | General-purpose efficient fine-tuning | High-fidelity tasks, default LoRA replacement |

The Broader Landscape: A Survey of Alternative Parameter-Efficient Fine-Tuning (PEFT) Methodologies

DoRA represents significant advancement. But it's part of a rich, rapidly evolving PEFT ecosystem. Understanding this broader landscape proves essential for selecting optimal adaptation strategies. PEFT methods fall into three main categories based on model modification approach: additive, selective, and reparameterization-based.

Additive Methods: Injecting New Parameters

These methods freeze the original pre-trained model entirely. New, trainable modules get introduced.

Adapters

Adapters rank among the earliest, most well-studied PEFT techniques. Small, trainable neural network modules get inserted between existing frozen model layers—typically after attention and feed-forward blocks in Transformers. To maintain low parameter counts, adapters employ "bottleneck" architecture: linear layer down-projects input to smaller dimension, non-linear activation fires, another linear layer projects back to original dimension.

- Key Advantage: Highly modular. Proven effective, especially in multi-task learning

- Key Limitation: Adding new architecture layers introduces permanent (albeit small) inference latency increase. Adapter weights can't merge into original model layers

Prompt-Tuning

Prompt-tuning is extremely parameter-efficient. The entire model stays frozen. Instead, it learns small continuous vector sets—"soft prompts"—prepended to input embedding sequences. The model interprets these trainable "virtual tokens" as task-specific instructions, conditioning output accordingly.

- Key Advantage: One of the most efficient methods for trainable parameters and storage. Only small prompt vectors need storage per task

- Key Limitation: Performance can lag behind more intrusive methods, especially on smaller models (under 10B parameters) or complex tasks requiring substantial internal representation changes

Prefix-Tuning

Prefix-tuning extends prompt-tuning powerfully. Rather than simply adding learnable prefixes to input layers, it introduces trainable prefix vectors to self-attention mechanism keys and values in every Transformer layer. Learned prefixes exert direct influence over model computations at each processing stage.

- Key Advantage: Generally more expressive. Performs better than simple prompt-tuning, particularly on generation tasks

- Key Limitation: Requires more trainable parameters than prompt-tuning. Can be more complex to implement and stabilize during training

Selective Methods: Fine-Tuning a Subset of Existing Parameters

No new parameters added. Instead, identify and fine-tune small subsets of original model parameters.

BitFit (Bias-Term Fine-Tuning)

BitFit takes exceptional sparsity. All main weight matrices freeze. Only bias terms get updates. Bias terms constitute tiny fractions of total model parameters—extreme efficiency for computation and storage.

- Key Advantage: Extreme parameter efficiency. Suitable for very low-resource environments

- Key Limitation: Relatively weak adaptation. Often insufficient for complex tasks requiring significant learned representation changes

Other Reparameterization-Based Advances

This category—including LoRA and DoRA—modifies models during training by re-parameterizing weight updates. Often merges back to original architecture for inference.

QLoRA (Quantized Low-Rank Adaptation)

QLoRA saves memory by enhancing LoRA. Core innovation? Quantize frozen, pre-trained base model to lower numerical precision (typically 4-bit) before applying standard LoRA. Drastically reducing base model memory makes fine-tuning massive models (65B+ parameters) possible on single, consumer-grade GPUs.

VeRA (Vector-based Random-matrix Adaptation)

VeRA is recent LoRA variant further reducing trainable parameters. The insight: one low-rank matrix (matrix A) can be shared, frozen random matrix across all layers. Only small, learnable scaling vectors needed per layer to adapt matrix B. Even more parameter-efficient than standard LoRA.

OFT (Orthogonal Finetuning)

OFT preserves pre-trained model semantic structure more effectively. It operates on preserving hyperspherical energy—cosine similarity between neuron representations. Achievement method? Learning orthogonal transformation for weights, parameterized efficiently as sparse block-diagonal matrix keeping trainable parameters low.

The PEFT Trilemma: Choosing between these methods means navigating complex trade-offs. No single "best" PEFT technique exists. Rather, there's a trilemma: performance, parameter efficiency, and intrusiveness (inference cost). DoRA and LoRA push performance boundaries while being non-intrusive at inference. Prompt-Tuning and BitFit champion parameter efficiency but may sacrifice performance. Adapters offer strong performance with permanent inference cost. Optimal choice depends entirely on specific application constraints and goals.

| Category | Method | Core Mechanism | Key Advantage | Key Limitation | Inference Overhead |

|---|---|---|---|---|---|

| Additive | Adapters | Inserts small bottleneck layers | High modularity, strong performance | Adds permanent inference latency | Yes |

| Additive | Prompt-Tuning | Learns soft prompt prepended to input | Extremely high parameter efficiency | Less effective on smaller models | Yes (longer sequence) |

| Additive | Prefix-Tuning | Learns prefixes for keys/values in attention | More expressive than prompt-tuning | More parameters and complexity | Yes (longer sequence) |

| Selective | BitFit | Fine-tunes only bias terms | Minimal trainable parameters | Weak adaptation, unsuitable for complex tasks | None |

| Reparam. | LoRA | Approximates weight updates with low-rank matrices | Strong performance, no inference overhead | Suboptimal approximation of FT dynamics | None |

| Reparam. | QLoRA | Applies LoRA on quantized base model | Drastically reduces memory usage | Minor accuracy impact from quantization | None |

| Reparam. | DoRA | Decomposes weights into magnitude and direction | Superior performance and stability to LoRA | Negligibly more parameters than LoRA | None |

Strategic Applications and Implementation Guidance

DoRA's technical superiority translates into clear strategic advantages for specific applications. This section delivers actionable guidance on when to choose DoRA and how it stacks against other PEFT methods in practical scenarios.

When to Choose DoRA

DoRA should be preferred in scenarios demanding high performance combined with resource efficiency:

- High-Fidelity Tasks: Applications in sensitive or nuanced domains—legal document analysis, financial modeling, complex dialogue systems—demand performance as close as possible to full fine-tuning. DoRA's ability to maintain model stability and fidelity makes it ideal for high-stakes use cases where subtle errors carry significant consequences

- Resource-Constrained, High-Performance Needs: Real-world settings often limit training resources while maintaining high performance requirements. DoRA's demonstrated superiority over LoRA, especially at lower ranks, makes it the ideal solution for this common trade-off. Strong results emerge even with highly constrained parameter budgets

- As a Default LoRA Replacement: DoRA consistently outperforms LoRA. More stable. No additional inference cost or significant training overhead. It should be the default choice over standard LoRA for most new fine-tuning projects. A "free" upgrade in performance and robustness

Application Domains

DoRA's effectiveness has been empirically validated across AI domains:

- Natural Language Processing (NLP): DoRA shows clear superiority over LoRA in foundational NLP tasks—commonsense reasoning, text classification, instruction following on leading LLMs. Well-suited for building specialized chatbots, summarizers, and analytical tools

- Vision-Language Models (VLMs): In the rapidly growing multimodal space, DoRA proves effective for visual question answering (VQA), image and video captioning, visual instruction tuning. Its ability to enhance performance on models like LLaVA and VL-BART makes it valuable for developing more capable multimodal systems

- Generative AI: Creative applications like text-to-image generation use fine-tuning to teach specific artistic styles or subject matter. DoRA achieves better personalization and higher-fidelity results than LoRA, allowing more precise control over generative processes

Choosing Between DoRA and Other PEFTs

The decision to use DoRA versus another PEFT method hinges on primary project constraints:

- DoRA vs. Adapters: Critical deciding factor? Inference latency. Zero additional latency as strict requirement for real-time applications? DoRA is the clear choice due to mergeable nature. Small, constant inference time increase acceptable and highest modularity degree (composing multiple adapters) top priority? Then Adapters may be considered

- DoRA vs. Prompt/Prefix-Tuning: Choice depends on required adaptation depth. Tasks necessitating significant internal representation and behavior changes? DoRA is superior—directly modifies model weights. Simpler classification or generation tasks, especially using extremely large models (>10B parameters) where prompt-based methods become more competitive? Prompt-Tuning offers absolute maximum parameter efficiency. Prefix-Tuning occupies middle ground—more power than Prompt-Tuning but typically less than DoRA

Conclusion and Future Outlook

Weight-Decomposed Low-Rank Adaptation (DoRA) marks a significant, mature step in Parameter-Efficient Fine-Tuning's evolution. It moves beyond LoRA's initial, powerful-but-imperfect heuristic to more principled, empirically grounded methodology. By identifying and isolating magnitude and direction's distinct roles in weight updates, DoRA provides faithful, effective approximation of full fine-tuning. The result? A technique demonstrably more performant and stable than its predecessor, substantially narrowing the long-standing performance gap with resource-prohibitive full fine-tuning.

Crucially, DoRA achieves these gains while preserving PEFT's core economic and operational benefits. Computationally inexpensive? Check. Minimal storage? Check. Zero inference latency? Absolutely. This combination positions DoRA as powerful, practical, costless LoRA replacement—poised to become the new standard for vast ranges of fine-tuning applications across NLP, computer vision, and multimodal AI.

Future Research Directions:

- More Sophisticated Decompositions: Future research may explore even more granular decompositions beyond magnitude and direction

- Hybrid Methods: Combining different PEFT family strengths—integrating DoRA's reparameterization with Dynamic Rank DoRA's adaptive budget allocation or prompt-based methods' extreme efficiency

- Dynamic Adaptation: Methods automatically determining optimal rank or switching between different PEFT strategies based on task complexity

Looking forward, DoRA's success points toward ever more granular, efficient, principled techniques. The field won't stop at decomposing weights into just magnitude and direction. The ultimate goal remains crystal clear: develop adaptation techniques that are maximally performant, minimally resource-intensive, robustly generalizable. As these methods advance, they'll further democratize state-of-the-art AI access, empowering broader communities of developers and researchers to build more capable, specialized intelligent systems.