The $50 Million Wake-Up Call

The email arrived at 7:23 AM. A Monday morning that would change everything for DataFlow AI. "Congratulations on your Series B funding" — fifty million dollars to scale from 50 employees to 500 in eighteen months. What should have been a celebration quickly turned into an existential crisis for Alex Chen, the company's first and only security hire.

Key Concept: Understanding this foundational concept is essential for mastering the techniques discussed in this article.

Money wasn't the problem. Growth wasn't the issue. The problem was infrastructure — their security infrastructure was built for twelve people working out of a cramped office, not for a company about to become a major player in the AI industry. Password policies and basic endpoint protection weren't going to cut it when handling enterprise customer data and facing sophisticated nation-state attackers who specifically target AI companies for their valuable intellectual property.

Alex stared at the screen. Everything had to change overnight. This wasn't about incremental improvements or gradual scaling — this was about fundamental transformation from startup security thinking to enterprise-grade capabilities that could withstand real-world threats while enabling rapid business growth.

This transformation represents the most critical challenge facing AI companies today, and unlike traditional software companies that can add security gradually, AI organizations must handle model security, algorithmic bias, data governance, and regulatory compliance from day one, because getting it wrong means facing regulatory sanctions, customer defection, and potentially catastrophic safety incidents that can destroy years of progress in minutes.

DataFlow AI's journey from security startup to industry leader took eighteen brutal months. The transformation was successful. Their experience, combined with lessons learned from dozens of other AI companies that have navigated this path, provides a blueprint that works in practice, not just in theory.

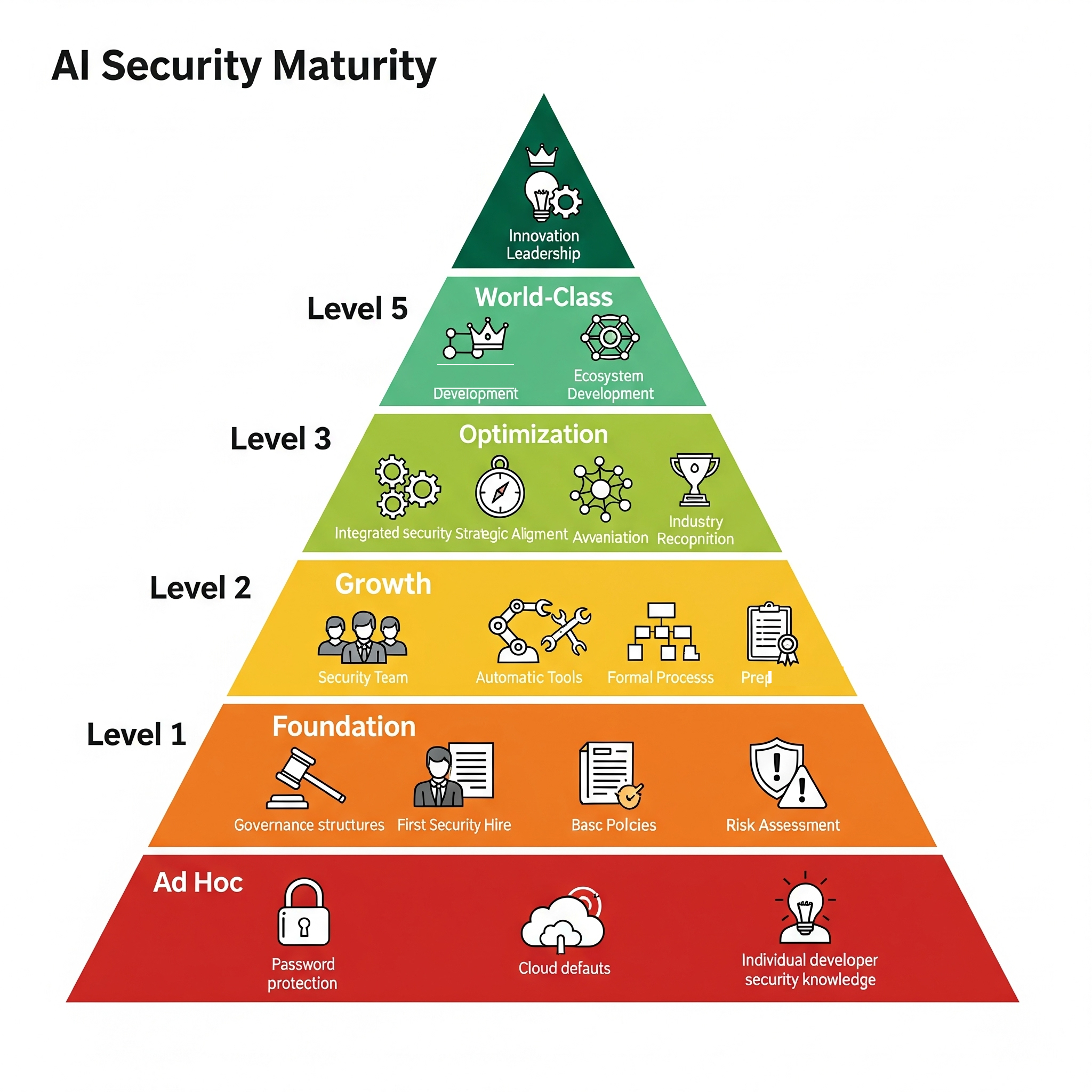

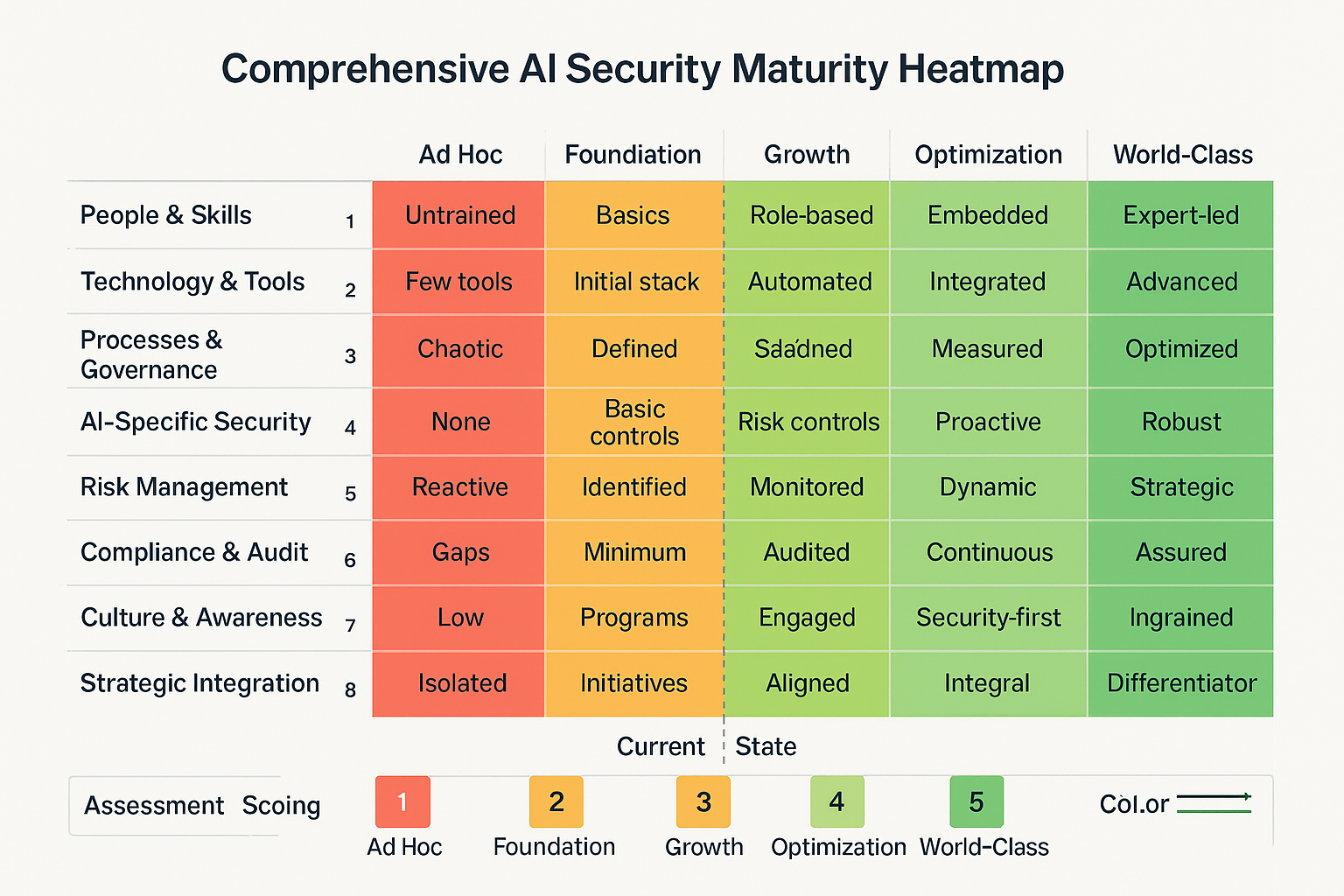

Understanding AI Security Maturity

Most discussions of security maturity focus on traditional IT systems. They miss the unique challenges that artificial intelligence creates. AI security maturity isn't just about protecting computers and networks — it's about safeguarding intelligent systems that can learn, adapt, and make decisions that directly impact business outcomes and human welfare.

The maturity journey follows predictable patterns, but with AI-specific twists that catch most organizations off guard, because traditional security frameworks like NIST or ISO 27001 provide useful foundations yet they weren't designed for systems that can be compromised through adversarial examples or model poisoning attacks that don't exist in conventional IT environments.

Consider the fundamental differences. Traditional applications process data according to fixed rules programmed by humans. AI systems learn patterns from data and make decisions based on statistical relationships that even their creators don't fully understand. This creates entirely new categories of risk that require specialized expertise, tools, and approaches that most security professionals haven't encountered before.

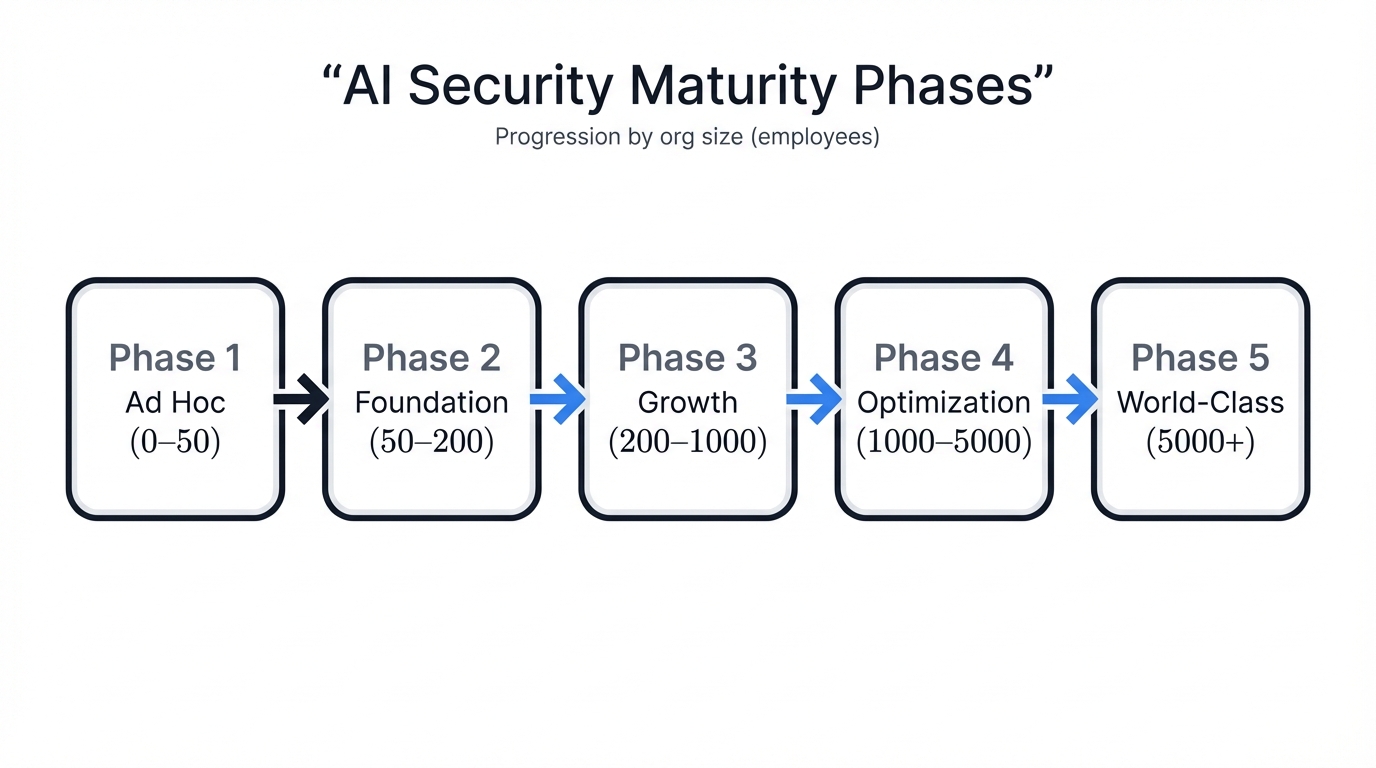

The Five Phases of AI Security Maturity

Phase 1: Ad Hoc (0-50 employees) represents the startup reality where security happens through individual heroics rather than systematic processes, and companies at this stage typically rely on cloud provider defaults, basic access controls, and the security knowledge of individual developers who may or may not have formal security training.

Phase 2: Foundation (50-200 employees) involves building the basic infrastructure and processes that can scale. This phase requires hiring dedicated security talent. It demands implementing fundamental governance structures. Most importantly, it establishes the cultural patterns that will determine long-term security effectiveness.

Phase 3: Growth (200-1000 employees) focuses on systematic scaling of security capabilities through automation, specialized roles, and enterprise-grade tools that can handle increasing complexity without proportional increases in manual effort.

Phase 4: Optimization (1000-5000 employees) emphasizes efficiency, integration, and strategic value creation where security capabilities directly enable business objectives rather than simply protecting against threats.

Phase 5: World-Class (5000+ employees) represents industry leadership. Innovation drives it. Ecosystem development sustains it. Security capabilities become competitive advantages in their own right.

Each phase requires different approaches, investments, and leadership focus, and trying to skip phases or implement capabilities before the organization is ready typically results in expensive failures and security debt that becomes increasingly costly to address over time.

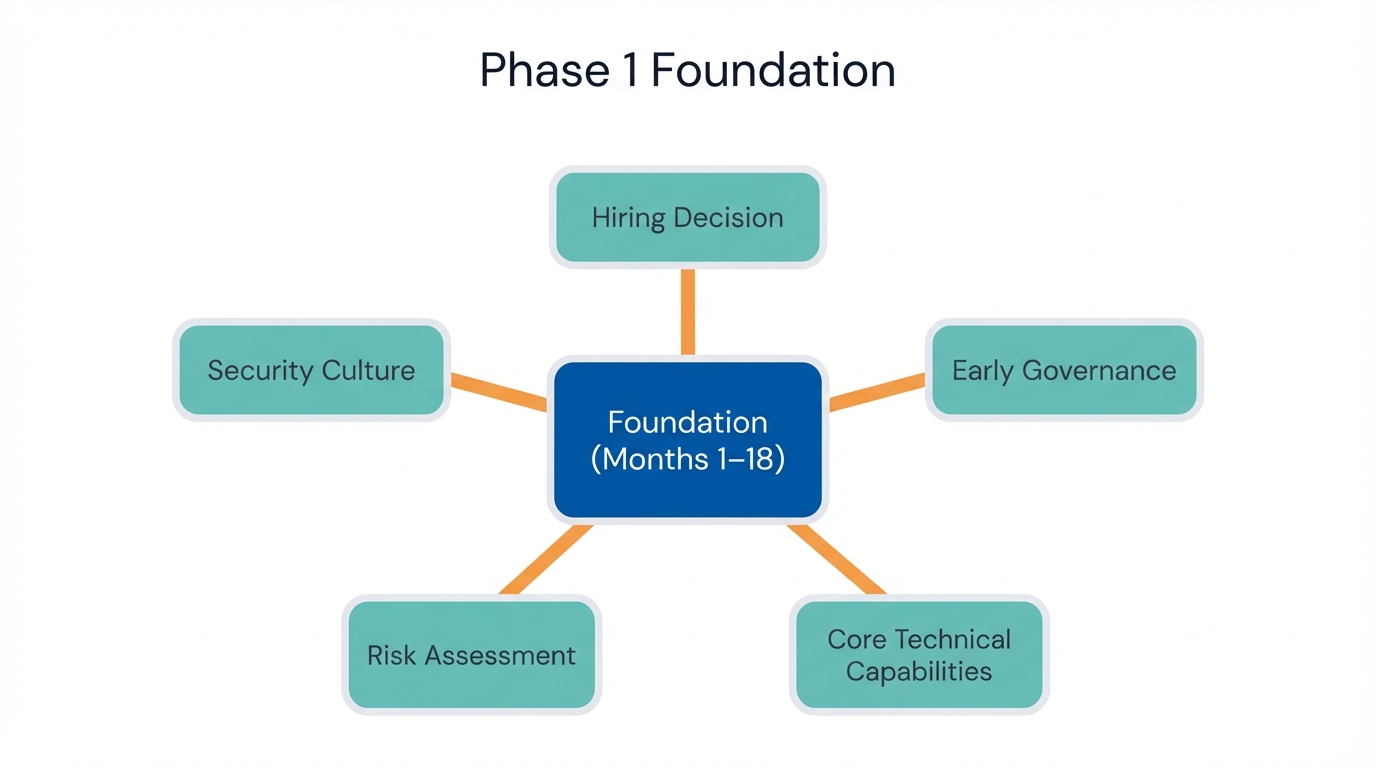

Phase 1: Building Your Foundation (Months 1-18)

The foundation phase determines whether your security program will scale successfully or collapse under its own weight as the organization grows, because early decisions about people, processes, and technology create path dependencies that can either accelerate future development or force expensive rebuilding efforts later.

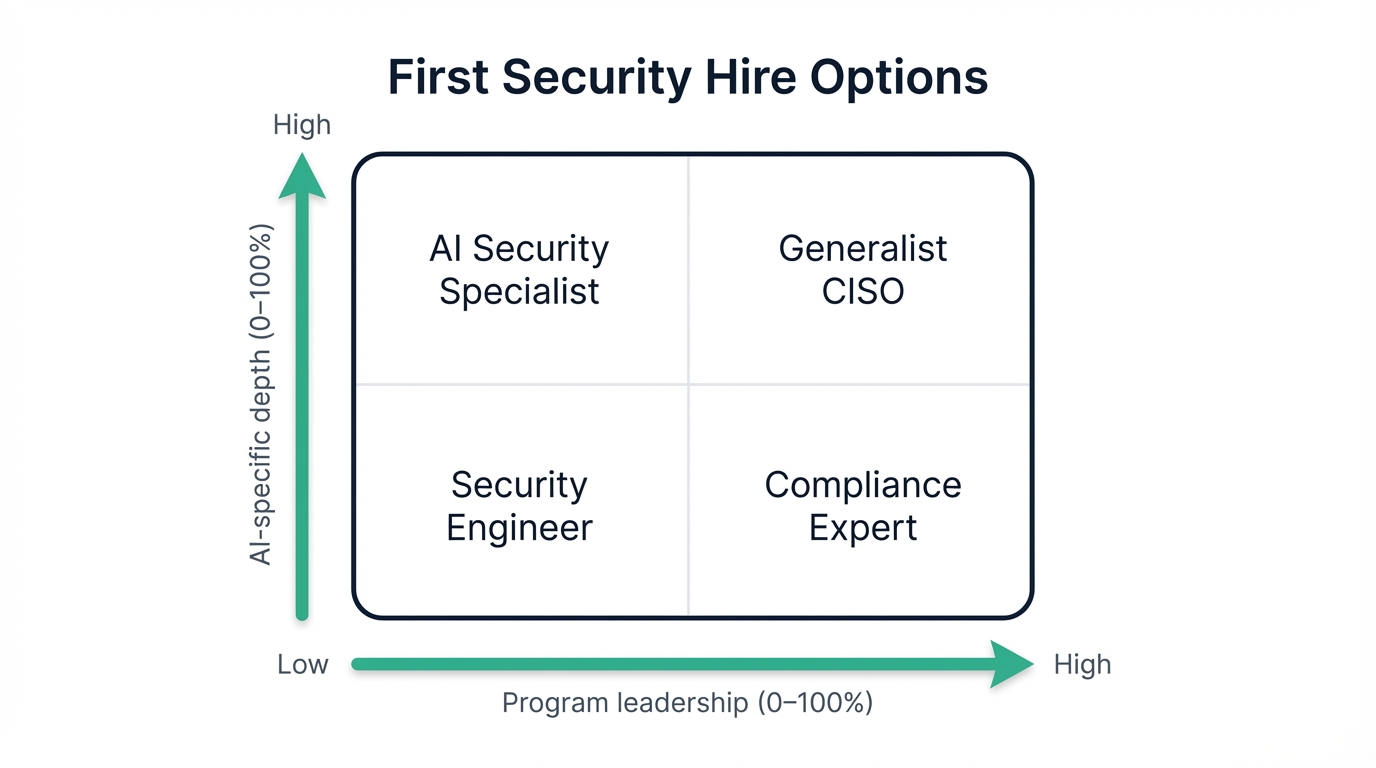

The Make-or-Break Hiring Decision

Your first security hire will shape organizational security culture for years to come. This makes it one of the most critical decisions early-stage companies face. The wrong choice creates persistent problems that affect every subsequent security investment and initiative.

The hiring landscape presents four primary options, each with distinct advantages and limitations that you need to understand before making your decision. AI Security Specialists bring deep knowledge of machine learning vulnerabilities, adversarial attacks, and model security — expertise that's increasingly valuable but often comes with limited broader cybersecurity experience and premium compensation requirements that strain startup budgets.

Generalist CISOs offer strategic thinking and comprehensive security program experience, but they typically need months to understand AI-specific threats and may focus too heavily on compliance rather than the technical security challenges that AI companies face during rapid growth phases.

Security Engineers provide the hands-on technical skills needed to build security infrastructure while growing alongside the company's expanding needs, and although they may initially lack strategic experience, their technical depth and adaptability make them ideal for organizations that need to build capabilities organically rather than implementing pre-existing frameworks.

Compliance Experts excel at navigating regulatory requirements and customer security assessments. They bring structure and process rigor. However, they often lack the technical depth needed to address algorithmic vulnerabilities, model security, and other AI-specific technical challenges that can't be solved through policy alone.

For most AI startups, the optimal choice is a senior security engineer with AI experience and demonstrated leadership potential, because this profile provides immediate technical value while building the strategic capabilities needed as the organization matures, and the key is finding someone who can solve today's technical problems while growing into tomorrow's leadership requirements.

Establishing Early Governance

Even small organizations need clear decision-making frameworks. Security issues demand them. The absence of governance structures leads to inconsistent decisions, unclear accountability, and ad-hoc responses that create vulnerabilities and inefficiencies.

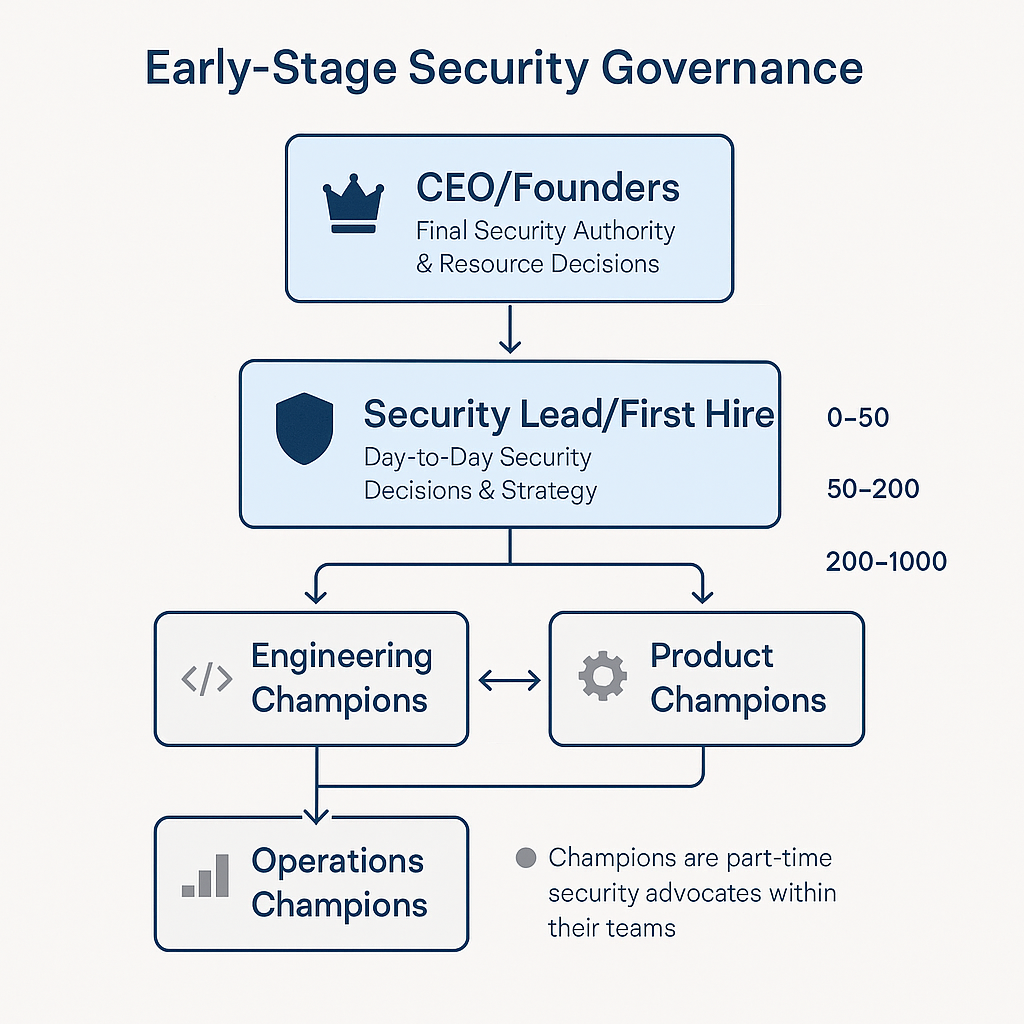

Effective early governance starts with simple structures that can evolve as the organization grows, and the CEO or founding team retains final authority over security decisions and resource allocation, ensuring that security considerations receive appropriate attention at the highest organizational level while the security lead handles day-to-day security decisions within established parameters, developing expertise and judgment while building relationships with other functional leaders.

Security champions embedded within engineering, product, and operations teams serve as bridges between security requirements and practical implementation challenges, and while these champions don't need deep security expertise, they understand how security affects their functional areas and can advocate for security considerations during routine decision-making processes.

This governance structure prevents security from becoming a bottleneck while ensuring that security considerations influence decisions across all organizational functions, and regular review meetings, clear escalation paths, and basic metrics tracking provide the feedback mechanisms needed for continuous improvement as the organization grows and faces new challenges.

Core Technical Capabilities

AI systems create unique security challenges. They require specialized approaches. Traditional application security methods aren't enough. Model protection, data governance, and secure development practices must be designed specifically for machine learning workflows and deployment patterns.

Model protection begins with treating trained models as valuable intellectual property that requires the same level of protection as source code or customer data, where encryption at rest and in transit protects model weights and parameters from unauthorized access, while access controls limit who can view, modify, or deploy models based on business roles and responsibilities.

Version control for models creates audit trails that enable rollback capabilities and change tracking, but unlike traditional software version control, model versioning must account for training data provenance, hyperparameter settings, and performance metrics that affect model behavior in ways that aren't immediately obvious from examining the model artifacts alone.

Data governance for AI systems goes far beyond traditional data classification schemes because training data requires special handling — it directly influences model behavior in ways that persist long after the original data is deleted, and data lineage tracking becomes critical for understanding how upstream changes affect model performance and for supporting regulatory requirements that may emerge years after models are deployed.

Privacy controls for AI training data must account for the fact that machine learning models can inadvertently memorize sensitive information from training datasets, creating privacy risks through model inversion attacks and membership inference techniques that can extract information about individuals whose data was used for training.

Secure development integration embeds security considerations directly into AI development workflows rather than treating security as a separate concern, and code review processes must consider AI-specific issues like adversarial robustness, bias detection, and model validation techniques that go beyond traditional security code review practices.

Dependency management for AI systems requires special attention because machine learning libraries and frameworks evolve rapidly, often with security implications that aren't immediately apparent, and supply chain security for AI components must account for the fact that pre-trained models and datasets can contain backdoors or biases that traditional vulnerability scanners can't detect.

Risk Assessment and Management

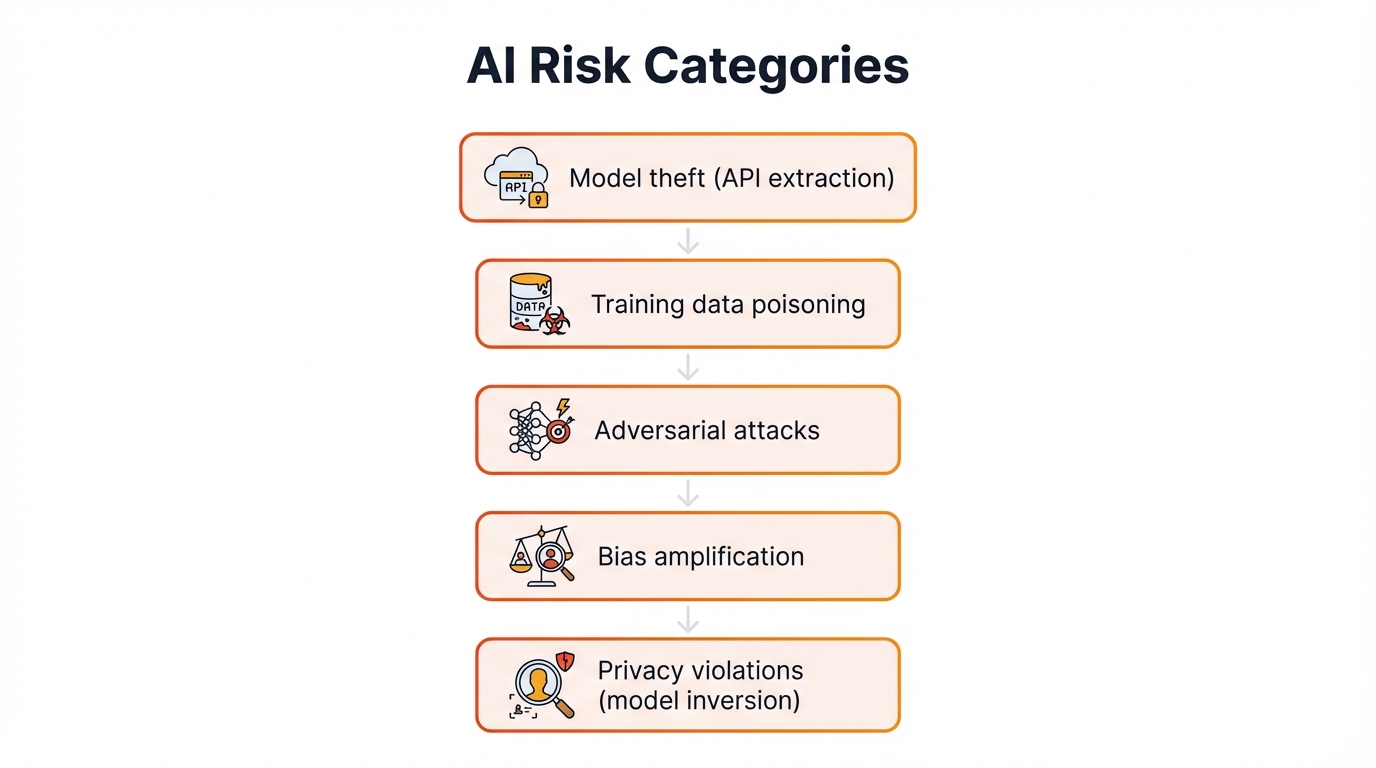

AI introduces risk categories. Traditional assessment approaches can't adequately address them. Model theft through API extraction, training data poisoning, adversarial attacks, bias amplification, and privacy violations through model inversion represent threats that didn't exist before artificial intelligence became central to business operations.

| Risk Category | Likelihood | Impact | Mitigation Priority |

|---|---|---|---|

| Model Theft/IP Loss | High | Critical | Immediate |

| Training Data Poisoning | Medium | High | Medium-term |

| Adversarial Attacks | High | Medium | Immediate |

| Bias/Fairness Issues | High | Critical | Immediate |

| Privacy Violations | Medium | High | Medium-term |

The risk assessment process for AI systems requires specialized approaches that account for the statistical nature of machine learning and the fact that AI failures often emerge gradually rather than through discrete security events, where asset inventory must include not just traditional IT assets but also training datasets, model versions, and the complex dependencies between different AI system components.

Threat modeling for AI systems must consider adversaries who can manipulate inputs, training data, and even the learning process itself, and unlike traditional threat models that focus on unauthorized access to systems or data, AI threat models must account for authorized users who might try to extract intellectual property through legitimate API access or influence model behavior through carefully crafted training examples.

Building Security Culture

Technical capabilities alone cannot create effective security programs. Security culture determines whether policies get followed. It influences daily decisions. It affects whether the organization can maintain security effectiveness as it scales beyond the direct oversight of the initial security team.

Developer security training for AI systems requires specialized knowledge that goes far beyond traditional secure coding practices, because understanding adversarial examples, model poisoning, differential privacy, and other AI-specific concepts becomes essential for developers who are building systems that can learn and adapt in ways that create new security implications.

The training program should emphasize practical skills rather than theoretical knowledge, where developers need to understand how to validate model robustness, implement privacy-preserving techniques, detect bias in training data, and respond to AI-specific security incidents, and this knowledge enables them to make better security decisions during daily development work rather than relying on security reviews that happen too late in the development process.

Cross-functional integration ensures that security thinking spreads beyond engineering teams to product management, operations, and business functions that make decisions affecting security outcomes, and product managers need to understand how security features can create competitive advantages and how security requirements affect feature development timelines, while operations teams need security knowledge to deploy and maintain AI systems securely in production environments.

Phase 2: Scaling and Formalization (Months 18-36)

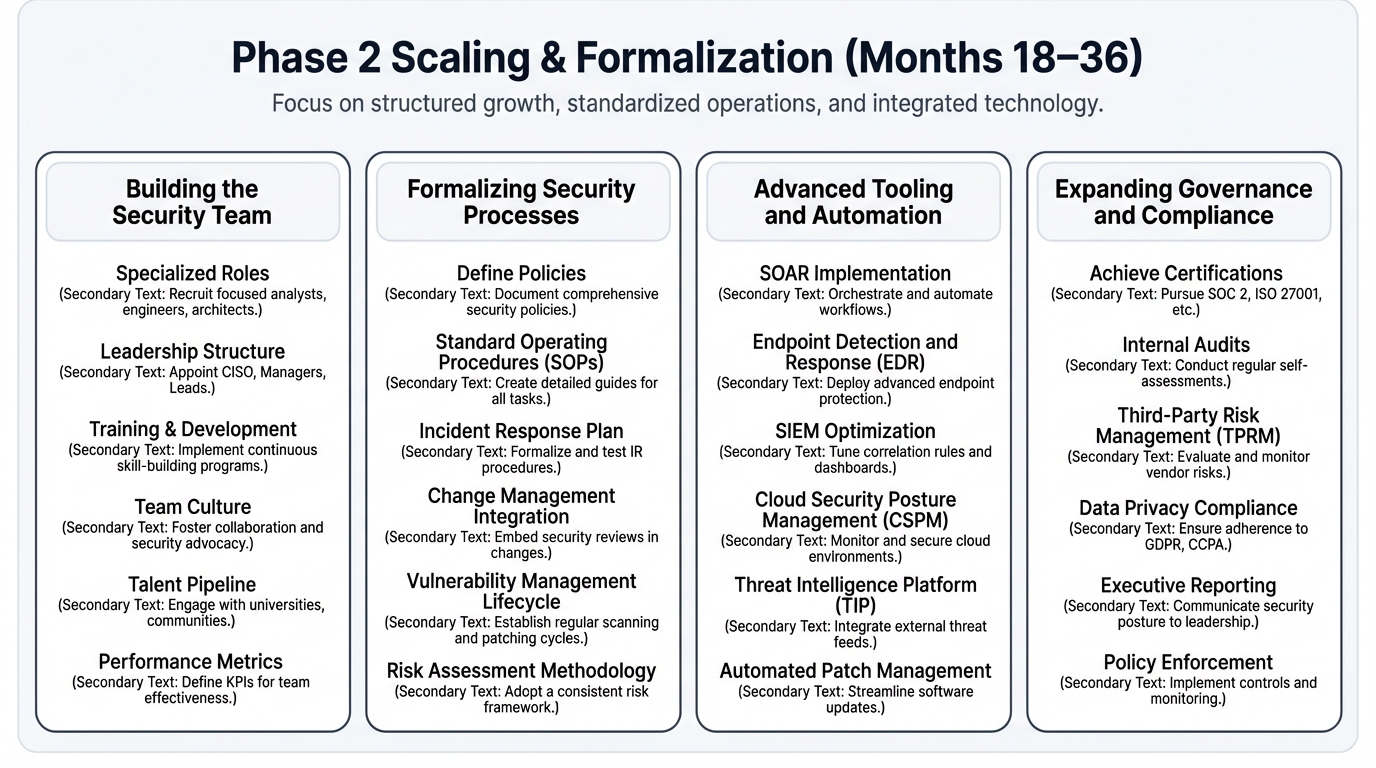

The scaling phase represents the most dangerous period. Organizational security maturity development reaches a critical juncture. Ad-hoc processes that worked for fifty people break down catastrophically at two hundred people, creating security gaps that can take months to identify and years to fix properly.

Building the Security Team

The transition from individual heroics to team-based security requires careful attention to role definition, skill development, and team dynamics that can either accelerate security maturity or create persistent dysfunction that undermines security effectiveness for years.

Specialized roles become necessary as the complexity of security challenges exceeds what any individual can handle effectively, where Application Security Engineers focus on securing the AI development lifecycle through specialized tools, processes, and expertise that address the unique challenges of machine learning systems, while Cloud Security Engineers manage the expanding infrastructure where models are trained and deployed, requiring deep knowledge of cloud security patterns and AI-specific deployment challenges, and Governance, Risk, and Compliance Analysts formalize policies, manage customer security assessments, and prepare for audits that become increasingly important as the organization pursues enterprise customers.

The key challenge during team building is maintaining culture and effectiveness while adding specialized expertise, because new team members must understand not just their functional responsibilities but also how their work integrates with other security functions and supports overall business objectives, and this requires systematic onboarding, clear role definitions, and ongoing collaboration that prevents silos from developing between security specializations.

Team structure evolution requires the initial security lead to transition from hands-on technical work to management and strategic responsibilities, and this transition often proves difficult because the skills that made someone effective as an individual contributor don't automatically translate to team leadership and strategic thinking, while organizations that don't manage this transition effectively often lose their founding security talent or create dysfunctional team dynamics that persist long after the immediate crisis passes.

Formalizing Security Processes

Process formalization transforms ad-hoc security activities. It creates systematic, repeatable, and measurable programs. These programs can scale with organizational growth while maintaining consistent effectiveness across different teams and business units.

Risk management evolves from periodic assessments to continuous programs that integrate with business planning cycles and strategic decision-making processes, where formal risk registers track identified risks, mitigation strategies, and ownership assignments that ensure accountability and progress measurement, while regular risk assessments tied to business changes, new product launches, and infrastructure modifications ensure that risk management remains current and relevant as the organization evolves.

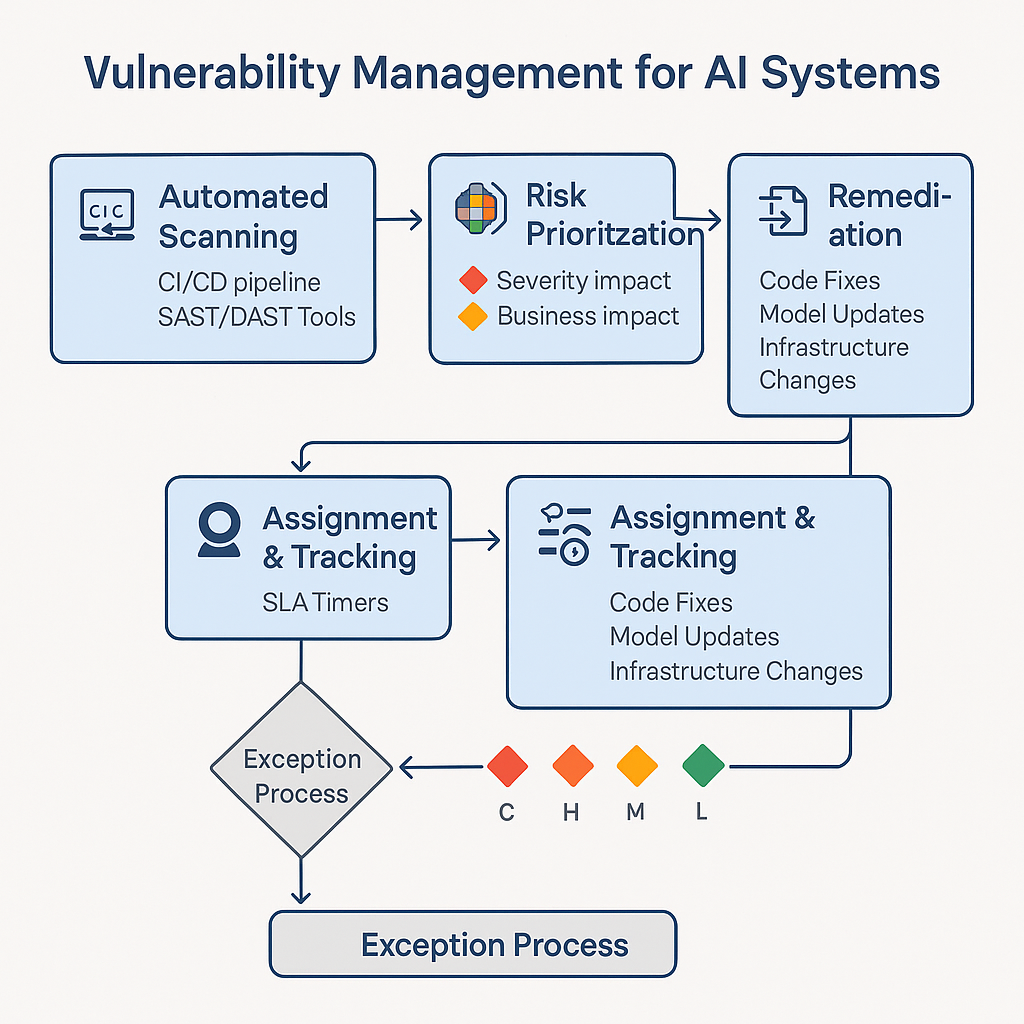

Vulnerability management requires systematic approaches that can handle the scale and complexity of modern AI systems without overwhelming security teams with manual work, where automated scanning tools integrated into development pipelines catch security issues early when they're less expensive to fix, while defined service level agreements for patching create accountability and ensure that vulnerabilities get addressed promptly based on their severity and potential business impact.

Incident response planning prepares the organization to handle security events effectively without panic or improvisation that can make incidents worse, and formal incident response plans define roles, responsibilities, communication procedures, and decision-making authorities that enable coordinated responses under stress, while playbooks for common incident types provide step-by-step guidance that reduces response time and ensures that important steps don't get overlooked during high-pressure situations.

The incident response plan for AI systems must account for unique challenges like model poisoning detection, adversarial attack identification, and bias incident management that don't exist in traditional IT environments, where response procedures must address both technical remediation and business communication challenges that arise when AI systems fail in ways that affect customer experiences or business outcomes.

Advanced Tooling and Automation

Manual security processes that worked for small teams become bottlenecks. They constrain business growth. They create security gaps as organizational complexity increases. Strategic technology investments provide force multiplication that enables security teams to handle increasing responsibility without proportional increases in manual effort.

Security Information and Event Management systems centralize logging and monitoring across all organizational systems, providing unified visibility into security events and enabling coordinated analysis that would be impossible with isolated monitoring approaches, and SIEM implementation for AI organizations must account for the unique logging and monitoring requirements of machine learning systems, including model performance metrics, data pipeline monitoring, and AI-specific threat detection patterns.

AI security platforms provide specialized capabilities for protecting machine learning systems that general-purpose security tools can't address effectively, and these platforms typically include adversarial attack detection, model integrity verification, bias monitoring, and privacy compliance features designed specifically for artificial intelligence workloads, while investment in specialized AI security tools becomes cost-effective as the organization's dependence on AI increases and the potential impact of AI-specific attacks grows.

Compliance automation tools streamline the evidence collection, control monitoring, and audit preparation processes that become increasingly important as organizations pursue enterprise customers and industry certifications, where automated compliance monitoring provides continuous visibility into control effectiveness while reducing the manual effort required for audit preparation and regulatory reporting.

| Tool Category | Investment Range | Primary Benefits | Key Selection Criteria |

|---|---|---|---|

| SIEM Platform | $50K-$200K annually | Centralized monitoring, threat detection | AI workload support, scalability |

| AI Security Platform | $100K-$500K annually | Model protection, adversarial detection | Integration capabilities, expertise |

| Compliance Automation | $25K-$100K annually | Audit preparation, control monitoring | Framework support, automation depth |

Expanding Governance and Compliance

Governance expansion transforms basic security oversight into comprehensive risk management that supports business objectives while meeting increasingly complex regulatory and customer requirements that enterprise-focused organizations must navigate successfully.

Audit preparation becomes a systematic process rather than a crisis-driven scramble when compliance deadlines approach, and gap analysis against chosen frameworks like SOC 2 or ISO 27001 identifies specific improvements needed for certification while providing roadmaps for systematic compliance development, while policy and procedure documentation creates the formal frameworks that auditors expect while ensuring that actual practices align with documented intentions.

Vendor risk management addresses the growing complexity of third-party relationships that can introduce security risks through data sharing, system integration, or service dependencies, and formal vendor security assessment processes evaluate potential partners before contracts are signed, while ongoing monitoring ensures that vendor security posture remains acceptable throughout the relationship lifecycle.

The vendor risk management process must account for AI-specific considerations like training data sources, model development partnerships, and specialized service providers that may have access to sensitive intellectual property or customer data through their work with AI systems.

Phase 3: Enterprise-Grade Capabilities (Months 36-60)

The enterprise phase transforms security. It shifts from a protective function into a strategic capability. This capability directly enables business growth, competitive differentiation, and market expansion. Organizations at this maturity level use security capabilities as tools for creating value rather than simply managing risk.

Strategic Security Leadership

Security leadership evolution requires fundamental shifts in how security organizations think about their role, measure their success, and contribute to overall business objectives, and the transition from cost center thinking to value creation requires new skills, metrics, and relationships that many security professionals find challenging.

Product differentiation through security capabilities creates competitive advantages that can command premium pricing and access markets that competitors cannot enter, because AI systems designed with security at their core can handle more sensitive data, support higher-risk use cases, and meet stringent regulatory requirements that create barriers to entry for organizations with weaker security capabilities.

Market expansion becomes possible when comprehensive security capabilities meet the requirements of enterprise customers, regulated industries, and international markets that demand high security standards, and healthcare organizations, financial institutions, and government agencies often require security certifications and capabilities that take years to develop, creating significant competitive moats for organizations that invest in comprehensive security programs.

Risk enablement represents a fundamental shift from risk avoidance to risk management that enables innovation and business growth, where mature security programs provide frameworks for evaluating and managing risks associated with new AI applications, emerging technologies, and market expansion opportunities that would be too dangerous without proper security foundations.

Executive integration ensures that security considerations influence strategic decisions rather than being afterthoughts that create implementation delays or constraints, and security leaders who report directly to CEOs or board members can contribute to merger and acquisition decisions, strategic partnerships, and product development strategies in ways that create business value while maintaining appropriate risk management.

Industry leadership positions organizations as trusted advisors and thought leaders who help shape the future of AI security rather than simply reacting to developments created by others, and standards development participation, research publication, and conference speaking create relationships and reputation that support business development while contributing to the broader advancement of AI security knowledge.

Advanced Technical Architecture

Enterprise-grade security architecture requires comprehensive approaches. It addresses every aspect of AI system security. It remains manageable and cost-effective. Zero-trust architectures provide frameworks for systematic security that don't rely on perimeter defenses or implicit trust relationships.

Identity and access management for AI systems must account for both human users and automated systems that access models, training data, and inference capabilities, where multi-factor authentication, role-based access controls, and just-in-time access provisioning create security layers that protect against both external attacks and insider threats while maintaining operational efficiency.

Network security for AI workloads requires microsegmentation approaches that isolate different components while enabling necessary communication, and encrypted communication between components, network behavior analysis, and software-defined perimeters provide defense in depth that protects against network-based attacks while supporting the distributed computing patterns that AI systems typically require.

Data protection capabilities must address the full lifecycle of AI training and inference data, from initial collection through model deployment and eventual retirement, where end-to-end encryption, privacy-preserving computation techniques, automated classification, and data loss prevention create comprehensive protection that addresses both regulatory requirements and intellectual property concerns.

Model security represents the most unique aspect of AI security architecture, requiring specialized approaches that don't exist in traditional IT security, and model integrity verification, adversarial robustness testing, continuous monitoring for model drift, and secure deployment pipelines address the specific threats that target artificial intelligence systems.

AI-Powered Security Operations

Enterprise security organizations increasingly use AI technologies. They enhance their own capabilities. Feedback loops emerge where artificial intelligence improves the security of AI systems while reducing operational overhead and improving threat detection effectiveness.

Machine learning for threat detection enables security operations centers to identify sophisticated attacks that would overwhelm human analysts while reducing false positive rates that create alert fatigue and reduce operational efficiency, and advanced analytics can identify subtle patterns in network traffic, user behavior, and system performance that indicate potential security incidents before they become critical problems.

Automated incident response systems learn from human decisions and gradually take on more responsibility for routine security events, freeing human analysts to focus on complex investigations and strategic improvements, while natural language processing analyzes security intelligence feeds, vulnerability reports, and threat research to extract actionable insights that inform defensive strategies and tactical responses.

Predictive security analytics anticipate potential risks and threats before they materialize, enabling proactive risk management that prevents incidents rather than simply responding to them after they occur, and these capabilities require sophisticated data integration, advanced analytics, and close collaboration between security teams and business units that provide context about risk tolerance and business priorities.

| AI Security Application | Maturity Level | Implementation Complexity | Business Impact |

|---|---|---|---|

| Automated Threat Detection | High | Medium | High |

| Incident Response Automation | Medium | High | Medium |

| Predictive Risk Analytics | Low | Very High | Very High |

| Intelligence Analysis | Medium | Medium | Medium |

Global Operations and Compliance

Enterprise organizations must navigate complex regulatory environments, cultural differences, and operational challenges that span multiple jurisdictions with different legal requirements, security standards, and business practices.

Multi-region security management requires balancing consistent security standards with local adaptation needs that address specific regulatory requirements, cultural expectations, and operational constraints, and data residency requirements, privacy regulations, and cybersecurity frameworks vary significantly across countries and regions, creating compliance complexity that requires specialized expertise and systematic management approaches.

Regional security teams combine local knowledge with global standards, ensuring that security implementations respect cultural norms and regulatory requirements while maintaining the consistency needed for effective risk management and operational efficiency, where global policies provide frameworks that enable local adaptation without compromising security effectiveness or creating unnecessary complexity that reduces operational efficiency.

Advanced privacy technologies enable organizations to leverage sensitive data for AI purposes while meeting stringent privacy requirements that vary across jurisdictions, and homomorphic encryption, secure multi-party computation, differential privacy, and zero-knowledge proofs provide mathematical guarantees that protect individual privacy while enabling valuable business applications that would otherwise be impossible due to privacy constraints.

The implementation of privacy-preserving technologies requires significant investment in specialized expertise, computational infrastructure, and development processes that most organizations find challenging, but these investments can create competitive advantages by enabling business models and market access that competitors cannot achieve without similar privacy capabilities.

Implementation Strategy and Best Practices

Best Practice: Following these recommended practices will help you achieve optimal results and avoid common pitfalls.

Successful security maturity development requires systematic approaches. Balance immediate needs with long-term objectives. Avoid common pitfalls. These pitfalls can derail progress or create expensive problems that take years to resolve properly.

Assessment and Planning

Current state assessment provides the foundation for effective maturity development by establishing baseline understanding of capabilities, risks, and requirements before beginning improvement initiatives that may otherwise address the wrong problems or create new vulnerabilities.

Comprehensive assessment examines security capabilities, risk exposure, compliance status, and business context to understand the organization's security posture relative to its objectives and requirements, and technology architecture analysis evaluates how well current systems support security requirements and future scaling needs, while team skills assessment identifies training needs and hiring priorities that will be necessary for successful maturity development.

Strategic planning translates assessment results into actionable roadmaps. These roadmaps align security investments with business objectives. Maturity development remains realistic and achievable within resource constraints. Vision setting defines the desired future state while gap analysis identifies specific improvements needed to achieve maturity objectives within realistic timeframes.

Success metrics establish measurable goals. They enable progress tracking and value demonstration. They provide feedback for continuous improvement and strategic adjustment based on actual results rather than assumptions about security program effectiveness.

Resource Allocation and Investment

Strategic resource allocation maximizes security program effectiveness while maintaining fiscal responsibility that supports long-term sustainability and business growth objectives.

Budget allocation patterns for AI security typically follow predictable ranges based on organizational size, growth stage, and risk profile, where early-stage organizations typically invest 2-4% of revenue in security capabilities, while mature enterprises may invest 6-8% to maintain competitive advantages and support complex compliance requirements.

People investments represent the largest component of security budgets. They provide the greatest long-term value. Capability development emerges that can't be easily replicated by competitors. Hiring senior talent early establishes proper foundations while balancing generalists and specialists ensures comprehensive coverage without excessive costs or unnecessary complexity.

Technology investments focus on automation and scalability that provide force multiplication for human capabilities while addressing AI-specific security requirements that general-purpose tools can't handle effectively, and strategic tool selection emphasizes integration capabilities, vendor stability, and long-term support rather than cutting-edge features that may not provide lasting value.

Process development investments create the frameworks and procedures that enable effective use of people and technology investments while ensuring consistent security outcomes across different teams and business units, where policy development, training programs, and measurement systems provide the infrastructure needed for sustainable security program operation and continuous improvement.

Change Management and Culture

Cultural transformation often determines whether security maturity initiatives succeed or fail. Technical capabilities without supporting culture create security programs. These programs look impressive but fail to provide effective protection or business value.

Leadership modeling sets organizational tone through executive behaviors that demonstrate genuine commitment to security rather than just policy compliance or regulatory requirements, and consistent executive support for security investments, participation in security activities, and integration of security considerations into strategic decisions create cultural patterns that influence behavior throughout the organization.

Education programs ensure that every employee understands their role in maintaining security while building the knowledge needed for security-conscious decision-making in daily work activities, and security training integrated into career development programs creates positive associations while ensuring that security knowledge remains current as threats and technologies evolve.

Accountability mechanisms translate security culture into measurable behaviors and outcomes. These outcomes can be managed and improved over time. Clear role definitions, performance metrics, and consequence systems create frameworks for consistent security behavior while providing recognition for security contributions that support organizational objectives.

Common Pitfalls and Recovery Strategies

Understanding failure patterns enables organizations to avoid expensive mistakes. It helps develop recovery strategies. Problems inevitably arise during complex organizational transformations.

Scaling too quickly represents the most common failure mode, where organizations attempt to implement advanced capabilities before establishing proper foundations, and symptoms include security tools that don't integrate effectively, processes that can't be followed consistently, and teams that become overwhelmed by complexity that exceeds their ability to manage effectively.

Recovery requires returning to foundational capabilities and building systematic approaches that can support more advanced features, and this often means accepting temporary capability reduction while establishing sustainable foundations that enable long-term success.

Technology over strategy creates expensive tool collections. These collections don't provide measurable security improvement or business value. Organizations with this problem typically experience high costs, alert fatigue, and security teams that spend more time managing tools than improving security outcomes.

Recovery focuses on strategic alignment that determines technology needs based on risk assessment and business objectives rather than feature comparisons or vendor marketing claims, and this may require replacing expensive tools with simpler alternatives that provide better value and integration capabilities.

Compliance over security creates programs that pass audits. They fail to reduce actual risks or improve business outcomes. These organizations often experience security incidents despite compliance certifications because their security measures address regulatory requirements rather than actual threats.

Recovery requires refocusing on risk-based approaches that achieve compliance as a beneficial outcome of effective security practices rather than as the primary objective that drives security decisions and investments.

The Path Forward

AI security maturity development requires sustained commitment. Strategic thinking drives it. Systematic execution transforms security from a necessary burden into a competitive capability that enables business growth and market differentiation.

The journey from startup security to enterprise excellence typically takes three to five years of consistent investment and systematic development, and organizations that attempt to accelerate this timeline through expensive shortcuts often create security debt that becomes increasingly costly to address as business growth creates additional complexity and risk exposure.

Success requires executive commitment that goes beyond budget approval to include active participation in security strategy development and sustained support through the inevitable challenges that arise during organizational transformation, because security maturity development affects every aspect of business operations and requires change management approaches that address both technical and cultural dimensions of organizational evolution.

The rewards for successful security maturity development extend far beyond risk reduction to include market access, competitive differentiation, customer trust, and operational capabilities that directly support business growth and profitability, and organizations with mature security programs consistently outperform their peers across multiple business dimensions while achieving superior risk management outcomes that provide confidence for innovation and growth.

Your security maturity journey represents one of the most important investments your organization will make. It determines future competitiveness. It shapes sustainability. The time to begin is now, before competitive pressures and regulatory requirements make strategic security development more difficult and expensive to achieve effectively.